Wednesday, September 2nd 2020

NVIDIA Announces GeForce Ampere RTX 3000 Series Graphics Cards: Over 10000 CUDA Cores

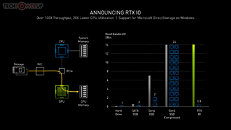

NVIDIA just announced its new generation GeForce "Ampere" graphics card series. The company is taking a top-down approach with this generation, much like "Turing," by launching its two top-end products, the GeForce RTX 3090 24 GB and the GeForce RTX 3080 10 GB graphics cards. Both cards are based on the 8 nm "GA102" silicon. Join us as we live blog the pre-recorded stream by NVIDIA, hosted by CEO Jen-Hsun Huang.

Update 16:04 UTC: Fortnite gets RTX support. NVIDIA demoed an upcoming update to Fortnite that adds DLSS 2.0, ambient occlusion, and ray-traced shadows and reflections. Coming soon.

Update 16:06 UTC: NVIDIA Reflex technology works to reduce e-sports game latency. Without elaborating, NVIDIA spoke of a feature that works to reduce input and display latencies "by up to 50%". The first supported games will be Valorant, Apex Legends, Call of Duty Warzone, Destiny 2 and Fortnite, in September.

Update 16:07 UTC: Announcing NVIDIA G-SYNC eSports Displays: a 360 Hz IPS dual-driver panel that launches through various monitor partners this fall. The display has a built-in NVIDIA Reflex precision latency analyzer.

Update 16:07 UTC: NVIDIA Broadcast is a brand new app, available in September, that is a turnkey solution to enhance video and audio streaming by taking advantage of the AI capabilities of GeForce RTX. It makes it easy to filter and improve your video and add AI-based backgrounds (static or animated), and it builds on RTX Voice to filter out background noise from audio.

Update 16:10 UTC: Ansel evolves into Omniverse Machinima, an asset exchange that helps independent content creators use game assets to create movies. Think fan-fiction Star Trek episodes using Star Trek Online assets. Beta in October.

Update 16:15 UTC: Updates to the AI tensor cores and RT cores. In addition to more RT and tensor cores, the 2nd generation RT cores and 3rd generation tensor cores offer higher IPC. Making ray-tracing have as little performance impact as possible appears to be an engineering goal with Ampere.

Update 16:18 UTC: Ampere 2nd Gen RTX technology. Traditional shaders are 2.7x faster, raytracing units are 1.7x faster and the tensor cores bring a 2.7x speedup.

Update 16:19 UTC: Here it is! Samsung 8 nm and Micron GDDR6X memory. The announcement of Samsung and 8 nm came out of nowhere, as we were widely expecting TSMC 7 nm. Apparently NVIDIA will use Samsung for its Ampere client-graphics silicon, and TSMC for lower volume A100 professional-level scalar processors.

Update 16:20 UTC: Ampere has almost twice the performance per Watt compared to Turing!

Update 16:21 UTC: Marbles 2nd Gen demo is jaw-dropping! NVIDIA demonstrated it at 1440p 30 Hz, or 4x the workload of first-gen Marbles (720p 30 Hz).

Update 16:23 UTC: Cyberpunk 2077 is playing big on the next generation. NVIDIA is banking extensively on the game to highlight the advantages of Ampere. The 200 GB game could absorb gamers for weeks or months on end.

Update 16:24 UTC: New RTX IO technology accelerates the storage sub-system for gaming. This works in tandem with the new Microsoft DirectStorage technology, which is the Windows API version of the Xbox Velocity Architecture, and is able to pull resources directly from disk into the GPU. It requires game engines to support the technology. The tech promises a 100x throughput increase, and significant reductions in CPU utilization. It's timely as PCIe gen 4 SSDs are on the anvil.

Update 16:26 UTC: Here it is, the GeForce RTX 3080, 10 GB GDDR6X, running at 19 Gbps, 238 tensor TFLOPs, 58 RT TFLOPs, 18 power phases.

Update 16:29 UTC: Airflow design. 90 W more cooling performance than Turing FE cooler.

Update 16:30 UTC: Performance leap, $700. 2x as fast as RTX 2080, available September 17. Up to 2x faster than the original RTX 2070.

Update 17:05 UTC: GDDR6X was purpose-developed by NVIDIA and Micron Technology, which could be an exclusive vendor of these chips to NVIDIA. These chips use the new PAM4 encoding scheme to significantly increase data-rates over GDDR6. On the RTX 3090, the chips tick at 19.5 Gbps (data rates), with memory bandwidths approaching 940 GB/s.

Update 16:31 UTC: RTX 3070, $500, faster than RTX 2080 Ti, 60% faster than RTX 2070, available in October. 20 shader TFLOPs, 40 RT TFLOPs, 163 tensor TFLOPs, 8 GB GDDR6.

Update 16:33 UTC: Call of Duty: Black Ops Cold War is RTX-on.

Update 16:35 UTC: RTX 3090 is the new TITAN. Twice as fast as RTX 2080 Ti, 24 GB GDDR6X. The Giant Ampere. A BFGPU, $1500, available from September 24. It is designed to power 60 fps at 8K resolution, up to 50% faster than Titan RTX.
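
Two of the figures quoted in the updates above can be sanity-checked with quick arithmetic. The sketch below is only an illustration, not anything NVIDIA showed: the 384-bit memory bus width for the RTX 3090 is an assumption not stated in this post, as are the 16:9 pixel dimensions used for "1440p" and "720p". The function names are made up for this example.

```python
def memory_bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s.

    Per-pin data rate (Gbit/s) times the number of bus pins (bits),
    divided by 8 to convert bits to bytes.
    """
    return data_rate_gbps * bus_width_bits / 8


def pixel_ratio(res_a: tuple, res_b: tuple) -> float:
    """How many times more pixels per frame res_a has than res_b."""
    return (res_a[0] * res_a[1]) / (res_b[0] * res_b[1])


# Assumption: the RTX 3090 uses a 384-bit memory bus (not stated in the post).
rtx_3090_bandwidth = memory_bandwidth_gb_s(19.5, 384)

# Assumption: "1440p" means 2560x1440 and "720p" means 1280x720 (16:9).
marbles_ratio = pixel_ratio((2560, 1440), (1280, 720))

print(f"RTX 3090 peak memory bandwidth: {rtx_3090_bandwidth:.0f} GB/s")
print(f"Marbles pixel workload ratio at the same 30 Hz: {marbles_ratio:.0f}x")
```

Under those assumptions the bandwidth works out to 936 GB/s, in line with the "approaching 940 GB/s" figure, and the pixel count per frame is four times higher at 1440p than at 720p, which matches the "4x the workload" claim at an unchanged 30 Hz.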

Update 16:43 UTC: Wow, I want one. On paper, the RTX 3090 is the kind of card I want to upgrade my monitor for. Not sure if a GPU ever had that impact.

Update 16:59 UTC: Insane CUDA core counts, 2-3x increase generation-over-generation. You won't believe these.

Update 17:01 UTC: GeForce RTX 3090 in the details. Over Ten Thousand CUDA cores!

Update 17:02 UTC: GeForce RTX 3080 details. More insane specs.

Update 17:03 UTC: The GeForce RTX 3070 has more CUDA cores than a TITAN RTX. And it's $500. Really wish these cards came out in March. 2020 would've been a lot better.

Here's a list of the top 10 Ampere features.

Update 19:22 UTC: For a limited time, gamers who purchase a new GeForce RTX 30 Series GPU or system will receive a PC digital download of Watch Dogs: Legion and a one-year subscription to the NVIDIA GeForce NOW cloud gaming service.

Update 19:47 UTC: All Ampere cards support HDMI 2.1. The increased bandwidth provided by HDMI 2.1 allows, for the first time, a single cable connection to 8K HDR TVs for ultra-high-resolution gaming. Also supported is AV1 video decode.

Update 20:06 UTC: Added the complete NVIDIA presentation slide deck at the end of this post.

Update Sep 2nd: We received the following info from NVIDIA regarding international pricing:

- UK: RTX 3070: GBP 469, RTX 3080: GBP 649, RTX 3090: GBP 1399

- Europe: RTX 3070: EUR 499, RTX 3080: EUR 699, RTX 3090: EUR 1499 (this might vary a bit depending on local VAT)

- Australia: RTX 3070: AUD 809, RTX 3080: AUD 1139, RTX 3090: AUD 2429

502 Comments on NVIDIA Announces GeForce Ampere RTX 3000 Series Graphics Cards: Over 10000 CUDA Cores

RX570 for $120 has 8GB FFS and I already ditched my 6GB card because it ran out of VRAM.

And who cares about VRAM? It's not like it affects your performance in any noticeable way, even if you had 20GB you likely wouldn't notice the single digit FPS bump.

The 3080S 20GB / 3070S 16GB models are what you should keep an eye out for, if AMD forces them.

My upgrade path always used to be when 1 card (reasonably priced or if funds are available) = 2 older gen cards I have in SLI.

Dual 8800 GTS 512MB in SLI roughly equals 1 GTX 280

Add a second GTX 280 for SLI roughly equals 1 GTX 570

Not having funds I didn't upgrade my 570s to a 780Ti and waited for next gen....then jumped on a 980Ti and I've been using it since.

I've been waiting for a single card priced in the $500 range that can give twice the performance of my 980Ti and a 2080Ti is that card, but not in the $1000+ price range. Hell no.

If the 3070 or even an AMD equivalent card around the same price can give me double the performance of my 980Ti and cost is around $500, then this generation will be the one I finally upgrade my GPU.

Also, who is joining me on the hype train, choo choo? But be warned, the hype train is really hot :p

What really took me by surprise was the CUDA core count. I did not foresee that Ampere would have this many cores. This also explains why the RTX 3080 and 3090 are 300 W+ TDP cards. No doubt, with that many CUDA cores, Ampere is going to be a serious beast. The RTX 3080 also surprised me with its price. Not so much Ngreedia this time as I had feared. The RTX 3080 looks on paper like a solid 4K GPU, although I do have my concerns about only 10 GB of VRAM for future-proofing over the next two years. There are already games that use 8 GB+ of VRAM at 4K, and Microsoft Flight Simulator is already close to 10 GB at 4K. But besides the VRAM amount, Ampere looks really solid.

Sorry GTX 1080 Ti, but I think it's time our ways go in different directions... no, don't look at me like I am betraying you.

When I asked three friends of mine some time back what they would think about the 3000 series keeping the previous release prices, all of them were more or less like 'meh, a lower price would be nice, but it is expected that the price shouldn't change'. After the price leak, and with NVIDIA now denying it, all of them are amazed by the price.

Feels almost as if the leak was a marketing trick :)

Cyberpunk might just fit into 10GB but I suspect games in 2021 are going to be pushing 12GB with the console devs targeting that for VRAM allocation.

Everybody is so frantic about the 30xx pricing, where the truth is this is the good old Pascal pricing coming back to replace the insanity that Turing was.

Although large performance gains in new generations are not unheard of.

The point is ~ if Nvidia could price it to Turing levels they'd almost certainly do so.