Friday, September 25th 2020

RTX 3080 Crash to Desktop Problems Likely Connected to AIB-Designed Capacitor Choice

Igor's Lab has posted an interesting investigative article in which Igor advances a possible reason for the crash-to-desktop problems recently reported by RTX 3080 owners. First, Igor notes that launch timings were much tighter than usual, leaving NVIDIA's AIB partners much less time than they needed to prepare and thoroughly test their designs. One reason for this was apparently that NVIDIA released the compatible driver stack to AIB partners much later than usual. As a result, their testing and QA of production RTX 3080 cards was mostly limited to power-on and voltage-stability tests rather than actual gaming or graphics workloads. This may have allowed some less-than-stellar chip samples to end up on some companies' OC products, which, with their higher operating frequencies and the resulting broadband frequency mixtures, hit the apparent 2 GHz frequency wall that produces the crash to desktop.

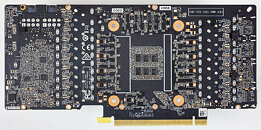

Another reason, according to Igor, is the actual "reference board" PG132 design, which serves as the "Base Design" that partners build their custom cards around. Apparently, NVIDIA's BOM left partners a choice of which capacitors to mount for power cleanup and regulation. The Base Design features six mandatory capacitors for filtering high frequencies on the voltage rails (NVVDD and MSVDD), and several capacitor types, with varying levels of capability, can be installed there. POSCAPs (Conductive Polymer Tantalum Solid Capacitors) are generally worse than SP-CAPs (Conductive Polymer Aluminium Electrolytic Capacitors), which are in turn surpassed in quality by MLCCs (Multilayer Ceramic Chip Capacitors, which have to be deployed in groups). This circuitry sits beneath the BGA array where NVIDIA's GA102 chip is seated, which corresponds to the central area on the back of the PCB.

In the images below, you can see how NVIDIA and its AIBs designed this regulator circuitry (NVIDIA Founders Edition, MSI Gaming X, ZOTAC Trinity, and ASUS TUF Gaming OC, in that order, from our reviews' high-resolution teardowns). NVIDIA's Founders Edition uses a hybrid capacitor deployment, with four SP-CAPs and two MLCC groups of 10 individual capacitors each in the center. MSI uses a single MLCC group in the central arrangement, with five SP-CAPs handling the rest of the cleanup duties. ZOTAC went the cheapest way (which may be one reason their cards are also among the cheapest), with a six-POSCAP design (which, remember, are worse than MLCCs). ASUS, however, designed its TUF with six MLCC arrangements; no savings were made in this area of the power circuitry.

It's likely that the crash-to-desktop problems are related to both of these issues. This would also explain why some cards stop crashing when underclocked by 50-100 MHz: at lower frequencies (which generally keep boost clocks below the 2 GHz mark) there is less broadband frequency mixture happening, which means POSCAP solutions can do their job, even if just barely.
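To make the POSCAP-versus-MLCC comparison a bit more concrete, here is a minimal Python sketch that models each capacitor as an ideal capacitance in series with its equivalent series resistance (ESR) and equivalent series inductance (ESL). The component values (330 µF / 3 mΩ / 1.5 nH for a polymer part, 10 µF / 2 mΩ / 0.3 nH per MLCC) are hypothetical round numbers chosen for illustration, not datasheet figures for the parts on these cards, and the function names are made up for this example.

```python
import math

def series_rlc_impedance(f_hz, c_farads, esr_ohms, esl_henries):
    """Complex impedance of one capacitor modeled as ESR + ESL + C in series."""
    w = 2 * math.pi * f_hz
    return complex(esr_ohms, w * esl_henries - 1 / (w * c_farads))

def self_resonant_frequency(c_farads, esl_henries):
    """Frequency where the ESL and C reactances cancel: 1 / (2*pi*sqrt(L*C))."""
    return 1 / (2 * math.pi * math.sqrt(esl_henries * c_farads))

def bank_impedance(f_hz, n, c_farads, esr_ohms, esl_henries):
    """n identical capacitors in parallel: the single-part impedance divided by n."""
    return series_rlc_impedance(f_hz, c_farads, esr_ohms, esl_henries) / n

# Hypothetical component values for illustration only (not datasheet figures).
POLYMER = dict(c_farads=330e-6, esr_ohms=3e-3, esl_henries=1.5e-9)
MLCC = dict(c_farads=10e-6, esr_ohms=2e-3, esl_henries=0.3e-9)

if __name__ == "__main__":
    for name, part, n in (("1 polymer cap", POLYMER, 1), ("group of 10 MLCCs", MLCC, 10)):
        srf = self_resonant_frequency(part["c_farads"], part["esl_henries"])
        print(f"{name}: self-resonance ~ {srf / 1e6:.2f} MHz")
        for f in (1e5, 1e6, 1e7, 1e8):
            z = abs(bank_impedance(f, n, **part))
            print(f"  |Z| at {f:.0e} Hz = {z * 1e3:.3f} mOhm")
```

With these assumed values, the single polymer cap resonates at a much lower frequency, while at high frequencies, where ESL dominates, the group of ten MLCCs presents a far lower impedance. This is only a first-order model; real boards also add mounting and via inductance.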

Source:

Igor's Lab


297 Comments on RTX 3080 Crash to Desktop Problems Likely Connected to AIB-Designed Capacitor Choice

Having everything on MLCCs, as the glorified ASUS does, means you get a single deep resonance notch, instead of two less prominent notches when using MLCC+POSCAP together. Using three kinds (smaller POSCAPs, bigger POSCAPs, and some MLCCs) gives a better figure with three notches. But again, with modern DC-DC controllers a lot of this can be tuned via the PID control and converter slew-rate tweaks. This adjustability is one of the big reasons why enthusiast cards often use "digital" controllers, which allow such parameters to be tweaked almost on the fly. However, this is almost never exposed to the user, as wrong settings can easily make the power phases go brrrrrr with smoke. Don't ask me how I know...
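The point about resonance notches can be illustrated with a rough sketch: combine several capacitor branches in parallel (each again modeled as series ESR, ESL, and C), sweep the frequency, and count the local minima of |Z|. The part values and counts (60 identical MLCCs versus 4 polymer caps plus 20 MLCCs) are hypothetical, and `count_notches` is an illustrative helper, not a tool anyone in the thread used.

```python
import math

def branch_impedance(f_hz, c, esr, esl):
    """One capacitor as ESR + ESL + C in series."""
    w = 2 * math.pi * f_hz
    return complex(esr, w * esl - 1 / (w * c))

def parallel_impedance(f_hz, branches):
    """branches: list of (count, c, esr, esl); parallel parts add as admittances."""
    admittance = sum(n / branch_impedance(f_hz, c, esr, esl) for n, c, esr, esl in branches)
    return 1 / admittance

def log_sweep(f_start, f_stop, points):
    """Logarithmically spaced frequencies from f_start to f_stop inclusive."""
    ratio = (f_stop / f_start) ** (1 / (points - 1))
    return [f_start * ratio**i for i in range(points)]

def count_notches(branches, f_start=1e3, f_stop=1e9, points=2000):
    """Count local minima of |Z| over the sweep (hypothetical helper)."""
    mags = [abs(parallel_impedance(f, branches)) for f in log_sweep(f_start, f_stop, points)]
    return sum(
        1 for i in range(1, len(mags) - 1)
        if mags[i] < mags[i - 1] and mags[i] < mags[i + 1]
    )

# Hypothetical part models: (capacitance in F, ESR in ohm, ESL in H).
MLCC = (10e-6, 2e-3, 0.3e-9)
POLYMER = (330e-6, 3e-3, 1.5e-9)

ALL_MLCC = [(60, *MLCC)]              # six groups of ten MLCCs
MIXED = [(4, *POLYMER), (20, *MLCC)]  # four polymer caps plus two MLCC groups

if __name__ == "__main__":
    print("all-MLCC notches:", count_notches(ALL_MLCC))
    print("mixed notches:", count_notches(MIXED))
```

Under these assumptions the all-MLCC bank shows one notch and the mixed bank shows two, separated by an anti-resonance peak where the polymer branch has already turned inductive while the MLCC branch is still capacitive. How tall that peak gets depends strongly on the ESR values, which is why mixing parts is a tuning exercise rather than automatically better or worse.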

Everybody is going nuts over MLCC vs. POSCAP, but I haven't seen a single note that the actual boards use DIFFERENT capacitances and capacitor models, e.g. some use 220uF, some use 470uF :) There are 680 or even 1000uF capacitors in D case on the market that can be used behind the GPU die. It is impossible to fit that much capacitance with MLCCs in the same spot; for example, the largest cap in 0603 is 47uF.
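The capacitance argument is simple arithmetic. A small sketch, taking the 47uF-per-0603 figure above as given and using nominal values only (it ignores the fact that class II MLCCs lose a significant part of their rated capacitance under DC bias); the helper names are illustrative:

```python
import math

def mlccs_needed(target_uf: float, mlcc_uf: float = 47.0) -> int:
    """Identical MLCCs in parallel needed to reach target_uf (nominal values only)."""
    return math.ceil(target_uf / mlcc_uf)

def bank_capacitance_uf(values_uf: list[float]) -> float:
    """Capacitors in parallel simply add up."""
    return sum(values_uf)

if __name__ == "__main__":
    for target in (220, 470, 680, 1000):
        print(f"{target} uF ~ {mlccs_needed(target)} x 47 uF MLCCs")
    print("six 220 uF caps:", bank_capacitance_uf([220] * 6), "uF")
    print("six 470 uF caps:", bank_capacitance_uf([470] * 6), "uF")
```

Even on paper, matching a single 470uF polymer cap takes ten 0603 MLCCs, which is why the same footprint cannot hold the same capacitance.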

Before looking at the poor six capacitors behind the die, why is nobody talking about the huge POSCAP capacitor bank behind the VRM on the FE card, eh? Custom AIB cards don't have that, just the usual array without much bulk capacitance. If I were designing a card, I'd look at the GPU's power demands and first add enough bulk capacitance to ensure a good power impedance margin in the mid-frequency range, and only later worry about the capacitors for high-frequency decoupling, as that is a relatively easier job to tweak.
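The "power impedance margin" idea is commonly expressed through a target impedance: the allowed ripple voltage divided by the expected load-current step. The sketch below applies that rule to estimate how many bulk capacitors a bank needs to stay under the target across a mid-frequency band. The rail voltage, ripple budget, current step, frequency band, and capacitor model are all hypothetical placeholders, not GA102 or AIB figures, and in a real design the VRM's own control loop covers the low end of the spectrum.

```python
import math

def target_impedance(v_rail: float, ripple_fraction: float, i_step: float) -> float:
    """Target impedance in ohms: allowed ripple voltage / transient current step."""
    return v_rail * ripple_fraction / i_step

def cap_impedance(f_hz: float, c: float, esr: float, esl: float) -> complex:
    """One capacitor as ESR + ESL + C in series."""
    w = 2 * math.pi * f_hz
    return complex(esr, w * esl - 1 / (w * c))

def worst_bank_impedance(n: int, c: float, esr: float, esl: float, freqs: list[float]) -> float:
    """Largest |Z| over freqs for n identical capacitors in parallel."""
    return max(abs(cap_impedance(f, c, esr, esl)) / n for f in freqs)

def min_caps_for_target(z_target, c, esr, esl, freqs, n_max=500):
    """Smallest n whose worst-case |Z| over freqs meets z_target, or None."""
    for n in range(1, n_max + 1):
        if worst_bank_impedance(n, c, esr, esl, freqs) <= z_target:
            return n
    return None

# Hypothetical numbers for illustration only.
Z_T = target_impedance(v_rail=0.9, ripple_fraction=0.03, i_step=100.0)  # 0.27 mOhm
MID_BAND = [1e5 * 10 ** (k / 10) for k in range(11)]  # 100 kHz .. 1 MHz
POLYMER = dict(c=330e-6, esr=3e-3, esl=1.5e-9)

if __name__ == "__main__":
    n = min_caps_for_target(Z_T, freqs=MID_BAND, **POLYMER)
    print(f"target {Z_T * 1e3:.3f} mOhm -> {n} polymer caps in parallel")
```

The sketch only mirrors the order of work described in the comment: derive a target from the GPU's power demands, size the bulk bank against it, and leave high-frequency decoupling for later.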

After all, these wild theories are easy to test; you don't need any engineering education to prove them right or wrong. Take a "bad", crashing card with "bad POSCAPs" and test it to confirm the crashes. Then desolder the "bad POSCAPs", put a bunch of 47uF low-ESR MLCCs in their place, and test again to see if it's "fixed". Something tells me it would not be such a simple case and the card may still crash, heh. ;-)

Through lots of reading and practice, and the opportunity to work with the highest-precision parts and measuring tools, I also made my entrance into electrical metrology.

This is the top of the pyramid in that science.

And I won recognition in my sector from the industry itself, as they judged that their blogger, in a way a trainee and early adopter, has true potential to adopt and understand what their high-tech work can do and how it is used.

But here comes the difference between me and others: I spent 30 years preparing myself as a freelance electrician and electronics repairman, studying, practicing, and having a very high success rate when I do repairs or troubleshoot real problems for my local customers.

This is the hard, slow, and painful way for someone to develop skills and understanding.

Today, because of Igor, a German retiree, all the YouTube actors and product reviewers have found a reason to power on their cameras.

But even so, they are clueless about what they are talking about.

And therefore all consumers should simply wait for NVIDIA and its partners to do their own homework; any new decisions will be officially announced to the market no sooner than 40 days from now.

I can solder and desolder anything too, but GPU engineering is something that no one can grasp without being part of NVIDIA's R&D team.

Fifteen years ago, the only term consumers knew was the number of pipelines.

GPU engineering is nothing like becoming the mechanic for your own car; it does not work that way, due to the unimaginable complexity of modern designs.

There is not much need for the highest-precision equipment, though, to capture the Bode plot and response of the relatively slow DC-DC converter used on the 3080/3090 GPUs here. One does need decent differential probes, an injector or high-speed load, and a good scope or Bode plot analyzer :)
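One common use of such a measurement is reading the converter's loop-gain crossover frequency and phase margin off the Bode plot. As a hedged sketch, the code below does that on a made-up loop gain (an integrator term with a 50 kHz unity-gain frequency and one extra pole at 500 kHz) instead of real injector data; the model and the helper names are assumptions for illustration only.

```python
import cmath
import math

def loop_gain(f_hz, f_unity=50e3, f_pole=500e3):
    """Hypothetical loop gain T(f): integrator with unity-gain freq f_unity, times one pole at f_pole."""
    s = 2j * math.pi * f_hz
    return (2 * math.pi * f_unity / s) / (1 + s / (2 * math.pi * f_pole))

def crossover_frequency(gain, f_lo=1.0, f_hi=1e8, iters=100):
    """Bisection on log-frequency for |gain| == 1; assumes |gain| falls monotonically."""
    for _ in range(iters):
        f_mid = math.sqrt(f_lo * f_hi)  # geometric midpoint
        if abs(gain(f_mid)) > 1:
            f_lo = f_mid
        else:
            f_hi = f_mid
    return math.sqrt(f_lo * f_hi)

def phase_margin(gain):
    """Return (phase margin in degrees, crossover frequency in Hz)."""
    fc = crossover_frequency(gain)
    return 180 + math.degrees(cmath.phase(gain(fc))), fc

if __name__ == "__main__":
    pm, fc = phase_margin(loop_gain)
    print(f"crossover ~ {fc / 1e3:.1f} kHz, phase margin ~ {pm:.1f} deg")
```

With measured data you would interpolate between the analyzer's frequency points instead of calling a model function, but the log-frequency bisection works the same way as long as the gain falls monotonically through 0 dB.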

Again, one does not need to know anything about GPU or silicon design to make a good DC-DC converter that meets the chip's power requirements. You can measure all of this in the typical EE lab that all AIBs already have. There is no need to work at NVIDIA to do this, as DC-DC converter design is a very common job in most electronics, be it a GPU, motherboard, console, or TV.

Also, fun fact: MLCC caps produce a lot of acoustic noise. Remember the squeaking cards that customers hate and RMA so much? :)

I got one a year ago, and I even helped develop logging software for it.

3080/3090 GPUs are more complex than the 8846A. :)

Just keep that in mind.

Just so someone doesn't jump the gun and say he's pulling this out of his you know what.

EVGA's stance seems to confirm there is a problem with the choice of capacitors, although maybe the root of the problem is not cheaping out but rather insufficient testing.

On the other hand, FE cards seem to crash, too, so there might be other sources of issues, PSU related or such.

Mostly because users are not aware of the actual health of the PSU in their hands, i.e. how much power it can still deliver given its age.

P.S. My lack of joy with the 8846A was not because of its digital issues, but because I am/was not much interested in it, having way more fun with the 3458A/2002/etc. :) Even a fully working 8846A is quite a poor unit for what it costs...

P.P.S. All above are just my personal ramblings, not related to any AIB point of view.

Brazilian overclocking legend Ronaldo "Rbuass" Buassali pushed his Galax GeForce RTX 3080 SG to 2,340 MHz

www.tomshardware.com/news/rtx-3080-overclock-record

I am aware of your measuring gear, but your accident stopped you from discovering what the 8846A, by far the most modern design, can do.

Anyway, this is another story, and a boring one for the readers of this forum.

This component race somehow makes me wonder whether there are forbidden cheats that don't meet the regulations. Where there is a rule, there is a violation.

Re-manufacturing, uhmmmm

Now the companies will have to show that they made a reliable product that works fine.

Going over 2 GHz seems to be an issue: either the MCU design, a design/process limit, or both.

I personally expect there will be BIOS updates that will lower the maximum boost clock.

This is what TSMC posted a month before. Fluke or coincidence?

Nvidia is trying to reinvent the wheel, maybe...

www.tsmc.com/english/newsEvents/blog_article_20200803.htm