Friday, September 25th 2020

RTX 3080 Crash to Desktop Problems Likely Connected to AIB-Designed Capacitor Choice

Igor's Lab has posted an interesting investigative article in which Igor proposes a possible reason for the recent crash-to-desktop problems reported by RTX 3080 owners. First, Igor notes that the launch timeline was much tighter than usual, leaving NVIDIA's AIB partners far less time than they needed to prepare and thoroughly test their designs. One apparent reason is that NVIDIA released a compatible driver stack to AIB partners much later than usual. As a result, their testing and QA of production RTX 3080 cards was mostly limited to power-on and voltage-stability testing rather than actual gaming/graphics workloads. That may have allowed some less-than-stellar chip samples to end up in some companies' OC products, which, with their higher operating frequencies and the resulting broadband frequency mixing, hit the apparent 2 GHz frequency wall that produces the crash to desktop.

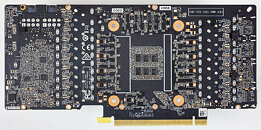

Another reason, according to Igor, is the PG132 "reference board" design itself, which serves as the "Base Design" that partners build their custom cards around. Apparently, NVIDIA's BOM left partners a choice of which capacitors to mount for power cleanup and regulation. The Base Design features six mandatory capacitors for filtering high frequencies on the voltage rails (NVVDD and MSVDD), and several capacitor types with varying levels of capability can be installed there. POSCAPs (Conductive Polymer Tantalum Solid Capacitors) are generally worse than SP-CAPs (Conductive Polymer Aluminium Electrolytic Capacitors), which are in turn surpassed in quality by MLCCs (Multilayer Ceramic Chip Capacitors, which have to be deployed in groups). These capacitors sit beneath the BGA array on which NVIDIA's GA102 chip is seated, which corresponds to the central area on the back of the PCB.

Our reviews' high-resolution teardowns show how NVIDIA and its AIB partners designed this regulator circuitry (NVIDIA Founders Edition, MSI Gaming X, ZOTAC Trinity, and ASUS TUF Gaming OC). In its Founders Edition design, NVIDIA uses a hybrid capacitor deployment, with four SP-CAPs and, in the center, two MLCC groups of 10 individual capacitors each. MSI uses a single MLCC group in the central position, with five SP-CAPs handling the rest of the cleanup duties. ZOTAC went the cheapest route (which may be one of the reasons its cards are also among the cheapest), with a six-POSCAP design (and POSCAPs are worse than MLCCs, remember). ASUS, however, designed its TUF with six MLCC groups; it cut no corners in this area of the power circuitry.

It's likely that the crash-to-desktop problems are related to both of these issues. This would also explain why some cards stop crashing when underclocked by 50-100 MHz: at lower frequencies (which generally keep boost clocks below the 2 GHz mark) there is less broadband frequency mixing, which means POSCAP solutions can do their job, even if just barely.

Source:

Igor's Lab

297 Comments on RTX 3080 Crash to Desktop Problems Likely Connected to AIB-Designed Capacitor Choice

Maybe they did want to beat AMD to market, maybe they thought they were ready for prime time, who knows. Either way maybe the scalpers will be stuck with some excess stock ;)

Time will tell.

Great, they seemed to have the best cooling/noise ratio anyway

No.

Consumers are getting played.

Damn Beta community tactics need to stop.

Even trusted reviewers like Gamers Nexus and JayzTwoCents, who do the extra work to uncover issues that conventional reviewers won't, never had an issue with them?

I think this is just a case of end users trying so hard to overclock their cards, pushing them to whatever high standards they deem acceptable, and then posting negative threads when their cards can't overclock far enough past the default profiles.

The MCUs are simply not binned well enough, or there is an issue with the boost algorithm. The same happened with Turing boards.

The point that Igor makes about improper power design causing instability is a very plausible one, especially with first production runs, where it could indeed be the case that they did not have the time, equipment, drivers, etc. to do proper design verification.

However, concluding from this that POSCAP = bad and MLCC = good is waaay too harsh, and it is a conclusion you cannot draw.

Both POSCAPs (or any other 'solid polymer caps') and MLCCs have their own characteristics and use cases.

Some (not all) are ('+' = pos, '-' = neg):

MLCC:

+ cheap

+ small

+ high voltage rating in small package

+ high current rating

+ high temperature rating

+ high capacitance in small package

+ good at high frequencies

- prone to cracking

- prone to piezo effect

- bad temperature characteristics

- DC bias (capacitance changes a lot under different voltages)

POSCAP:

- more expensive

- bigger

- lower voltage rating

+ high current rating

+ high temperature rating

- less good at high frequencies

+ mechanically very strong (no MLCC cracking)

+ not prone to piezo effect

+ very stable over temperature

+ no DC bias (capacitance very stable at different voltages)

As you can see, both have their strengths and weaknesses, and one is not particularly better or worse than the other. It all depends.
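The "good at high frequencies" / "less good at high frequencies" entries above can be illustrated with the textbook series R-L-C model of a capacitor (ESR, ESL and capacitance). The sketch below compares one large polymer capacitor against a group of ten small MLCCs in parallel. All component values are hypothetical, order-of-magnitude numbers picked for illustration, not taken from any 3080 board or datasheet, and the model ignores mounting and via inductance, which matters a lot on a real PCB.

```python
import math

# HYPOTHETICAL, order-of-magnitude values for illustration only; not taken from
# any RTX 3080 bill of materials or datasheet.
POSCAP = {"c": 330e-6, "esr": 6e-3, "esl": 1.5e-9}  # one large polymer capacitor
MLCC = {"c": 22e-6, "esr": 3e-3, "esl": 0.5e-9}     # one small ceramic capacitor


def parallel(cap, n):
    """Ideal model of n identical capacitors in parallel.

    Capacitance adds, while ESR and ESL divide by n. Real boards add mounting
    and via inductance, which this sketch ignores.
    """
    return {"c": cap["c"] * n, "esr": cap["esr"] / n, "esl": cap["esl"] / n}


def impedance(cap, f):
    """Magnitude of the impedance (ohms) of a series R-L-C capacitor model at f Hz."""
    w = 2 * math.pi * f
    reactance = w * cap["esl"] - 1 / (w * cap["c"])
    return math.hypot(cap["esr"], reactance)


mlcc_group = parallel(MLCC, 10)  # one "MLCC group" of 10 capacitors

for f in (1e3, 1e5, 1e6, 1e7, 1e8):
    z_poscap = impedance(POSCAP, f)
    z_group = impedance(mlcc_group, f)
    lower = "POSCAP" if z_poscap < z_group else "MLCC group"
    print(f"{f / 1e6:10.3f} MHz  POSCAP {z_poscap * 1e3:9.3f} mOhm  "
          f"MLCC x10 {z_group * 1e3:9.3f} mOhm  lower: {lower}")
```

With these assumed numbers, the single POSCAP has the lower impedance at the low end of the sweep thanks to its larger bulk capacitance, while the MLCC group is lower from about 1 MHz upward because its combined ESL is much smaller. Different (real) part values shift the crossover point, which is the "it all depends" above.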

In this case, most of these 3080 and 3090 boards may use the same GPU (with its requirements) but they also have very different power circuits driving the chips on the cards.

Each power solution has its own characteristics and behavior and thus its own requirements in terms of capacitors used.

Thus, you cannot simply say: I want the card with only MLCCs because that is a good design.

It is far more likely that they just could/would not have enough time and/or resources to properly verify their designs, and thus were not able to make proper adjustments to their initial component choices.

This will very likely work itself out in time. For now, just buy the card that you like, and if it fails, simply make a warranty claim. Let them fix the problem, and don't draw too many conclusions based on incomplete information and (educated) guesswork.

Yes, it consumes more, but its perf/watt is the highest of any card; AMD doesn't even come close.

You gotta lay off the Kool-Aid. Pascal was almost 40% better than Maxwell in terms of perf/watt. In comparison, Ampere's improvement in that area over Turing is absolutely pathetic.

I dared to make a preliminary collection of RTX 3000 electrical weak points, and someone from the TPU staff blocked my access to the topic, citing "unproductive comments" as the reason.

Here is another unproductive prediction: massive product returns to base (and there are many of them) = product recalls. They did not demonstrate actual games, just plain cards; someone even made a comparison of the RTX 3080 vs. the GTX 1660 Super at 4K (he is an idiot).