Friday, September 25th 2020

RTX 3080 Crash to Desktop Problems Likely Connected to AIB-Designed Capacitor Choice

Igor's Lab has posted an interesting investigative article advancing a possible reason for the recent crash-to-desktop problems reported by RTX 3080 owners. For one, Igor mentions that the launch timings were much tighter than usual, leaving NVIDIA's AIB partners far less time than they needed to prepare and thoroughly test their designs. One apparent reason is that NVIDIA released the compatible driver stack to AIB partners much later than usual; this meant that their testing and QA for production RTX 3080 graphics cards was mostly limited to power-on and voltage stability testing rather than actual gaming/graphics workload testing. That might have allowed some less-than-stellar chip samples to be used on some of the companies' OC products (which, with higher operating frequencies and consequent broadband frequency mixtures, hit the apparent 2 GHz frequency wall that produces the crash to desktop).

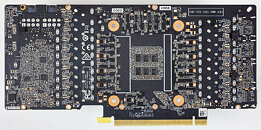

Another reason for this, according to Igor, is the actual "reference board" PG132 design, which serves as the "Base Design" for partners to build their custom cards around. Apparently, NVIDIA's BOM left choices open in terms of power cleanup and regulation in the mounted capacitors. The Base Design features six mandatory capacitors for filtering high frequencies on the voltage rails (NVVDD and MSVDD). There are a number of choices for capacitors to be installed here, with varying levels of capability. POSCAPs (Conductive Polymer Tantalum Solid Capacitors) are generally worse than SP-CAPs (Conductive Polymer Aluminium Electrolytic Capacitors), which are in turn surpassed in quality by MLCCs (Multilayer Ceramic Chip Capacitors, which have to be deployed in groups). Below is the circuitry arrangement employed below the BGA array where NVIDIA's GA102 chip is seated, which corresponds to the central area on the back of the PCB.

In the images below, you can see how NVIDIA and its AIBs designed this regulator circuitry (NVIDIA Founders' Edition, MSI Gaming X, ZOTAC Trinity, and ASUS TUF Gaming OC in order, from our reviews' high resolution teardowns). NVIDIA in their Founders' Edition design uses a hybrid capacitor deployment, with four SP-CAPs and two MLCC groups of 10 individual capacitors each in the center. MSI uses a single MLCC group in the central arrangement, with five SP-CAPs handling the rest of the cleanup duties. ZOTAC went the cheapest way (which may be one of the reasons their cards are also among the cheapest), with a six POSCAP design (which are worse than MLCCs, remember). ASUS, however, designed their TUF with six MLCC arrangements - there were no savings done in this power circuitry area.

It's likely that the crash to desktop problems are related to both these issues - and this would also explain why some cards cease crashing when underclocked by 50-100 MHz, since at lower frequencies (and this will generally lead boost frequencies to stay below the 2 GHz mark) there is less broadband frequency mixture happening, which means POSCAP solutions can do their job - even if just barely.
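
As a rough illustration of why the capacitor mix matters for high-frequency filtering, the sketch below models each capacitor as a simple series R-L-C network (capacitance, ESR, ESL) and compares one polymer-type capacitor against a group of ten MLCCs in parallel. All component values and function names are hypothetical placeholders chosen for illustration, not datasheet figures or measurements from any RTX 3080 board, and the parallel model ignores mounting inductance and DC-bias derating.

```python
import math


def impedance_magnitude(freq_hz, cap_f, esr_ohm, esl_h):
    """|Z| of a capacitor modeled as a series R-L-C network."""
    omega = 2 * math.pi * freq_hz
    reactance = omega * esl_h - 1.0 / (omega * cap_f)
    return math.hypot(esr_ohm, reactance)


def self_resonant_freq(cap_f, esl_h):
    """Frequency where the inductive and capacitive reactances cancel."""
    return 1.0 / (2 * math.pi * math.sqrt(cap_f * esl_h))


def parallel_group(cap_f, esr_ohm, esl_h, count):
    """Idealized group of identical parts in parallel: C*n, ESR/n, ESL/n."""
    return cap_f * count, esr_ohm / count, esl_h / count


# Hypothetical, illustrative values only (NOT taken from any datasheet).
POLYMER = (330e-6, 6e-3, 1.5e-9)   # one bulk polymer cap: C [F], ESR [ohm], ESL [H]
MLCC_SINGLE = (10e-6, 5e-3, 0.5e-9)  # one ceramic cap, effective capacitance
MLCC_GROUP = parallel_group(*MLCC_SINGLE, count=10)

if __name__ == "__main__":
    for freq in (1e4, 1e5, 1e6, 1e7, 1e8):
        z_poly = impedance_magnitude(freq, *POLYMER)
        z_mlcc = impedance_magnitude(freq, *MLCC_GROUP)
        print(f"{freq:>12.0f} Hz  polymer {z_poly:.5f} ohm  MLCC group {z_mlcc:.5f} ohm")
```

With these assumed values, the single polymer part presents the lower impedance at low frequencies (it has more capacitance), while the MLCC group presents the lower impedance at high frequencies (its combined ESL is much smaller). That is only the textbook behavior of the model; it says nothing about whether a particular card's layout is adequate.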

Source: Igor's Lab


297 Comments on RTX 3080 Crash to Desktop Problems Likely Connected to AIB-Designed Capacitor Choice

Greed is one of the most important things we must get rid of in human society if we are to avoid extinction.

TO ALL 3080 OWNERS, DO NOT ACCEPT BIOS UPDATES THAT REDUCE YOUR PERFORMANCE, RETURN YOUR CARD NOW WHILE YOU CAN !!!!!

But when all they see is "unprecedented demand" all that takes a back seat and next time around you'll get an even shittier and more expensive product.

That said, it doesn't make for a good reputation and hurts the brand when some users lose a bit of performance from a firmware update, and it will hurt even further given that it'll take some time before revised cards are made and released. Then there is the fact that some companies may or may not allow RMA/exchanges for revised GPUs (although most will probably bend and allow GPU exchanges, if only for publicity purposes). At the very least, the AIBs might be able to re-market the early batch GPUs as cheaper SI cards for prebuilts sold through, say, Target, Walmart, Best Buy, Dell (non-gaming-oriented lines), foreign equivalents, and so forth, where they're great as stock GPUs, since they do work at stock and just have a lower max OC ceiling.

Personally, I suspect that any major revisions will not make it to mainstream until November/December, or even not until after the new year, since all the early batches (including the "more incoming stock" being promised) were produced according to the original, finalized setup that's currently in the wild, and it'll take a bit of time to sort out the issues and get that change passed in the manufacturing process. And we're still dealing with a human virus that's still hampering the world's recovery.

You were sold a product that had a specific performance characteristic which was diminished after a software update because the item was defective; that alone would be enough to win the case. You don't even need to worry about what's written on the box.

And may I remind you of this: www.theverge.com/2020/3/2/21161271/apple-settlement-500-million-throttling-batterygate-class-action-lawsuit

Early adopters beware... for those of you who did not figure this out a long time ago.

TIN and I have something in common, as we have both been working in the electrical test and measurement sector for at least a decade.

I am a maintenance electrician, working mostly with industrial electronics and power supplies.

We differ a lot, though, as he specializes in electronic circuits and understands them more deeply.

But he should be the one to inform this forum that GPU circuit analysis requires a 100,000 Euro oscilloscope of 10 GHz or better, plus special probes worth 4,000 Euro or more each.

In simple English ... we are both missing the very damn expensive tools that are required for an in-depth analysis of what is happening.

Therefore it is wise for all of us to wait for the findings of the well-paid engineers working at the big brands.

Recently there has been some discussion about the EVGA GeForce RTX 3080 series.

During our mass production QC testing we discovered a full 6 POSCAPs solution cannot pass the real world applications testing. It took almost a week of R&D effort to find the cause and reduce the POSCAPs to 4 and add 20 MLCC caps prior to shipping production boards, this is why the EVGA GeForce RTX 3080 FTW3 series was delayed at launch. There were no 6 POSCAP production EVGA GeForce RTX 3080 FTW3 boards shipped.

But, due to the time crunch, some of the reviewers were sent a pre-production version with 6 POSCAP’s, we are working with those reviewers directly to replace their boards with production versions.

EVGA GeForce RTX 3080 XC3 series with 5 POSCAPs + 10 MLCC solution is matched with the XC3 spec without issues.

Also note that we have updated the product pictures at EVGA.com to reflect the production components that shipped to gamers and enthusiasts since day 1 of product launch.

Once you receive the card you can compare for yourself, EVGA stands behind its products!

Thanks

EVGA

Decided to reactivate my old Twitter account... Surprised it was still there.

You need to have two types of capacitors in the circuit, because MLCCs as a general rule do not have enough capacitance, and other types of polymer capacitors don't have the same filtering/frequency capability.

Think of it this way: the larger poly type caps act as a reservoir, and the MLCCs handle the heavy lifting of dealing with the extreme changes in demand coming from the GPU core.

It's entirely possible to build a circuit with nothing but MLCCs, but that requires a lot of PCB space, it's expensive, and in the end it's probably overkill.

You can also do it with other types of caps, so long as you keep the capacitance and frequency requirements in mind.

youtubers that don't have electrical degrees should not talk about shit that requires said degree :roll:

what the article should read is this

AIBS screw up PCB design

"The thing is, as far as I'm concerned this is like nvidia screwing up the design guidelines, I wouldn't really throw this on the board partners because as far as I'm aware you can't even ship an nvidia gpu without running it through nvidia's green light program. So if nvidia doesn't approve your pcb design, you can't sell it."

Again, they are simply pushing the limits of advertising; in a real legal matter those figures would never hold up, just like they didn't in the Apple case. If the world operated how you think it does, then you could for example sometimes get water from the gas station in your car's tank because the oil company claimed "Up to 95% petrol content", and so that would be fine and no one could ever take them to court. That'd obviously be completely absurd; companies can't just claim what they want and sell crap with no repercussions.

This creates a new echelon of enthusiast - the ones who are privileged enough to be able to run the cards.