Friday, September 25th 2020

RTX 3080 Crash to Desktop Problems Likely Connected to AIB-Designed Capacitor Choice

Igor's Lab has posted an interesting investigative article in which he advances a possible reason for the recent crash to desktop problems affecting RTX 3080 owners. For one, Igor mentions that the launch timeline was much tighter than usual, leaving NVIDIA's AIB partners far less time than they would need to prepare and thoroughly test their designs. One apparent reason is that NVIDIA released the compatible driver stack to AIB partners much later than usual. As a result, their testing and QA of produced RTX 3080 graphics cards was mostly limited to power-on and voltage stability testing rather than actual gaming/graphics workload testing. This may have allowed some less-than-stellar chip samples onto some of the companies' OC products, which, with their higher operating frequencies and the consequent broadband frequency mixtures, hit the apparent 2 GHz frequency wall that produces the crash to desktop.

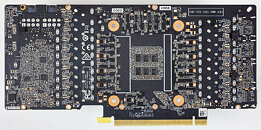

Another reason for this, according to Igor, is the actual "reference board" PG132 design, which serves as the "Base Design" around which partners architect their custom cards. The issue here is that NVIDIA's BOM apparently left the choice of capacitors for power cleanup and regulation open. The Base Design features six mandatory capacitors for filtering high frequencies on the voltage rails (NVVDD and MSVDD), and several types of capacitor can be installed here, with varying levels of capability. POSCAPs (Conductive Polymer Tantalum Solid Capacitors) are generally worse than SP-CAPs (Conductive Polymer Aluminium Electrolytic Capacitors), which are in turn superseded in quality by MLCCs (Multilayer Ceramic Chip Capacitors, which have to be deployed in groups). Below is the circuitry arrangement employed beneath the BGA array where NVIDIA's GA102 chip is seated, which corresponds to the central area on the back of the PCB.

In the images below, you can see how NVIDIA and its AIBs designed this regulator circuitry (NVIDIA Founders Edition, MSI Gaming X, ZOTAC Trinity, and ASUS TUF Gaming OC, in that order, from our reviews' high-resolution teardowns). NVIDIA's Founders Edition uses a hybrid capacitor deployment, with four SP-CAPs and two MLCC groups of 10 individual capacitors each in the center. MSI uses a single MLCC group in the central arrangement, with five SP-CAPs handling the rest of the cleanup duties. ZOTAC went the cheapest way (which may be one of the reasons their cards are also among the cheapest), with a six-POSCAP design (and POSCAPs, remember, are worse than MLCCs). ASUS, however, designed their TUF with six MLCC arrangements; no savings were made in this area of the power circuitry.

It's likely that the crash to desktop problems are related to both of these issues. This would also explain why some cards stop crashing when underclocked by 50-100 MHz: at lower frequencies (which will generally keep boost clocks below the 2 GHz mark), there is less broadband frequency mixing, which means POSCAP solutions can do their job, even if just barely.
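To illustrate why grouped MLCCs are generally favored for high-frequency filtering, here is a minimal Python sketch assuming the textbook idealized series R-L-C model of a capacitor, where |Z| = sqrt(ESR² + (2πf·ESL − 1/(2πf·C))²). All component values (`POLYMER_CAP`, `MLCC`) are hypothetical placeholders, not figures from Igor's article or from any datasheet, and the model ignores PCB mounting inductance and the capacitance loss MLCCs suffer under DC bias.

```python
import math

# Idealized series R-L-C model of a real capacitor: ESR and ESL in series with C.
def capacitor_impedance(freq_hz, capacitance_f, esr_ohm, esl_h):
    """Return |Z| in ohms for one capacitor at the given frequency."""
    omega = 2 * math.pi * freq_hz
    reactance = omega * esl_h - 1.0 / (omega * capacitance_f)
    return math.hypot(esr_ohm, reactance)

def parallel_group_impedance(freq_hz, count, capacitance_f, esr_ohm, esl_h):
    """Return |Z| for `count` identical capacitors wired in parallel.

    Identical branches in parallel divide the complex impedance by `count`,
    so the magnitude is divided by `count` as well.
    """
    return capacitor_impedance(freq_hz, capacitance_f, esr_ohm, esl_h) / count

# HYPOTHETICAL values for illustration only; not measured or datasheet figures.
# POLYMER_CAP stands in for a single POSCAP/SP-CAP position.
POLYMER_CAP = {"capacitance_f": 330e-6, "esr_ohm": 6e-3, "esl_h": 1.5e-9}
MLCC = {"capacitance_f": 47e-6, "esr_ohm": 3e-3, "esl_h": 0.3e-9}
MLCC_GROUP_SIZE = 10  # the article describes MLCC groups of 10 on the Founders Edition

if __name__ == "__main__":
    for freq in (1e6, 10e6, 100e6):
        polymer = capacitor_impedance(freq, **POLYMER_CAP)
        group = parallel_group_impedance(freq, MLCC_GROUP_SIZE, **MLCC)
        print(f"{freq / 1e6:>6.0f} MHz  polymer: {polymer * 1e3:8.3f} mOhm  "
              f"MLCC x{MLCC_GROUP_SIZE}: {group * 1e3:8.3f} mOhm")
```

In this toy model, the group's advantage at high frequencies comes from the lower assumed ESL per part and from splitting the current across ten parallel paths; how a real board behaves depends on its layout and the actual parts fitted.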

Source:

Igor's Lab


297 Comments on RTX 3080 Crash to Desktop Problems Likely Connected to AIB-Designed Capacitor Choice

But it'll take a while, and time is on our side really. The more and longer Nvidia struggles, the more they will need to watch the AMD space. A lack of supply can also be an easy reason to switch camps; at some point people do need a GPU, even if the one available is not their first choice (which has yet to be seen, mind).

If Nvidia needs a downclock or a limit on peak clocks, they're losing a few percent against the competition, which might just nudge things in Navi's favor. Interesting times! I hope our resident reviewer is happy to revisit those FEs... :D

You'd think folks would know better by now, but no. So it's well deserved really. Early adopting is great, as long as it's not me ;)

The power of emphasis in speech... haha

You don't see the inside of big companies a lot, do you...

I do... and yes, 'they hate it'... until they get in the car and drive home. It's a 9-to-5 job, this hating of the work people have or haven't done, and the bottom line is just people screwing up and management not giving it enough attention to fix it. Or management killing the workforce with too much work and/or too little time. The assumption that everyone can do his job properly is a bad one; the assumption should be 'double check everything or it will likely go wrong'. This is what you do when you release software or code, too. You make sure there is no room for error through well-defined processes, and even thén, something minor might just slip through the cracks.

ALL of this is self-inflicted, conscious, well-calculated risk management, even that last 1% that does get past and goes wrong. The bottom line is cost/benefit; it just doesn't always work out like people think it does. In the end, it is only and always the company producing something that is fully responsible. Nobody should ever have to find excuses for any company making mistakes. They're not mistakes. They were thoroughly looked at, some people in suits together said 'We'll run with this', and poof, the consumer can start shoveling poop. Meanwhile, a healthy profit margin was already secured as 'the bottom line'...

Case in point here, because the only reason this is happening is that cards get pushed beyond or too close to the edge. That is directly, and only, a cost/benefit scenario: performance per dollar. That holds even setting aside this capacitor detail, really, which kinda comes on top of it. The fact that the line is thís thin is telling in terms of overall product longevity, as well. That, along with the heat of the memory and several other decisions made with this 3080, really keeps me FAR away from it, so far.

It doesn't look good at all. It's a bit like cheap sports cars. Lots of HP for not a lot of cash... but your seat is shit, the tank is empty before you've reached the end of the street, and after a year you're replacing half the engine.

AMD did both... the immediate fix on the 480 was to cut power delivery with BIOS and driver updates, but later on, vendors switched to 8-pin designs.

EVGA did it with the 970... first they argued that they "designed it that way", but later they came out with a new design.

EVGA did it again with the malfunctioning 1060-1080s... the first offer was a recall or a thermal pad kit you could install yaself... later, all cards came with thermal pads.

I think that's an automatic... as above, AMD did the same thing with the 6-pin 480 fiasco... but they followed with a move to 8-pin cards later on. Same with the EVGA mishaps... I just don't see everyone sitting back and leaving this alone... at the next board meeting, there will be at least one person in the room saying "we need to take this step to distinguish ourselves above the others"... but the reality is there will be one of those guys in every boardroom. I'm still curious as to why no one was able to beat the FE FPS-wise... while most of the other AIBs allowed for a greater wattage limit. MSI left theirs 20 watts BELOW the FE... maybe MSI saw something no one else picked up?

Also, by default, 3080s use the lower-bin GA102 dies.

Samsung 8N is fine; the cards seem to run cooler even with increased power consumption compared to TSMC 12nm FFN.

I expect all these CTDs will be fixed with a newer driver; it's not like the cause of these CTDs is that mysterious anyway. As for SP-CAP vs MLCC, it sounds like Asus did an excellent job with their TUF line, kudos to them, and I guess they can't make 3080/3090s fast enough. I asked my local retailer and they said they won't have the 3090 TUF in stock for at least 2 months ~_~.

It will also make a horrible node for any mobile GPU. I'm curious what Nvidia will do to come up with reasonable SKUs for laptops, because these gobble way too much power as they are.

Almost like this has been true since silicon has been used in computers.

Almost like overclock instability related to silicon limits has nothing to do with capacitor choice.

Almost like this is a non-issue that has been blown way out of proportion.

As for those people who will say "but some people get over 2GHz": silicon lottery.

As for those people who will say "but MUH CLOCKS NVIDIA IS RIPPING ME OFF": NVIDIA never guaranteed you'd get over 2GHz boost, NVIDIA in fact never even guaranteed you'd get anything more than the rated base or boost clocks. Nobody does.

On the subject of thermal and noise,

3080 TUF has better thermal and noise than 2080 Ti Strix

3080 Gaming X Trio the same, better than 2080 Ti Trio

3080 Zotac Trinity, same thing

So far, all reviewed 3080 samples show very good thermal and noise characteristics; the 3090 samples are hotter and louder, but that is to be expected.

Ampere has around 20% higher perf/watt than Turing. Yes, it is a little on the low side, but it is a compromise people have to accept to get better perf/dollar; I expect any AMD GPU owner would understand this :D

Real and responsible brands will offer a specific package of solutions or choices to their customers.

The low-end ones might simply bury their heads in the sand; an underclock will be their only offering, or a refund if you are a lucky one.

Nvidia rushed their own FE development, yet gave time to the AIBs.

Yeah right, it's a rushed launch. I'm sure blame will be thrown about, but I'm not buying, so my concern and care levels are minimal. I have an opinion, yes, but I have said it. Leave me out of the debate until you have something other than your opinion to discuss, because I don't give a shit what you think. I stated facts.

Let's return to today and the latest edge of GPU architecture.

Due to its high pricing, the RTX 3080 is now considered an investment.

NVIDIA did use additional tricks to protect its work (product) so as to minimize the failure rate; it is extremely costly to handle the return to base of a 1000 Euro VGA card for an exchange.

I would not be surprised if people later discover that even BIOS flashing on these cards is locked by a password.

I have written too much in this topic; now I will simply take a seat at the back of the bus and wait to inspect the quality of product support that all major brands will deliver to their customers.

There are no schools good enough to teach us foreigners how to detect sentiment in written text.

My advice to Americans: use neutral, clear text to describe the actual point you are trying to make.

TPU is read internationally; this is not a neighborhood of Dallas, Texas.

You are not the English language police; you can't tell me how to do anything, sir.

And I'm English not American.

Yeah, Turing was known for its terrible perf/dollar; lucky for Nvidia that Navi was not that much better anyway...

It's not a non-issue at all. Previous generations worked a lot more smoothly, with GPU Boost peaking high at the beginning of a load and sustaining it too. The ripoff part... myeah... it's not substantial in any way. But it does tell us a great deal about the quality of this generation and the design choices they've been making for it.

The whole rock-solid GPU Boost perception we used to have... has been smashed to pieces with this. For me at least. It's a big stain on Nvidia's rep, if you ask me.