Sunday, November 22nd 2020

Cyberpunk 2077 System Requirements Lists Updated, Raytracing Unsupported on RX 6800 Series at Launch

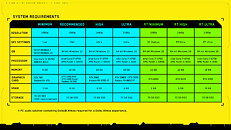

CD Projekt RED released updated PC system requirements lists for "Cyberpunk 2077," which will hopefully release before the year 2077. There are a total of seven user experience grades: four for conventional raster 3D graphics, and three with raytracing enabled. The bare minimum calls for at least a GeForce GTX 780 or Radeon RX 480, 8 GB of RAM, a Core i3 "Sandy Bridge" or AMD FX "Bulldozer" processor, and 64-bit Windows 7. The 1080p High grade needs at least a Core i7 "Haswell" or Ryzen 3 "Raven Ridge" processor, 12 GB of RAM, and GTX 1060 6 GB, GTX 1660 Super, or RX 590 graphics. The 1440p Ultra grade needs the same CPUs as 1080p High, but with steeper GPU requirements of at least an RTX 2060 or RX 5700 XT.

The highest sans-RT grade, 4K UHD Ultra, needs at least a Core i7-4790 "Haswell" or Ryzen 5 "Zen 2" processor, and RTX 2080 Super, RTX 3070, or Radeon RX 6800 graphics. Things get interesting with the three lists for the raytraced experience. 1080p Medium raytraced needs at least an RTX 2060; 1440p High raytraced needs an RTX 3070; and 4K UHD Ultra raytraced needs at least a Core i7 "Skylake" or Ryzen 5 "Zen 2" chip, and RTX 3080 graphics. All three raytraced presets need 16 GB of RAM. Storage requirements across the board are 70 GB, and CDPR recommends the use of an SSD. What's interesting here is that neither the RX 6800 nor the RX 6800 XT makes it to the raytraced lists (despite the RX 6800 finding mention in the non-raytraced lists). PC Gamer reports that Cyberpunk 2077 will not enable raytracing on the Radeon RX 6800 series at launch. CDPR, however, confirmed that it is working with AMD to optimize the game for RDNA2, and that it should enable raytracing "soon."

Sources:

Cyberpunk 2077 (Twitter), PC Gamer

103 Comments on Cyberpunk 2077 System Requirements Lists Updated, Raytracing Unsupported on RX 6800 Series at Launch

I'm fine that I will be missing out on ray tracing, meh.

www.techpowerup.com/review/bfg-ageia-physx-card/

On the other hand, it is good that they are taking their time to optimize it for the 6800 series rather than enabling it without optimization, which could cripple the cards.

Like with The Witcher 3, I think CD Projekt RED is getting support from Nvidia to integrate their technologies, so maybe they did not have the time before to work on optimising for the 6800.

www.nvidia.com/en-us/geforce/news/cyberpunk-2077-ray-tracing-dlss-geforce-now-screenshots-trailer/

As we know, this game has been delayed a number of times because it was not finished, and now they need to concentrate on some features and leave others for after launch. Maybe they don't want to delay the game again. :laugh:

I enjoy playing their games.

I played all of their Witcher games, though usually not from the launch date but a few months later.

Maybe this time I will buy Cyberpunk near Christmas to play it over the holidays. :rolleyes:

So in future AMD-sponsored games, can we expect a comparison of the 6700XT to the 3080 because of AMD's SuperResolution?

Also, between Cyberpunk 2077 and Godfall, there goes the whole "DXR is a standard" thing.

Looks like per-brand RT development and optimization will be the new norm.

Proprietary upsampling and RT implementations.

I don't like where this shit is going. Do you?

I do think that Nvidia is majorly taking advantage of it, trying to corner the whole market... and that's swaying devs to stay in that wheelhouse when it comes to their own work implementing RT. Of course they are going to favor RTX... it's the simplest, most complete option. And as a dev, you can bet on it getting better without you directly working on it, because Nvidia is all in on pushing it forward. No brainer to work with them, put another feather in your cap come release time.

No doubt, the exclusivity sucks, but the cat will be out of the bag eventually. Right now it's enough that we have some hardware that can do it at all, let alone hardware that can actually provide useful and significant results. Have people forgotten how unthinkable RT was as recently as back when Vega and GTX10xx cards were the mainstays? People didn't even believe it. They would've said it'd have to be many more years away before it even started to be workable, if it could ever be done without major technological shifts.

I'm just sayin... it's noteworthy. This isn't another PhysX. It has picked up, and the impact isn't minor.

Still, it's barely off the starting line. The cost is still very high, both in hardware cost and performance cost. I don't think anyone should feel left out by that, though. The fact is, the techniques that RT is looking to replace are way beyond serviceable and can often produce gorgeous results. They're known parameters for devs, and the good ones make incredible visual art with them. It just takes skilled coders on the shader pipeline (or a versatile ready-made one) and level designers who really understand the pipeline and have a good sense of composition and aesthetic. We have seen so many GLORIOUS looking games without RT, because of efforts like that.

One day, everybody will have RT. Until then, we'll have to 'tolerate' regular old amazeballs visual artistry. It's not that big of a deal. I have a 2060 and have really been influenced by what I've seen with RT - it's really something worth doing if you ask me. But I don't expect that card to hold up RT for this game, and that's fine.

You don't always have to be on the bleeding edge. There are still people happily gaming on RX580's out there. And the thing is... in a few years, the RT games we're seeing now will still be available. And if they're good, they'll still be relevant enough to play and discuss. Meaning that later on when the tech inevitably becomes cheaper and more available... and devs are better able to optimize it (who knows, for big titles you might see patches much later on, as new options become available,) you can play those games in full RT glory. I'm still pulling for AMD getting up to snuff on it. They clearly have an interest. And that goes to show how real this is. They recognize that they'll need cards that can do RT to compete in the future. Nvidia has shown it can be done. It's on the table, now.

That's really the main factor here. Nvidia can only keep their proprietary hegemony going because they're the only ones to really get it done. If AMD can get it going too, there's no telling what happens. And if they don't, well... that kind of is what it is. It's up to them to make that happen. I really hope they will succeed. It's probably the only way we ever get anything resembling a universal standard. Hardware still kind of stands in the way of that too, though.

For now, it's not that big of a deal, though. If CDPR did their job, the game will look great without RT and all you will lose out on is another chance to see the hawt graphical newness in action. Honestly, it's not that great on the bleeding edge anyway. It's costly and unreliable. Call me a half-full type of guy. Panties so not wadded by this that I don't even own a pair.

I mean... fkn Crysis man. How many people never got to see that game in its full glory until years after it came out and the hardware to run it became widely accessible? I don't think that's necessarily prudent from a dev standpoint. If you want those critical release sales, people need to be able to run it. However, this isn't even that bad compared to a game like that. The non-RT requirements are pretty reasonable for a full scale, current-gen AAA title. RT is not a hard requirement for making anything look good, unless your lighting/post-processing work is terrible.

There's no sense getting upset about it just yet. To me, this is more about hardware than propriety or hegemony. It would be a different story if both companies had even footing on the hardware and stuff like this was happening. I'd be clamoring for a standard. But honestly, I don't see how a standard is even viable right now.

...who am I kidding though, this is the gaming community we're talking about :laugh:

Who cares, this is Hairworks v2, totally forgettable add-on effects that kill perf. This is the way of RT, get used to it, it'll last for years.

That being said all games should run on any hardware that supports DXR, AMD or Nvidia. If it works well on Nvidia and it doesn't on AMD, well ... you can use your imagination on why that might be the case.

They do seem to be perfectly capable of delaying the game for every other reason, though.

The fact is Nvidia had free rein selling RTX as they did; what company wouldn't want to use such a USP?

Never mind, just tired and reading it wrong. GN.