Tuesday, January 19th 2021

NVIDIA Quietly Relaxes Certification Requirements for NVIDIA G-SYNC Ultimate Badge

UPDATED January 19th 2021: NVIDIA in a statement to Overclock3D had this to say on the issue:

Sources:

PC Monitor, via Videocardz

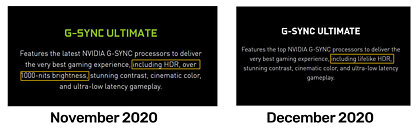

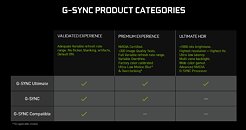

Late last year we updated G-SYNC ULTIMATE to include new display technologies such as OLED and edge-lit LCDs.NVIDIA has silently updated their NVIDIA G-SYNC Ultimate requirements compared to their initial assertion. Born as a spin-off from NVIDIA's G-SYNC program, whose requirements have also been laxed compared to their initial requirements for a custom and expensive G-SYNC module that had to be incorporated in monitor designs, the G-SYNC Ultimate badge is supposed to denote the best of the best in the realm of PC monitors: PC monitors that feature NVIDIA's proprietary G-SYNC module and HDR 1000, VESA-certified panels. This is opposed to NVIDIA's current G-SYNC Compatible (which enables monitors sans the G-SYNC module but with support for VESA's VRR standard to feature variable refresh rates) and G-SYNC (for monitors that only feature G-SYNC modules but may be lax in their HDR support) programs.The new, silently-edited requirements have now dropped the HDR 1000 certification requirement; instead, NVIDIA is now only requiring "lifelike HDR" capabilities from monitors that receive the G-SYNC Ultimate Badge - whatever that means. The fact of the matter is that at this year's CES, MSI's MEG MEG381CQR and LG's 34GP950G were announced with an NVIDIA G-Sync Ultimate badge - despite "only" featuring HDR 600 certifications from VESA. This certainly complicates matters for users, who only had to check for the Ultimate badge in order to know they're getting the best of the best when it comes to gaming monitors (as per NVIDIA guidelines). Now, those users are back at perusing through spec lists to find whether that particular monitor has the characteristics they want (or maybe require). It remains to be seen if other, previously-released monitors that shipped without the G-SYNC Ultimate certification will now be backwards-certified, and if I were a monitor manufacturer, I would sure demand that for my products.

All G-SYNC Ultimate displays are powered by advanced NVIDIA G-SYNC processors to deliver a fantastic gaming experience including lifelike HDR, stunning contract, cinematic colour and ultra-low latency gameplay. While the original G-SYNC Ultimate displays were 1000 nits with FALD, the newest displays, like OLED, deliver infinite contrast with only 600-700 nits, and advanced multi-zone edge-lit displays offer remarkable contrast with 600-700 nits. G-SYNC Ultimate was never defined by nits alone nor did it require a VESA DisplayHDR1000 certification. Regular G-SYNC displays are also powered by NVIDIA G-SYNC processors as well.

The ACER X34 S monitor was erroneously listed as G-SYNC ULTIMATE on the NVIDIA web site. It should be listed as "G-SYNC" and the web page is being corrected.

36 Comments on NVIDIA Quietly Relaxes Certification Requirements for NVIDIA G-SYNC Ultimate Badge

Maybe ask VESA to devise a special badge for you, so you can take a spot next to that ridiculous set of 'HDR standards' for monitor settings that can barely be called pleasant to look at and can't ever be called HDR in any usable form.

We've seriously crossed into idiot land with all these certifications and add-on technologies. The lengths companies go to to hide the inferior technology at their core... sheet. And if you bought into that Ultimate badge, you even paid extra for something inferior... oh yeah, 'subject to change', of course. Gotta love that premium feel.

(No, I'm not sour today; I'm dead serious. And laughing my ass off at all the nonsense 2020 brought us, which 2021 seems eager to continue bringing.)

The whole Ultimate badge is technology pushed beyond reason at astronomical price increases. Now Nvidia has figured that out too, because the stuff didn't sell or make waves, and it is adapting accordingly. Meanwhile, there are still hundreds of monitors out there with a VESA HDR spec that are going to get a new Ultimate badge now.

Selling 'no' is bad for sales, I know, but didn't mommy teach us not to lie?

Nvidia is still marketing G-Sync as something special, and one by one, all those supposed selling points fall apart as reality sets in.

I wonder where DX12 Ultimate is going to end up; I'm still trying to come to terms with all the goodness that API has brought to gaming. /s

Never have two such clusterfucks been released into the tech space.

I guess it's a matter of trying to drive technology forward as well, but yeah, the pricing is insane in this case, as it would seem the technology isn't quite ready to transition to the kind of specs Nvidia wanted. This is also most likely why they're quietly scaling it back now.

I guess we need to move from Ultimate to Preeminent for the next generation of kit... Did you see they added a new 500 category, as well as True Black 400 and 500?

displayhdr.org/

G-Sync is the perfect example. It has already lost the standards war, yet we still get an upsell version of it. Like... what the actual f... take your loss already, you failed. It's like presenting us now with a new alternative to Blu-ray that does almost nothing better but still 'exists' so you can throw money at it. WOW.

Stuff like this makes me wonder if my VESA mount might not just fall off the wall anyway at some point. What are those stickers worth anymore?

It's really just so simple... you either have OLED or you have nothing. I guess that's not the best marketing story, but yeah.

I wish the VESA certification would be used to enforce a strict minimum for the general quality of screens: 100% sRGB, factory calibration, a 1000:1 contrast ratio and 400 nits. I was looking for a decent laptop two months ago and was baffled to see that even with a budget of 800-900€, it's nearly impossible to get a decent screen, yet put the same amount of money into a phone or tablet and you'll end up with a great screen.

HD Ready.

Watt RMS

Dynamic Contrast

The list is long, and it's not getting simpler but more complicated as technology gets pushed further. Look at VESA's HDR spec. It HAS a range of requirements much like you describe... but it lacks some key points that truly make for a solid panel.

The spec intentionally leaves out certain key parts to leave wiggle room for display vendors to tweak their panels 'within spec' with the same old shit they always used, just calibrated differently. G2G rise and fall time, for example... VESA only measures rise time. G2G has always been cheated on; now it's part of a badge, so we no longer look at a fake 'x' ms G2G number (which was actually more accurate than just measuring rise time)... so the net effect of VESA HDR is that we know even less. Basically, all we've won is 'your ghosting/overshoot will look a specific way'.

The new additions also underline that the previous versions actually had poor black levels, which is obvious with weak local dimming on a backlit LCD. 'True black' really spells 'not true black elsewhere'.

Kill it with fire. :)

And yes, anything better than DisplayHDR 600 is as rare as hen's teeth (and DisplayHDR 600 monitors are only slightly easier to find). But the failure is not Nvidia's; the failure lies with manufacturers. Monitors are presented at various shows, yet only become available a year later, or even later than that in some cases. The same goes for mini- and microLED. Those were supposed to improve the HDR experience, yet here we are, years after at least miniLED was supposed to be available, and we can buy like 3 or 4 monitors for like 3 grand. It looks like LG will be able to give us OLED monitors (I know, with pros and cons) before anyone else can tame mini- or microLED.

OLED TVs dish out 600-700 nits (and not full screen, of course), and even that is "it hurts me eyes" level of bright.

And that is viewed from several meters away.

That figure naturally needs to go down when one is right next to the screen.

400/500 nit "true black" (i.e. OLED) makes perfect sense on a monitor.

1000+ nits on a monitor is from "are you crazy, dude" land. People who think that makes sense should start by buying an actual HDR device (and not "it supports HDR" crap like TCL and other bazingas).

Left behind... All of them... PhysX has been ground down to one of many existing CPU physics engines, where it was unable to compete with Havok. Why? Because Havok could be unleashed on all CPU cores, while PhysX had to be restricted in order to make up the difference between GPU and CPU PhysX... 2016 saw 6 titles with PhysX; each year after that saw 1, except 2018, which saw none...

Sure, an OLED will not be as good as traditional LED when viewing a scene that goes from a cave to full-blown sunlight. But try counting how often you see that in a day.

I also have an OLED TV (LG, which is supposed to be on the dimmer side of OLEDs), and I can vouch that the brightness is high enough that I don't go near 100%. Except when viewing HDR content, because you can't control brightness in Dolby Vision mode.

But I agree about 500 nit OLED making perfect sense; I'd take that over 1000 nit FALD any day. The natural performance of the panel is always more important, and it applies universally to everything with no side effects.

If any of the monitor tests back in the day proved anything, aside from game developers having to ~~waste~~ spend more time tuning their games for HDR for it to even be a thing, it was that computer monitors either need DisplayHDR 1000 levels of brightness constantly to even pretend to be HDR, or else brightness wouldn't matter (which would be nice, especially for me, since I can barely handle an IPS at full brightness), because the brightness is only trying to make up for the lack of true contrast via FALD. DisplayHDR 600, or maybe even lower, would be fine as long as FALD on computer monitors was a thing. On top of that, even monitors that purportedly met "G-SYNC Ultimate" requirements before weren't able to do high refresh rate (120Hz+), 1440p resolution or higher, and "HDR" together anyway (misleading customers from the beginning), because HDMI 2.1 was only introduced (on "consumer" graphics cards) last year with the mythical Nvidia 30xx series. I don't care about this whole mumbo jumbo, since it's something I can worry about after I get an HDMI 2.1/DP 2.0 graphics card and Cyberpunk 2077 is actually playable (or at least supports mods, so we can have something like USSEEP/USLEEP) in the way I envisioned, lol.

tl;dr G-SYNC Ultimate is a stupid "certification" to begin with and will remain that way for several more years, but Jensen needs to deceive consumers to buy designer leather jackets, one in every color to match his spatulas.

I reckon it happened because the royalty-free FreeSync is killing the royalty-bearing G-SYNC, regardless of technical merit, since it reduces the price of monitors.

But that monitor is an abomination even before factoring in the lame local dimming implementation.

And yes, it's a major VESA failure that they did not mandate a proper local dimming implementation. They did this because, to this day, very few monitors do a semi-proper job of implementing local dimming, but when you're making standards to help customers choose, bending the standards to get on the good side of the manufacturers is not the way to go. And don't get me started on DisplayHDR 400.

www.usa.philips.com/c-p/558M1RY_27/momentum-4k-hdr-display-with-ambiglow

Now, is there any model like that for G-SYNC Ultimate?