Monday, June 14th 2021

Western Digital May Introduce Penta Layer Cell (PLC) NAND by 2025

Western Digital has apparently delayed the introduction of Penta Layer Cell (PLC) NAND flash to 2025. The company had already disclosed development of the technology back in 2019, around the same time that Toshiba announced it (Toshiba's memory business is now Kioxia, Western Digital's partner in developing the technology). The information was disclosed at the Bank of America Merrill Lynch 2021 Global Technology Conference, where Western Digital's technology and strategy chief Siva Sivaram said that "I expect that transition [from QLC to PLC] will be slower. So maybe in the second half of this decade we are going to see some segments starting to get 5 bits per cell."

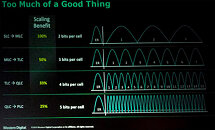

PLC is another density-increase step for NAND flash, whereby each NAND cell can have five bits written into it, thus increasing the amount of information stored in the same NAND footprint. To hold these 5 bits, each cell must store one of 32 voltage states, which in turn tell the flash controller which data bits are stored in it. Siva Sivaram said that he expects the technology to take more time to mature than most, due to the need for controllers that can take advantage of the increased density while making up for the shortcomings of the higher bit-per-cell approach (lower endurance and lower performance). PLC won't bring us HDD-tier storage density by itself (it only enables storage of 25% more data per cell than QLC); however, when paired with increasing layers of NAND flash, that extra 25% quickly adds up.
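The arithmetic behind those figures can be sketched in a few lines of Python. This is only an illustration: the function names are made up for this example, and the only inputs are the bits-per-cell counts mentioned in the article.

```python
# Illustrative sketch: states per cell and relative capacity gain.
# Function names are hypothetical, chosen for this example only.

CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4, "PLC": 5}


def voltage_states(bits_per_cell: int) -> int:
    """Number of distinct voltage states needed to encode n bits."""
    return 2 ** bits_per_cell


def capacity_gain(old_bits: int, new_bits: int) -> float:
    """Fractional increase in data per cell when moving between cell types."""
    return new_bits / old_bits - 1.0


if __name__ == "__main__":
    for name, bits in CELL_TYPES.items():
        print(f"{name}: {bits} bit(s) per cell, {voltage_states(bits)} states")
    print(f"QLC to PLC gain per cell: {capacity_gain(4, 5):.0%}")
```

Note how the number of states doubles with every added bit, while the capacity gain per step keeps shrinking (100%, 50%, 33%, 25%).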

Source:

Tom's Hardware

30 Comments on Western Digital May Introduce Penta Layer Cell (PLC) NAND by 2025

a) SLC - Many PBW

b) MLC - Small PBW or many TBW

c) TLC - Many TBW or average TBW

d) QLC - Average TBW or small TBW

e) PLC - Small TBW (not GBW, only because from 2025 onward no SSDs below 1 TB will be produced)

f) NextLC - below 1 write per cell :D

Mark my words when I say that the future is not QLC, PLC, etc. The future is adding more layers to TLC. Samsung has said they believe it scales to ~1000L and are set to introduce 176L this year.

Adding more layers means cheaper, faster and bigger TLC drives. Perhaps this will drag QLC along to the point that it will actually be acceptable at some point, but I won't hold my breath.

I mean, if QLC is something to avoid, what can we expect from PLC?

SLC (1 bit) = 100% vs 0

MLC (2 bit) = 100% vs 67% vs 33% vs 0

TLC (3 bit) = 100% vs 86% vs 71% vs 57% vs 43% vs 29% vs 14% vs 0

QLC (4 bit) = 100% vs 93% vs 87% vs 80% vs 73% vs 67% vs 60% vs 53% vs 47% vs 40% vs 33% vs 27% vs 20% vs 13% vs 7% vs 0%

PLC (5 bit) = 100% vs 97% vs 94% vs 90% vs 87% vs 84% vs 81% vs 77% vs 74% vs 71% vs 68% vs 65% vs 61% vs 58% vs 55% vs 52% vs 48% vs 45% vs 42% vs 39% vs 35% vs 32% vs 29% vs 26% vs 23% vs 19% vs 16% vs 13% vs 10% vs 6% vs 3% vs 0%

Drive durability has fallen through the floor, by 11x since MLC: if the cell voltage in a PLC-based drive drifts by as little as 3% (vs 33% for MLC), the data changes / gets corrupted. We only got away with TLC by using SLC cache + more advanced ECC to hide its issues vs MLC, but those were "one shot" improvements. There's no "magic beans" equivalent for QLC / PLC as all such workarounds are maxed out now. Despite the usual tech site click-bait of "HDDs are dead", I wouldn't touch these with a barge pole for any offline / cold storage / external drive backup likely to remain unpowered for any length of time. I still find it shocking that no tech site is bothering to test unpowered data retention of modern drives on the grounds of "we did one article on that back in 2013 and found no problems, so that's that", whilst ignoring that the "1 year data retention" standard was based on several-year-old MLC-based Intel drives with relatively large 20nm+ cell sizes, and an awful lot has changed since then...
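The percentages in the comment above follow from a simple idealized model, sketched here in Python. It assumes the 2^n voltage levels are spread evenly across the full range, which is a simplification used only for illustration (real threshold-voltage distributions are not evenly spaced), and the function names are invented for this example.

```python
# Idealized model: 2**n evenly spaced levels between 0% and 100%.
# Evenly spaced levels are an assumption made for illustration only.


def level_spacing_percent(bits_per_cell: int) -> float:
    """Gap between adjacent levels, as a percentage of the full range."""
    levels = 2 ** bits_per_cell
    return 100.0 / (levels - 1)


def level_positions(bits_per_cell: int) -> list[int]:
    """Level positions in percent, highest first, rounded to whole numbers."""
    levels = 2 ** bits_per_cell
    return [round(100 * i / (levels - 1)) for i in range(levels - 1, -1, -1)]


if __name__ == "__main__":
    for name, bits in [("SLC", 1), ("MLC", 2), ("TLC", 3), ("QLC", 4), ("PLC", 5)]:
        print(f"{name}: spacing {level_spacing_percent(bits):.1f}%")
    ratio = level_spacing_percent(2) / level_spacing_percent(5)
    print(f"MLC spacing / PLC spacing: {ratio:.1f}x")
```

Under this model the MLC-to-PLC spacing ratio is 31/3, or a little over 10x; the "11x" quoted above comes from dividing the rounded figures (33% by 3%).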

QLC actually has a lot going for it moving forward. For one, it gives the flexibility of pSLC and pMLC modes but also pTLC. Kioxia's QLC already supports simultaneous pSLC and pTLC modes, and I've tracked the latter as being just as fast as their native TLC. QLC is also the basis of X-NAND which I covered extensively, because of the math. If your physical page size is 16 kB, logical page size 4kB, and of course 16 kB data/page buffers, then splitting it into 4 is quite convenient, or 4x4, again as seen with X-NAND. To put it simply, QLC is binary in levels (e.g. 2^2) which makes such things simpler. See source below.

QLC also benefits more from floating gate, as with Intel's 144L QLC. There's a reason nobody else really makes good QLC, outside possibly Samsung, although they have struggled as well. Split-gate technology is especially robust with FG designs. And here again you can use FG for TLC, but it makes much more sense to risk it with SG using QLC due to its 4-bit nature. And then you can run that in pTLC mode of course. I don't need to remind people that the Plotripper Pro is probably QLC in pSLC mode... because it's evenly divisible and cheaper.

Arguably. Intel's 64L QLC achieved 1000 P/E while at 96L it could get up to 1500 P/E. Micron's TLC in the same architecture was 1500 P/E (64L) but up to 3000 P/E (B27B), although more commonly 2000 P/E (B27A). Of course, Micron moved to replacement gate, which has much higher endurance at the baseline, but that's not what we're comparing here. See below for a source.

QLC has been surprisingly robust with floating gate and this is liable to remain the case, given Intel's 144L QLC and Kioxia's split-gate research (for QLC and PLC). FG with SG may actually be "magic beans" due to the shape of the cells - you get natural isolation and electromagnetic shielding. See the SG source for more on this.

In general, 3D NAND is superior to 2D/planar in retention, simply because the process node is so much larger (that is why they moved to 3D). 3D flash has more possible sources of disturb (i.e. there's X, Y, and XY disturb) but they are much less impactful (more isolation between cells). There's also a ton of ways to mitigate it (more "magic beans" if you prefer) - I've covered these extensively on my discord if you want the technical aspects. Now of course you're talking about power-off retention which fits JEDEC testing (at a given temperature) and I can't argue with you on cold storage as that remains a possible issue here. And you're right, old SLC/MLC drives could retain data for a decade but things have changed. Although I question the relevance even in the enterprise space due to how QLC tends to be used (it's not for long-term cold storage).

Some sources:

3D Semicircular Flash Memory Cell: Novel Split-Gate Technology to Boost Bit Density

3D NAND: Current, Future, and Beyond

X-NAND: New Flash Architecture Combines QLC Density with SLC Speed

A Closer Look at Micron 3D NAND Features

PLC (and beyond) will mostly go to the big buyers. It'll be available for us to buy but we're not the intended audience.

Drive durability has gone up. What's come down is the amount of over-provisioning and endurance for consumer drives. And it's not because they're worse, it's because manufacturers have a better understanding of how much endurance is actually needed.

If you need a high endurance device they still exist but they're the 'enterprise' versions. The TLC enterprise devices don't use SLC cache, they're just fast on their own. Look at the Samsung PM1733. It's a 3.84TB read intensive drive with 7 petabytes of endurance.

And regarding your last statement of 1 year data retention being based off relatively large 20nm cell sizes, current 3D cell sizes are ~40nm. Since NAND is scaling through layer increases they were able to go waaay up in cell size and still increase density. It'll be a while before we're going back down in cell size as there is quite a bit of room to scale layers and it's more cost effective to do so.

EDIT: Read what Maxx said above. He smart.

I can see it now, 2025 announcement: "vendor adds 8th layer to NAND, an extra whopping 12.5% capacity, but don't worry, it still allows 20 erase cycles and can write faster than 5 MB/s in SLC cache".

QLC has failed.

You've been the goodest boye so far, please stay alive at least for the next 2 or 3 decades

For those unfamiliar, ZNS effectively puts the onus on the application to manage the Flash Translation Layer (FTL). The host needs to do all writes sequentially with no modification of data. This means that there is no write amplification, so 2000 P/E cycles translate to nearly 2000 drive fills, whereas current drives, when full and doing lots of garbage collection, can suffer write amplification as high as 10x - 15x. As far as spec sheets are concerned, that limits 2,000 P/E cycle NAND to ~150 drive fills.
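A rough sketch of that relationship, assuming the simple model where usable drive fills equal rated P/E cycles divided by the write amplification factor (the function name is made up for this example, and the 10x and 15x factors are the ones quoted above):

```python
# Simple endurance model: host-visible drive fills = P/E cycles / WAF.
# This ignores over-provisioning and other real-world effects.


def effective_drive_fills(pe_cycles: int, write_amplification: float) -> float:
    """Drive fills the host can perform before the NAND's rated P/E budget is used up."""
    if write_amplification < 1.0:
        raise ValueError("write amplification cannot be below 1.0 in this model")
    return pe_cycles / write_amplification


if __name__ == "__main__":
    for waf in (1.0, 10.0, 15.0):
        fills = effective_drive_fills(2000, waf)
        print(f"WAF {waf:>4.1f}: about {fills:.0f} drive fills")
```

With 2000 P/E cycles this gives 200 fills at 10x and roughly 133 at 15x, which brackets the ~150 figure in the comment.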

ZNS will enable QLC/PLC/HLC and beyond for large users (FB, Google, Amazon, etc) that can tune their software to optimally use NAND devices (sequential writes, random reads).

Is your boot drive going to get cheaper because of this? No. Will online storage get cheaper? Yup.

1000-layers-high stacks (1000 layers on one die, not a stack of dies) may become viable one day or not, it's a matter of economics, like most things in chip manufacturing.

Semi Engineering has a good and long article on that.

My point of layers vs cell size is that even with the hurdles of scaling layers it's still a better / more cost-effective path forward. And it's not just scaling up layers that you can etch through. Stacking is used by all the manufacturers now and offers a way to again scale layers without the individual etch needing to scale at the same rate.

Also, I'm not sure what enterprise segment you are looking at, but the ones I have had contact with always go with high endurance. Even if it means paying insane $ per GB like Optane.

As for Intel SSDs: contacts that I have in the enterprise space say they will never again buy an Intel SSD due to the absurdly high failure rate. At least when it comes to QLC. Optane is very reliable tho.

Still faster than increasing bits per cell. In 13 years (2008, Intel X-25) we have gone from SLC > MLC > TLC > QLC. That is 3 jumps. Layer count has increased by 6 times from the first 3D NAND in 2013, which was 24 layers, to 176 layers this year. That is 8 years and a 6 times increase compared to 13 years and a 3 times increase. Nearly double the increase in half the time. It's pretty clear that manufacturers are at a limit already with QLC and no one knows if PLC will even be viable. Whereas stacking layers still leaves plenty of room for progress.
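One way to put the two scaling paths on the same footing is to compare compound annual growth rates. The sketch below uses only the figures from the comment above (1 to 4 bits per cell over 13 years, 24 to 176 layers over 8 years); the function name is hypothetical, and treating both as smooth exponential growth is an assumption made for illustration.

```python
# Compound annual growth rate, assuming smooth exponential growth
# between the two endpoints quoted in the comment.


def annual_growth_rate(start: float, end: float, years: float) -> float:
    """Constant yearly growth rate that takes `start` to `end` in `years`."""
    return (end / start) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    bits = annual_growth_rate(1, 4, 13)      # SLC (2008) to QLC (2021)
    layers = annual_growth_rate(24, 176, 8)  # 24L (2013) to 176L (2021)
    print(f"bits per cell: {bits:.1%} per year")
    print(f"layer count:   {layers:.1%} per year")
```

Measured this way, density from layer count has grown well over twice as fast per year as density from bits per cell, which supports the commenter's general point even if the exact multiples are debatable.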

Intel surpassed 10 million QLC SSDs. Last year. In February. You underestimate the volume massively.

Jeongdong Choe covers the future of NAND for TechInsights. In his presentation (p. 10) he shows we'll have PLC around 2026 with viability all the way up to OLC (octal-level cell) through the use of Through Silicon Via (TSV), package on package (PoPoP), high bandwidth NAND (HBN, listed as HBM on his chart), etc. I have an article here that covers some of this technology.

I've already explained how PLC will do well with ample sources, but to reiterate, Kioxia's article has a figure that shows you just how good PLC would be with a semicircular floating gate architecture (b) as compared to the normal circular charge trap for QLC (a, right side):

- Intel is 8% of the SSD market

- There have been about 800m SSDs shipped in 2018 - 2020

- 8% of 800 million is 64 million

- 10 million of 64 million is ~15%

So Intel does about 15% of their volume as QLC SSDs. QLC drives are generally higher capacity than non-QLC, so it's possible that more than 15% of their bits sold are QLC. But doing 15% of all SSDs in the timeframe where the technology was launched and tuned is pretty good. Expect that 15% to be back-loaded, such that in 2021, 15 - 20% of SSDs sold could be QLC.
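The back-of-the-envelope estimate above can be reproduced as follows. All inputs are the commenter's figures (8% market share, about 800 million SSDs shipped in 2018 - 2020, 10 million Intel QLC SSDs), not verified data, and the function name is made up for this example.

```python
# Reproduces the commenter's estimate; all inputs are their figures.


def qlc_share_of_vendor(total_ssds: int, vendor_share: float, vendor_qlc_ssds: int) -> float:
    """Fraction of a vendor's SSD units that are QLC, given its market share."""
    vendor_units = total_ssds * vendor_share
    return vendor_qlc_ssds / vendor_units


if __name__ == "__main__":
    share = qlc_share_of_vendor(
        total_ssds=800_000_000,      # SSDs shipped 2018 - 2020 (commenter's figure)
        vendor_share=0.08,           # Intel's share of the SSD market (commenter's figure)
        vendor_qlc_ssds=10_000_000,  # Intel QLC SSDs shipped (commenter's figure)
    )
    print(f"QLC share of Intel SSD units: {share:.1%}")
```

The unrounded result is a little under 16%, consistent with the "about 15%" used in the comment.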

The other vendors are behind in their QLC offering and are not as far in that ramp up but it's definitely happening.

I guess my point is that QLC has not achieved significant market share, and the progress to more bits per cell is slowing down more and more, with WD/Kioxia for example not expecting PLC before 2025/2026. Intel is more optimistic, but I think we have all seen what Intel's optimism in roadmaps looks like (10nm in 2015, reality in 2020, desktop/server in 2021).