Friday, August 20th 2021

No PCIe Gen5 for "Raphael," Says Gigabyte's Leaked Socket AM5 Documentation

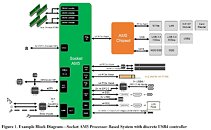

AMD might fall behind Intel on PCI-Express Gen 5 support, say sources familiar with the recent GIGABYTE ransomware attack and ensuing leak of confidential documents. If you recall, AMD had extensively marketed the fact that it was first-to-market with PCI-Express Gen 4, over a year ahead of Intel's "Rocket Lake" processor. The platform block-diagram for Socket AM5 states that the AM5 SoC puts out a total of 28 PCI-Express Gen 4 lanes. 16 of these are allocated toward PCI-Express discrete graphics, 4 toward a CPU-attached M.2 NVMe slot, another 4 lanes toward a discrete USB4 controller, and the remaining 4 lanes as chipset-bus.
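The lane split described above can be checked with a few lines of arithmetic. This is only a sketch: the dictionary keys and function name are illustrative labels, not terms from the leaked documentation, and the figures are the ones quoted in the article.

```python
# Lane allocation for the Socket AM5 SoC as quoted in the article.
# The key names are illustrative labels, not names from the leaked documents.
AM5_LANE_ALLOCATION = {
    "discrete graphics (PEG)": 16,
    "CPU-attached M.2 NVMe": 4,
    "discrete USB4 controller": 4,
    "chipset bus": 4,
}


def total_lanes(allocation: dict[str, int]) -> int:
    """Sum the lanes across all consumers in an allocation table."""
    return sum(allocation.values())


if __name__ == "__main__":
    for consumer, lanes in AM5_LANE_ALLOCATION.items():
        print(f"{consumer:>26}: x{lanes}")
    print(f"{'total':>26}: x{total_lanes(AM5_LANE_ALLOCATION)}")
```

The four allocations add up to the 28 lanes the block diagram reportedly states.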

Socket AM5 SoCs appear to have 4 more lanes to spare than the outgoing "Matisse" and "Vermeer" SoCs. On higher-end platforms these are used up by the USB4 controller, but on lower-end motherboards they can be left unused for that purpose and instead wired to an additional M.2 NVMe slot. Thankfully, memory is one area where AMD will maintain parity with Intel, as Socket AM5 is being designed for dual-channel DDR5. The other SoC-integrated I/O, as well as I/O from the chipset, appear to be identical to "Vermeer," with minor exceptions such as support for 20 Gbps USB 3.2 Gen 2x2. The socket also makes provision for display I/O for APUs of this generation. Intel's upcoming "Alder Lake-S" processor implements PCI-Express Gen 5, but only for the 16-lane PEG port. The CPU-attached NVMe slot, as well as downstream PCIe connectivity, are limited to PCIe Gen 4.
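To put the Gen 4 versus Gen 5 difference on the 16-lane PEG port into numbers, here is a rough sketch of the theoretical per-direction link bandwidth. The per-lane transfer rates (16 GT/s for Gen 4, 32 GT/s for Gen 5) and the 128b/130b line encoding come from the PCIe specifications rather than from the leaked documents, and the function name is made up for illustration. Real-world throughput is lower because of protocol overhead.

```python
# Theoretical PCIe link bandwidth, per direction, before protocol overhead.
# Transfer rates and encoding are taken from the PCIe specifications,
# not from the leaked documentation discussed in the article.
TRANSFER_RATE_GT_S = {4: 16.0, 5: 32.0}  # giga-transfers per second, per lane
ENCODING_EFFICIENCY = 128 / 130          # 128b/130b line encoding


def link_bandwidth_gb_s(gen: int, lanes: int) -> float:
    """Return the theoretical one-direction bandwidth in GB/s."""
    bits_per_second = TRANSFER_RATE_GT_S[gen] * ENCODING_EFFICIENCY  # Gbit/s per lane
    return bits_per_second / 8 * lanes


if __name__ == "__main__":
    for gen in (4, 5):
        print(f"PCIe Gen {gen} x16: {link_bandwidth_gb_s(gen, 16):.1f} GB/s")
        print(f"PCIe Gen {gen} x4:  {link_bandwidth_gb_s(gen, 4):.1f} GB/s")
```

Under these assumptions a Gen 5 x16 link has twice the theoretical bandwidth of a Gen 4 x16 link, roughly 63 GB/s against 31.5 GB/s per direction.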


118 Comments on No PCIe Gen5 for "Raphael," Says Gigabyte's Leaked Socket AM5 Documentation

Source: www.cpu-rumors.com/amd-cpu-roadmap/

Many old interfaces are long gone, some could've disappeared years ago, but didn't due to the fact that they were more cost-efficient than more modern interfaces. Look at the humble D-Sub VGA connector, it's only really disappeared off of monitors with resolutions higher than the interface is capable of using, i.e. north of 2048x1536. In some ways, it should've disappeared with the introduction of DVI and DFP, but DFP faded into obscurity long before the VGA connector did. Logic doesn't always apply to these things, neither does compatibility sometimes, as there have been a lot of weird, proprietary connectors over the years, especially courtesy of Apple. At one point I had an old Sun Microsystems display for my PC that connected via five BNC connectors to a standard D-Sub VGA connector, much like you can connect to a DVI-I display with an HDMI to DVI adapter. I'm not sure I would call that compatibility, more like a dirty hack to make old hardware work with a new interface. This is also why we have so many different adapters between various standards. I guess more recently we can thank Apple for all the various dongles and little hubs that are required to make a Mac work with even the most rudimentary interfaces, due to their choice of going with the Type-C connectors on all their laptops (I don't own any Apple products).

As for USB 2.0, well, most things we use on an everyday basis don't really need a faster interface, I mean, what benefit do you get from having a mouse or a keyboard connect over USB 3.x? Also, if you look at the design of a USB 3.x host controller, the USB 2.0 part is separate from the USB 3.x part, so technically they're two separate standards rolled into one.

Just be glad you don't work with anything embedded or industrial, those things all still use RS-422, RS-485, various parallel busses and what not, as that's where compatibility really matters, but it's been pushed to the extreme in some cases where more modern solutions are shunned, just because. Some of it obviously comes down to mechanical stability as well, as I doubt some more modern interfaces would survive on a factory floor.

You brought this argument up as a way of there being potential consumer (and not just enterprise) benefits in PCIe 5.0. But the fact that PCIe 3.0 and 4.0 still aren't fully utilized entirely undermines that point. For there to be consumer value in 5.0, we would first need to be held back by current interfaces. We are not.

Also: what you're saying here isn't actually correct. Whether the bottleneck is the GPU's inability to process sufficient amounts of data or the interfaces' inability to transfer sufficient amounts of data, both can be alleviated (obviously to different degrees) by reducing the amount of data present in these operations. Reducing texture sizes or introducing GPU decompression (like DirectStorage does) reduces bandwidth requirements. This is of course dependent on a huge number of factors, but the same applies to graphics settings and whether your GPU is sufficiently powerful. It might be more difficult to scale for interface bandwidth, but on the flip side nobody is actually doing that (or programming bandwidth-aware games, at least AFAIK), which begs the question of what could be done if this was actually addressed. Just because games today lack options explicitly labeled and designed to alleviate such bottlenecks doesn't mean that such options are impossible to implement.

- It's boring and they didn't post it because it's boring

- It's exciting and they're holding it for later

- They're AMD fans and want to spread AMD hype

- They're Intel fans and want to hurt AMD by spoiling its plans

- Intel paid them off to not leak it

- Intel is behind the hack

Yes, that last option is rather tongue-in-cheek :P

I am ignoring in this post some attitude, especially that last line. It's understandable. It probably also made you feel nice.

I am also not totally agreeing with some parts. Other parts just say what I already wrote. But it is a very nice reply.

As for ARQ113, they probably got paid by Intel, I mean same case as AMD and Phison if that story is true, or simply they decided that the userbase is enough for them with Intel in the PCIe 4.0 game.

If you can't see why these things impose hard restrictions on performance and why you need to be sure that your customers have the required hardware first before you change the way you write the software then there is nothing I can add to convince you.

Please see:

pcisig.com/membership

Why would Intel pay companies to make things that would compete with Intel products? That makes no sense at all.

Again, the reasons why we haven't seen a huge number of products are that 1. it takes time to develop, 2. it would most likely be something made on a fairly cutting edge node and as you surely know, there's limited fab space, and 3. a lot of things simply don't need PCIe 4.0.

There are already PCIe 5.0 SSDs in the making, but not for you or me.

www.techpowerup.com/284334/samsung-teases-pcie-5-0-enterprise-ssd-coming-q2-2022

www.anandtech.com/show/16703/marvell-announces-first-pcie-50-nvme-ssd-controllers

Expect it to take even longer for consumer PCIe 5.0 devices to appear compared to PCIe 4.0.

Honestly though, I really don't get you, you keep going on and on about something without even trying to, or wanting to, understand how the industry works. It's really quite annoying.

Oh and you can find all certified PCIe 4.0 devices here. It looks like quite a few to me, it's just that most of them aren't for consumers.

pcisig.com/developers/integrators-list?field_version_value%5B%5D=4&field_il_comp_product_type_value=All&keys=

After all, AMD has previously updated PCIe while retaining the same CPU socket, so it's a good inflection point to introduce a new protocol, likely PCIe-conformant and supporting in nature.

We will see if development of PCIe 5.0 products ends up slower, at the same pace, or faster compared to PCIe 4.0. I believe it will be (much) faster.

Your attitude is also annoying, but I am not complaining.

Oh, how nice!

newsroom.intel.com/news/intel-driving-data-centric-world-new-10nm-intel-agilex-fpga-family/

I really doubt it'll be any faster, but you're refusing to understand what I've mentioned, so I give up. Bye bye.

AMD is a board member of the PCI-SIG, since PCIe is what everything from a Raspberry Pi 4 CM to Annapurna's custom server chips for Amazon uses.

Unless there's an industry wide move to something else, I think we're going to keep using PCIe for now.

We're obviously going to be switching to something different at some point, but we're absolutely not at a point where PCIe is getting useless in most devices.

I'm sure we'll see very high-end server platforms switch to something else in the near future, but a regular PC doesn't have multiple CPU sockets or FPGA cards for real-time computational tasks, so the requirements for a wider bus simply aren't there yet.

CCIX is unlikely to ever end up in consumer platforms, but Gen-Z/CXL might (AMD is in both camps). I also have a feeling, as with so many past standards, that whatever becomes the de facto standard, will end up being managed by the PCI-SIG. They've taken over a lot of standards, like PCIe, M.2 etc.

en.wikipedia.org/wiki/PCI-SIG