Friday, August 20th 2021

No PCIe Gen5 for "Raphael," Says Gigabyte's Leaked Socket AM5 Documentation

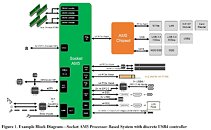

AMD might fall behind Intel on PCI-Express Gen 5 support, say sources familiar with the recent GIGABYTE ransomware attack and ensuing leak of confidential documents. If you recall, AMD had extensively marketed the fact that it was first-to-market with PCI-Express Gen 4, over a year ahead of Intel's "Rocket Lake" processor. The platform block-diagram for Socket AM5 states that the AM5 SoC puts out a total of 28 PCI-Express Gen 4 lanes. 16 of these are allocated toward PCI-Express discrete graphics, 4 toward a CPU-attached M.2 NVMe slot, another 4 lanes toward a discrete USB4 controller, and the remaining 4 lanes as chipset-bus.

Socket AM5 SoCs appear to have 4 more lanes to spare than the outgoing "Matisse" and "Vermeer" SoCs. On higher-end platforms these are used up by the USB4 controller, but on lower-end motherboards they can instead be wired to an additional M.2 NVMe slot. Thankfully, memory is one area where AMD will maintain parity with Intel, as Socket AM5 is being designed for dual-channel DDR5. The other SoC-integrated I/O, as well as I/O from the chipset, appear to be identical to "Vermeer," with minor exceptions such as support for 20 Gbps USB 3.2 Gen 2x2. The socket also makes provision for display I/O for APUs of this generation. Intel's upcoming "Alder Lake-S" processor implements PCI-Express Gen 5, but only for the 16-lane PEG port. The CPU-attached NVMe slot, as well as downstream PCIe connectivity, are limited to PCIe Gen 4.
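As a quick sanity check on the lane budget described in the block diagram, the allocation can be tallied in a few lines. This is only an illustrative sketch: the dictionary name and labels are made up here, and the numbers are the ones reported from the leaked documentation, not confirmed specifications.

```python
# Lane allocation as reported from the leaked Socket AM5 block diagram.
# Names and labels are hypothetical; only the lane counts come from the article.
AM5_LANES = {
    "discrete graphics (PEG)": 16,
    "CPU-attached M.2 NVMe": 4,
    "USB4 controller (or a second M.2 on cheaper boards)": 4,
    "chipset link": 4,
}

total_lanes = sum(AM5_LANES.values())
# Lanes left for slots and devices once the chipset link is subtracted.
usable_lanes = total_lanes - AM5_LANES["chipset link"]

for purpose, lanes in AM5_LANES.items():
    print(f"x{lanes:<2} {purpose}")
print(f"total: {total_lanes}, usable without chipset link: {usable_lanes}")
```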

118 Comments on No PCIe Gen5 for "Raphael," Says Gigabyte's Leaked Socket AM5 Documentation

About materials it’s obviously a discussion we’ll have in the future, although for enterprise and/or longer distances “exotic” materials are probably being tried or even used already.

So, if a full PCIe 5.0 board requires $50 of retimers, another $10-20 in redrivers (which a 4.0 board would of course also need, and might need more of), and $50+ of more expensive materials and manufacturing costs, plus a gross margin of, let's say something low like 30% (which for most industries would barely be enough to cover the costs of running the business), that's a baseline $156 cost increase, before any additional controllers (USB 4.0, nGbE, etc.). That means your 'good enough' baseline $100 PCIe 3.0 motherboard is now a $256 PCIe 5.0 motherboard. Your bargain-basement $80 PCIe 3.0 motherboard would now be a $236 motherboard, unless they choose to restrict PCIe slots further from the socket to slower speeds, but they would still be more expensive as they'd need to ensure 5.0 speeds to the first slot as a minimum. We've already seen this with PCIe 4.0 boards, where baseline costs have jumped $20-50 depending on featuresets, while premium pricing has skyrocketed.
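The arithmetic in that estimate can be reproduced with a short sketch. It assumes the upper end of the $10-20 redriver range and reads the 30% as a markup on cost, since that is what yields the $156 figure; the variable and function names are invented for illustration. A strict gross margin of 30% (margin as a share of the selling price) would come out higher, as the last line shows.

```python
# All dollar figures are the commenter's estimates, not measured costs.
RETIMERS = 50
REDRIVERS = 20   # upper end of the quoted $10-20 range
MATERIALS = 50   # pricier PCB materials and manufacturing
MARKUP = 0.30    # the 30% treated as a markup on cost (assumption)

added_cost = RETIMERS + REDRIVERS + MATERIALS
added_price = round(added_cost * (1 + MARKUP))

def pcie5_price(pcie3_price: int) -> int:
    """Shelf price of a PCIe 3.0 board plus the estimated PCIe 5.0 increase."""
    return pcie3_price + added_price

print(added_price)        # the baseline increase
print(pcie5_price(100))   # the 'good enough' $100 board
print(pcie5_price(80))    # the bargain-basement $80 board

# If 30% were a true gross margin on the selling price instead:
print(round(added_cost / (1 - MARKUP), 2))
```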

We know that PCIe 4.0 devices can take time, and will take time, especially as a lot of companies want to ensure backward compatibility with older platforms. I mean, you can do a 10GbE NIC using a single PCIe 4.0 lane (with bandwidth to spare, too), but they know that most users don't have PCIe 4.0, so they make it x2 for PCIe 3.0.

Also, if you look now, low-cost SSDs are using SATA. Go one step up and you get NVMe x4 regardless of PCIe version, as the M.2 slot won't support multiple drives. So if you have an SSD where you don't need fast speeds, you will be limited to SATA speeds, as moving to NVMe means you waste three more lanes, when a single PCIe 4.0 lane can already get you to 1.9GB/s.
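The per-lane numbers behind the 10GbE and 1.9GB/s points can be checked with a rough sketch. It uses the published signalling rates and 128b/130b encoding, and ignores packet and protocol overhead, so real-world throughput is somewhat lower. The function names are made up for illustration.

```python
# Raw signalling rate per lane in GT/s for each PCIe generation.
GT_PER_S = {"3.0": 8.0, "4.0": 16.0, "5.0": 32.0}
ENCODING = 128 / 130  # 128b/130b line encoding used from PCIe 3.0 onwards

def lane_gb_per_s(gen: str) -> float:
    """Approximate GB/s for one lane, before protocol overhead."""
    return GT_PER_S[gen] * ENCODING / 8  # 8 bits per byte

def lanes_needed(gen: str, device_gb_per_s: float) -> int:
    """Smallest standard link width that covers the device's bandwidth."""
    for width in (1, 2, 4, 8, 16):
        if width * lane_gb_per_s(gen) >= device_gb_per_s:
            return width
    raise ValueError("device exceeds an x16 link")

TEN_GBE = 10 / 8  # 10 Gbit/s Ethernet at line rate is 1.25 GB/s

for gen in ("3.0", "4.0"):
    print(f"PCIe {gen}: {lane_gb_per_s(gen):.2f} GB/s per lane, "
          f"10GbE needs x{lanes_needed(gen, TEN_GBE)}")
```

Under these assumptions a 10GbE NIC fits in one PCIe 4.0 lane but needs two PCIe 3.0 lanes, which matches the x2 cards described above.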

The main idea is bifurcation, splitting the PCIe 4.0 lanes across multiple devices, sort of like a PCIe 4.0 switch (like a network switch). But PCIe switches are expensive, thanks mainly to the server market, while they don't need to be that expensive.

I didn't design the specifications and as I pointed out elsewhere in this thread, it's a shame PCIe isn't "forward" compatible as well as backwards compatible.

Bifurcation has a lot of limitations though, the biggest one being that people don't understand how it works. This means people buy the "wrong" hardware for their needs and then later find out it won't work as expected. A slot is a slot is a slot to most people; they don't understand that it might be bifurcated, muxed or shared with an entirely different interface. This is already causing problems on cheaper motherboards, so it's clearly not a solution that makes a lot of sense and is best avoided.

PCIe switches were much more affordable, until PLX was bought out by Broadcom who increased the prices by a significant amount. It seems like ASMedia is picking up some of the slack here, but again, this is not a real solution. Yes, it's workable for some things, but you wouldn't want to hang M.2 or 10Gbps Ethernet controllers off of a switch/bridge. So far ASMedia only offers PCIe 3.0 switches, but I'm sure we'll see 4.0 solutions from them in the future.

I don't see any proof of PCIe 4.0 having a slower rollout than PCIe 3.0 had, the issue here is that people have short memories. PCIe 3.0 took just as long, if not longer, for anything outside of graphics cards, and we had a couple of generations, if not three, of motherboards that had a mix of PCIe 3.0 and PCIe 2.0 slots. I mean, Intel didn't even manage to offer more than two SATA 6Gbps ports initially, and that was only on the high-end chipset. Anyone remember SATA Express? It was heavily pushed by Intel when they launched the 9-series chipset and it was a must-have feature, yet I can't say I ever saw a single SATA Express drive in retail. This seems to be the same play, a tick box feature that they can brag about, but that won't deliver anything tangible for the consumer. The difference being that PCIe 5.0 might offer some benefits 3-4 years after launch, but by then, Intel's 12th gen will be mostly forgotten.

What, then, makes having that stuff expensive? Making it work properly. Trace quality, signal integrity, routing complexity. All of which has to do with the PCB, its materials, its thickness, its layout and design. And no, consumer boards don't need as many lanes or DIMMs as server boards - that's why consumer boards have always been cheaper, typically at 1/5th to 1/10th the price. The problem is that the limitation for servers used to be quantity: how good a board do you need to stuff it full of I/O? But now, with PCIe 4.0, and even more 5.0, it instead becomes core functionality: what quality of board do you need to make the essential features work at all? Where you previously needed fancy materials to accommodate tons of PCIe lanes and slots, you now need it to make one single slot work. See how that is a problem? See how that raises costs?

And I have no idea where you're getting your "5-10-20%" number from, but the only way that is even remotely accurate is if you only think of the base material costs and exclude design and production entirely from what counts as PCB costs. Which would be rather absurd. The entire point here is that these fast I/O standards drive up the baseline cost of making stuff work at all. Plus, you're forgetting scale: A $100-200-300 baseline price increase on server boards would be relatively easy for most buyers to absorb, and would be a reasonably small percentage of the base price. A $100-150 increase in baseline price for consumer motherboards would come close to killing the diy PC market. And margins in the consumer space are much smaller than in the server world, meaning all costs will get directly passed on to buyers.

I suggest reading the comments. If some of these people had gotten their ideas through, we'd still be using AGP...

www.techpowerup.com/62766/pci-sig-unveils-more-pci-express-3-0-details

www.techpowerup.com/66221/pci-express-3-0-by-2010-supports-heavier-gluttonous-cards

www.techpowerup.com/100976/pci-express-3-0-hits-backwards-compatibility-roadblock-delayed

www.techpowerup.com/134771/pci-sig-finalizes-pci-express-3-0-specifications

www.techpowerup.com/144801/ivy-bridge-cpus-feature-pci-express-3-0

Also, it took nearly a year for PCIe 3.0 graphics cards to arrive after there was chipset support from Intel. Another six months or so later AMD had its first chipset. It took three years for the first PCIe 3.0 NVMe SSD controller to appear from Intel's announcement...

So yeah, PCIe 4.0 isn't slow in terms of rollout.

PCIe 3.0 turned out to be remarkably resilient in many ways, allowing for large motherboards with long trace lengths without anything especially fancy or exotic involved. Heck, like LTT demonstrated, with decent quality riser cables you can daisy-chain several meters of PCIe 3.0 (with many connectors in the signal path, which is even more remarkable) without adverse effects in some scenarios. PCIe 4.0 changed that dramatically, with not a single commercially available 3.0 riser cable working reliably at 4.0 speeds, and even ATX motherboards requiring thicker boards (more layers) and redrivers (which are essentially in-line amplifiers) to ensure a good signal for that (relatively short) data path. That change really can't be overstated. And now for 5.0 even that isn't sufficient, requiring possibly even more PCB layers, possibly higher quality PCB materials, and more expensive retimers (possibly in addition to redrivers for the furthest slots).

I hope that PCIe becomes a differentiated featureset like USB is - not that consumers need 5.0 at all for the next 5+ years, but when we get it, I hope it's limited in scope to useful applications (likely SSDs first, though the real-world differences are likely to be debatable there as well). But just like we still use USB 2.0 to connect our keyboards, mice, printers, DACs, and all the other stuff that doesn't need bandwidth, I hope the industry has the wherewithal to not push 5.0 and 4.0 where it isn't providing a benefit. And tbh, I don't want PCIe 4.0-packed chipsets to trickle down either. Some connectivity, sure, for integrated components like NICs and potentially fast storage, but the more slots and components are left at 3.0 (at least until we have reduced lane count 4.0 devices) the better in terms of keeping motherboard costs reasonable.

Of course, this might all result in high-end chipsets becoming increasingly niche, as the benefits delivered by them matter less and less to most people. Which in turn will likely lead to feature gatekeeping from manufacturers to allow for easier upselling (i.e. restricting fast SSDs to only the most expensive chipsets). The good thing about that is that the real-world consequences of choosing lower end platforms in the future will be very, very small. We're already seeing this today in how B550 is generally identical to X570 in any relevant metric, but is cheaper and can run with passive cooling. Sure, there are on-paper deficits like only a single 4.0 m.2, but ... so? 3.0 really isn't holding anyone back, and won't realistically be for the useful lifetime of the platform, except in very niche use cases (in which case B550 really wouldn't make sense anyhow).

I think I like the idea of a 4-tier chipset system, instead of the current 3-tier one. Going off of AMD's current naming, something like

x20 - barest minimum

x50 - does what most people need while keeping costs reasonable

x70 - fully featured, for more demanding users

x90 - all the bells and whistles, tons of I/O

In a system like that, you'd get whatever PCIe is provided by the CPU (likely to stay at 20 lanes, though 5.0, 4.0 or a mix?), plus varying levels of I/O from chipsets (though x20 tiers might for example not get 5.0 support. I would probably even advocate for that in the x50 tier to keep prices down, tbh.) x20 chipset is low lane count 3.0, x50 is high(er) lane count 3.0, x70 has plenty of lanes in a mix of 4.0 and 3.0, and x90 goes all 4.0 and might even throw in a few 5.0 lanes - for the >$500 motherboard crowd. I guess the downside of a system like this would be shifting large groups of customers into "less premium" segments, which they might not like the idea of. But it would sure make for more consumer choice and more opportunities for smartly configured builds.

www.gigabyte.com/Motherboard/B550-AORUS-MASTER-rev-10#kf

And this

www.gigabyte.com/Motherboard/B550-VISION-D-P-rev-10#kf

Once the manufacturers are trying to make high-end tiers with mid-range chipsets, it just doesn't add up.

Both of those boards end up "limiting" the GPU slot to x8 (as you already pointed out), as they need to borrow the other eight lanes or you can't use half of the features on the boards.

Consumers that aren't aware of this, and who maybe end up pairing one of these boards with an APU, are going to be in for a rude awakening when the CPU doesn't have enough PCIe lanes to enable some features that the board has. It's even worse in these cases, as Gigabyte didn't provide a block diagram, so it's not really clear which interfaces are shared.

This might not be the chipset vendor's fault as such, but 10 PCIe lanes is not enough in these examples. It would be fine on most mATX or mini-ITX boards though.

We're already starting to see high-end boards with four M.2 slots (did Asus have one with five even?) and it seems to be the storage interface of the foreseeable future when it comes to desktop PCs as U.2 never happened in the desktop space.

I think we kind of already have the x90 for AMD, but that changes the socket and moves you to HEDT...

My issue is more that the gap between the current high-end and current mid-range is a little bit too wide, but maybe we'll see that fixed next generation. At least the B550 is an improvement on B450.

Judging by this news post, we're looking at 24 usable PCIe lanes from the CPU, so the USB4 ones might be allocated to NVMe duty on cheaper boards, depending on the cost of USB4 host controllers. Obviously this is still PCIe 4.0, but I guess some of those lanes are likely to be changed to PCIe 5.0 at some point.

Although we've only seen the Z690 chipset from Intel, it seems like they're kind of going down the route you're suggesting, since they have PCIe 5.0 in the CPU, as well as PCIe 4.0, both for an SSD and the DMI interface, but then have PCIe 4.0 and PCIe 3.0 in the chipset.

PCIe 3.0 is still more than fast enough for the kind of Wi-Fi solutions we get, since it appears no-one is really doing 3x3 cards any more. It's obviously still good enough for almost anything you can slot in, apart from 10Gbps Ethernet (if we assume x1 interface here) and high-end SSDs, but there's little else in a consumer PC that can even begin to take advantage of a faster interface right now. As we've seen from the regular PCIe graphics card bandwidth tests done here, PCIe 4.0 is only just about making a difference on the very highest-end of cards and barely that. Maybe this will change when we get to MCM type GPUs, but who knows.

Anyhow, I think we more or less agree on this and hopefully this is something AMD and Intel also figure out, instead of making differentiators that lock out entire feature sets just to upsell to a much higher tier.

Edit: Just spotted this, which suggests AMD will have AM5 CPU SKUs with 20 or 28 PCIe lanes in total, in addition to the fact that those three SKUs will have an integrated GPU as well.

videocardz.com/newz/amd-zen4-ryzen-cpus-confirmed-to-offer-integrated-graphics

My thinking with the chipset lineup was more along the lines of moving the current 70 tier to a 90 tier (including tacking on those new features as they arrive - all the USB4 you'd want, etc.), with the 70 tier taking on the role of that intermediate, "better than 50 but doesn't have all the bells and whistles" type of thing. Just enough PCIe to provide a "people's high end" - which of course could also benefit platform holders in marketing their "real" high-end for people with infinite budgets. Of course HEDT is another can of worms entirely, but then the advent of 16-core MSDT CPUs has essentially killed HEDT for anything but actual workstation use - and thankfully AMD has moved their TR chipset naming to another track too :)

Though IMO 3 m.2 slots is a reasonable offering, with more being along the lines of older PCs having 8+ SATA ports - sure, some people used all of them, but the vast majority even back then used 2 or 3. Two SSDs is pretty common, but three is rather rare, and four is reserved for the people doing consecutive builds and upgrades over long periods and carrying over parts - that's a pretty small minority of PC users (or even builders). Two is too low given the capacity restrictions this brings with it, but still acceptable on lower end builds (and realistically most users will only ever use one or two). Five? I don't see anyone but the most dedicated upgrade fiends actually keeping five m.2 drives in service in a single system (unless it's an all-flash storage server/NAS, in which case you likely have an x16 AIC for them anyhow).

It means that in the case of the Vision board, the Thunderbolt ports won't work. Not a big deal...

Personally I have generally gone for the upper mid-range of boards, as they used to have a solid feature set. However, this seems to have changed over the past 2-3 generations of boards and you now have to go for the lower high-end tiers to get some features. I needed a x4 slot for my 10Gbps card and at the point I built this system, that wasn't a common feature on cheaper models for some reason. Had we been at the point we are now, with PCIe x1 10Gbps cards, that wouldn't have been a huge deal, except for the fact that I would've had to buy a new card...

I just find the current lineups from many of the board makers to be odd, although the X570S SKUs fixed some of the weird feature advantages of B550 boards, like 2.5Gbps Ethernet.

Looking at Intel, they seemingly skipped making an H570 version, but it looks like there will be an H670. So this also makes it harder for the board makers to continue their regular differentiation between various chipsets and SKUs. Anyhow, we'll have to wait and see what comes next year, since we have zero control over any of this.

I think NVMe will be just like SATA in a few years, where people upgrade their system and carry over drives from the old build. I mean, I used to have 4-5 hard drives in my system back in the days, not because I needed to, but because I just kept the old drives around as I had space in the case and was too lazy to copy the files over...

Anyhow, three M.2 slots is plenty right now, but in a couple of years' time it might not be. That said, I would prefer them as board edge connectors that go over the side of the motherboard, with mounting screws on the case instead. This would easily allow for four or five drives along the edge of the board, close to the chipset and with better cooling for the drives than the current implementation. However, this would require cooperation with case makers and it would require a slight redesign of most cases. Maybe it would be possible to do some simple retrofit mounting bar for them as well, assuming there's enough space in front of the motherboard in the case. Some notebooks are already doing something very similar.

I guess we'll just have to wait and see how things develop. On the plus side, I guess with PCIe 5.0, we'd only need a x8 card for four PCIe 4.0 NVMe drives :p
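That x8 figure checks out on raw signalling rate, as a small sketch shows. Note the assumption baked in: the add-in card would need a PCIe switch to fan an x8 Gen 5 uplink out to four x4 Gen 4 links, since plain bifurcation would only hand each drive two lanes. The function name is made up for illustration.

```python
# Raw signalling rate per lane in GT/s.
GT_PER_S = {"3.0": 8, "4.0": 16, "5.0": 32}

def uplink_lanes(n_devices: int, lanes_each: int,
                 device_gen: str, host_gen: str) -> int:
    """Host lanes needed to match the devices' aggregate raw rate."""
    aggregate = n_devices * lanes_each * GT_PER_S[device_gen]
    # Ceiling division, so a partial lane rounds up to a whole one.
    return -(-aggregate // GT_PER_S[host_gen])

# Four PCIe 4.0 x4 NVMe drives behind a PCIe 5.0 uplink.
print(uplink_lanes(4, 4, "4.0", "5.0"))
# The same four drives behind a PCIe 4.0 uplink, for comparison.
print(uplink_lanes(4, 4, "4.0", "4.0"))
```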

Image: videocardz.

Read the row with the number of PCIe lanes. I suppose the platform with 28 PCIe lanes is X670. The other two will have 20 lanes. I don't know, but maybe this is the number of lanes from the chipset or from the CPU?

I agree that we're in a weird place with motherboard lineups, I guess that comes from being in a transition period between various standards. And of course X570 boards mostly arrived too early to really implement 2.5GbE, while B550 was late enough that nearly every board has it, which is indeed pretty weird. But I think that will shake out over time - there are always weird things due to timing and component availability.

I think Intel skipped H570 simply because it would have been the same as H470 and would then either have required rebranding a heap of motherboards (looks bad) or making new ones (expensive) for no good reason. Makes sense in that context. But Intel having as many chipset types as they do has always been a bit redundant - they usually have five, right? That's quite a lot, and that's across only PCIe 2.0 and 3.0. Even if one is essentially 'Zx70 but for business with no OC' that still leaves a pretty packed field of four. Hopefully that starts making a bit more sense in coming generations as well.

But as I said above, I don't think the "I carry over all my drives" crowd is particularly notable. On these forums? Sure. But most people don't even upgrade, but just sell (or god forbid, throw out) their old PC and buy/build a new one. A few will keep the drives when doing so, but not most. And the biggest reason for multiple SSDs is capacity, which gets annoying pretty soon - there's a limit to how many 256-512GB SSDs you can have in a system and not go slightly insane. People tend to consolidate over time, and either sell/give away older drives or stick them in cases for external use. So, as I said, there'll always be a niche who want 5+ m.2s, but I don't think those are worth designing even upper midrange motherboards around - they're too much of a niche.

I just read some other thread here in the forums the other day with people having this exact issue, hence why I didn't realise that it had changed.

My board has a Realtek 2.5Gbit Ethernet chip, but I guess a lot of board makers didn't want to get bad reviews for not having Intel Ethernet on higher-end boards, as they're apparently the gold standard for some reason. Yes, I obviously know the background to this, but by now there's really no difference between the two companies in terms of performance.

But yes, you're right, timing is always tricky with these things, as there's always something new around the corner and due to company secrecy, it's rare that the stars align and everything launches around the same time.

It would seem I was wrong about the H570 too, it appears to be a thing, but it seems to have very limited appeal with the board makers, which is why I missed it. Gigabyte and MSI didn't even bother making a single board with the chipset. It does look identical to the H470, with the only difference being that H570 boards have a PCIe 4.0 x4 M.2 interface connected to the CPU. The B series chipset seems to have largely replaced the H series, mostly due to cost I would guess and the fact that neither supports CPU overclocking.

Well, these days it appears to be six, as they made the W line for workstations as well, I guess it replaced some C version of chipset for Xeons. Intel has really complicated things too much.

I guess I've been spending too much time around ARM chips and not really followed the mid-range and low-end PC stuff enough... The downside of work and being more interested in the higher-end of PC stuff...

You might very well be right about the M.2 drives, although I still hope we can get better board placement and stop having drives squeezed in under the graphics card.

I don't have any SSD under 1TB... I skipped all the smaller M.2 drives, as I had some smaller SATA drives and it was just frustrating running out of space. I also ended up getting to a point with my laptop where the Samsung SATA drive got full enough to slow down the entire computer. Duplicated the drive, extended the partition and it was like having a new laptop. I obviously knew it was an issue, but I didn't expect having an 80%+ full drive being quite as detrimental to the performance as it was.

Can't wait for the day that 4TB NVMe drives or whatever comes next are the norm :p

Of course, adding USB4 support requires some more lanes (or more integrated controllers). But it really isn't a lot.

Resizeable BAR also has nothing to do with Microsoft - it's a PCIe feature.

Peak theoretical transfer speed between the CPU and VRAM is the peak transfer speed of the PCIe bus connecting them.