Sunday, January 16th 2022

Intel "Raptor Lake" Rumored to Feature Massive Cache Size Increases

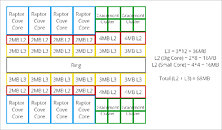

Large on-die caches are expected to be a major contributor to IPC and gaming performance. The upcoming AMD Ryzen 7 5800X3D processor triples its on-die last-level cache using the 3D Vertical Cache technology, to level up to Intel's "Alder Lake-S" processors in gaming, while using the existing "Zen 3" IP. Intel realizes this, and is planning a massive increase in on-die cache sizes, although spread across the cache hierarchy. The next-generation "Raptor Lake-S" desktop processor the company plans to launch in the second half of 2022 is rumored to feature 68 MB of "total cache" (that's AMD lingo for L2 + L3 caches), according to a highly plausible theory by PC enthusiast OneRaichu on Twitter, and illustrated by Olrak29_.

The "Raptor Lake-S" silicon is expected to feature eight "Raptor Cove" P-cores, and four "Gracemont" E-core clusters (each cluster amounts to four cores). The "Raptor Cove" core is expected to feature 2 MB of dedicated L2 cache, an increase over the 1.25 MB L2 cache per "Golden Cove" P-core of "Alder Lake-S." In a "Gracemont" E-core cluster, four CPU cores share an L2 cache. Intel is looking to double this E-core cluster L2 cache size from 2 MB per cluster on "Alder Lake," to 4 MB per cluster. The shared L3 cache increases from 30 MB on "Alder Lake-S" (C0 silicon), to 36 MB on "Raptor Lake-S." The L2 + L3 caches hence add up to 68 MB. All eyes are now on "Zen 4," and whether AMD gives the L2 caches an increase from the 512 KB per-core size that it's consistently maintained since the first "Zen."
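The 68 MB figure follows directly from the rumored per-core and per-cluster sizes. A quick sketch of the arithmetic in Python, using the numbers quoted above; the function name is made up for illustration, and the two-cluster E-core count for "Alder Lake-S" is an assumption based on its 8 E-core configuration rather than something stated in the rumor:

```python
def total_cache_mb(p_cores, l2_per_p_core_mb, e_clusters, l2_per_e_cluster_mb, l3_mb):
    """Return "total cache" (L2 + L3) in MB for a hybrid P-core/E-core die."""
    p_core_l2 = p_cores * l2_per_p_core_mb           # dedicated L2 per P-core
    e_cluster_l2 = e_clusters * l2_per_e_cluster_mb  # L2 shared within each E-core cluster
    return p_core_l2 + e_cluster_l2 + l3_mb

# Rumored "Raptor Lake-S": 8 P-cores x 2 MB, 4 E-core clusters x 4 MB, 36 MB L3.
raptor_lake = total_cache_mb(8, 2, 4, 4, 36)

# "Alder Lake-S" (C0): 8 P-cores x 1.25 MB, 2 MB per E-core cluster, 30 MB L3.
# Assumption: two E-core clusters (8 E-cores), which is not stated in the rumor.
alder_lake = total_cache_mb(8, 1.25, 2, 2, 30)

print(f"Raptor Lake-S: {raptor_lake} MB")  # 16 + 16 + 36 = 68
print(f"Alder Lake-S:  {alder_lake} MB")   # 10 + 4 + 30 = 44.0
```

Under these assumptions, the rumored part would carry roughly 24 MB more L2 + L3 than the current flagship silicon, with most of the gain coming from L2.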

Sources:

OneRaichu (Twitter), Olrak29_ (Twitter), HotHardware

66 Comments on Intel "Raptor Lake" Rumored to Feature Massive Cache Size Increases

8 P-cores is plenty for most workloads for the foreseeable future. Possibly longer than we might even think based on historic trends, as scaling with more threads will ultimately have diminishing returns. The workloads which can scale to 8+ threads are non-interactive batch loads (like non-realtime rendering, video encoding, server batch loads, etc.), where the work chunks are large enough that a large pool of threads can be saturated and the relative overhead becomes negligible.

Interactive applications need workloads to finish in ~5 ms to be responsive, which means that the scaling limit of such workloads depends on their nature, i.e. how much of the work can be done independently. As most workloads are pipelines (chains of operations) with dependencies on other data, there will always be diminishing returns. It's not as if anything can be divided into 1000 chunks and fed to a thread pool when there is a need for synchronization. Such dependencies often mean all threads have to wait for the slowest thread before any of them can proceed to the next step, and if just one of them is slightly delayed (e.g. by the OS scheduler), we could be talking about milliseconds of delay, which is too severe when each chunk needs to sync up in a matter of microseconds to keep things flowing. So in conclusion, we will "never" see interactive applications in general scale well beyond 8 threads, not until a major hardware change, new paradigm, etc. This is not just about software.
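To put rough numbers on the "wait for the slowest thread" argument, here is a toy model in Python. Every figure in it is invented for illustration (4 ms of work per step, a 1 ms delay hitting one thread), and `step_time_ms` is a hypothetical helper, not part of any real engine or scheduler:

```python
def step_time_ms(total_work_ms, threads, delay_ms):
    """Time for one synchronized step: all threads wait for the slowest one."""
    per_thread = total_work_ms / threads  # assume the work splits perfectly evenly
    times = [per_thread] * threads
    times[0] += delay_ms                  # one unlucky thread is delayed, e.g. by the OS scheduler
    return max(times)                     # the barrier releases only when the slowest thread is done

# Illustrative numbers only: 4 ms of work per step, 1 ms delay on one thread.
for n in (1, 2, 4, 8, 16, 64):
    print(f"{n:>2} threads: {step_time_ms(4.0, n, 1.0):.4f} ms per step")
```

In this model, going from 8 to 64 threads saves less than half a millisecond per step, because the single delayed thread sets a floor that no amount of extra threads can remove.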

Where you don't hit the mark is about the extra E-cores. These are added because it's a cheap way for PC makers to sell you a "better" product. We will probably see a "small core race" now that the "big core race" and the "clockspeed race" have hit a wall. So this is all about marketing. :)

Once you have enough P-cores for the "Interactive applications need workloads to finish in ~5 ms to be responsive" case, all spare silicon area should be spent on E-cores. They're just better at scalable, non-interactive workloads in terms of efficiency and density. The concessions that make them unsuitable for interactive workloads are exactly what give them such a huge advantage in die area and power consumption.

We're still in the early days of hybrid architectures on Windows but once the teething troubles are ironed out I firmly believe that the E-core count race is on. It will be especially important in the ULP laptop segment where efficiency and power consumption are far more important than on desktop. I'm actually super excited for the Ultra-Mobile silicon Intel showed during their architecture day; Big IGP, and massive BIAS towards E-cores - 2P, 8E.

We are speaking about the manufacturer that played around with HT and core counts for years just for segmentation.

But I do know a guy who had an 8086. No HDD at all, he'd load games off floppy disks. He even learned how to shuffle files around so that the "please insert disk into drive a:" message would pop up as few times as possible.

One good contender for the direction we may be heading is Intel's research into "threadlets". Something in this direction is what I've been expecting based on seeing the challenges of low level optimization. So the idea is basically to have smaller "threads" of instructions only existing inside the CPU core for a few clock cycles, transparent to the OS. But Intel have tried and failed before to tackle these problems with Itanium, and there is no guarantee they will be first to succeed with a new tier of performance. But if we find a sensible, efficient and scalable way to express parallelism and constraints on a low level, then it is certainly possible to scale performance to new levels without more high level threads. There is a very good chance that new paradigms will play a key role too, paradigms that I'm not aware of yet.

E-cores are far from useless though. In addition to firewall and router appliances with Avoton/Denverton Atoms, I've set up plenty of NUCs with Pentium Silver or Celeron J CPUs comprised entirely of what is now called an E-core, and whilst they're no speed demons, they're relatively competent machines that can be passively cooled at 10W, something that none of the *-Lake architectures have managed for a very long time.

All the problems with them have genuinely been scheduler teething troubles, where threads requiring high performance and minimum response time were incorrectly scheduled on the E-cores and effectively given a very low priority by mistake.

As the scheduler bugs get ironed out and tweaks to the Intel Thread Director get made, the perfect blend of P+E cores working together to do the things that each is best at will show that the hybrid architecture is the way forward.

I mean, I could be wrong, but big.LITTLE has been overwhelmingly dominant in smartphones. No flagship phone CPU is made any other way now.

The bottleneck here is Microsoft, who still haven't finished making Windows 8, whilst rebranding it 8.1, 10, 11 etc. 11 isn't new, it's just yet more lipstick on the unfinished pig that is halfway towards the full ground-up OS redesign that they promised with Project Longhorn (turned into Vista!). Vista SP2 finally gave Microsoft a revamp of underlying backend stuff. Windows 8 was the start of the frontend revamp. Once the Windows NT management consoles and legacy control panel settings are fully migrated to the native modernUI, then Microsoft will finally have achieved what they promised with Longhorn that they started on in May 2001, almost 22 years ago.

Expecting a high-quality, futuristic scheduler from those useless clowns is pointless. In 22 years they've basically failed to finish their original project. That might be done within 25 years. Expecting them to competently put together a working scheduler that does things the way we all hope they will is crazy. We might as well just howl at the moon until we're all old and grey for all the good that wishful thinking will do ;)

It's no accident that more and more developers are moving to Linux. We need a stable and reliable platform to get work done.

Anyway, this is good. 8 P-Cores is definitely enough for gaming for years to come, what needs improvement now is the cache sizes. Intel has seen this in AMD's CPUs and I'm glad they're following.

After experiencing Loonix and its cultist userbase for months, I ended up with 11 again for a good reason. The "clowns" at Microsoft are still the only ones who managed to give me an OS that does everything I want it to do and more, including running my expensive VR headset and my competent video editing software. And also handling my CPU better. I never saw 5.2 GHz and proper scheduling out of my 5900X on Linux.

If Linux ever wants to overtake Windows and finally have that mythical Year of the Linux desktop (which was supposed to come ages ago, then now, then in the future), then they'd need a culling of their crappy user base (which will never happen) and an acceleration in compatibility (which will be as slow as ReactOS). Because so far, Windows is still the best distro and compatibility layer I've used.

You have Linux users desperately trying to get people to ditch Windows, and when they finally do and choose a beginner-friendly distro like Ubuntu, they scream and howl at them for not choosing this or that elitist distro with tons of work needed to get it running properly. They should be glad they even managed to get people to ditch Windows in the first place.

I frankly don’t mind who is “winning”, and I don’t think that a one-year-old CPU (actually more like a year-and-a-half-old CPU…) with more cache is something to be happy about. And I’m sure the 5800X3D's price will be hilarious. I’m an AMD customer, and I’m not happy with what AMD has been doing commercially lately: Ryzen Zen 3 pricing and some technical choices on RDNA2 products (that embarrassing joke that is the 6500XT, for instance) make me think AMD needs competition, because they are becoming more and more greedy over time.

With Intel, you never know…