Friday, July 14th 2017

Windows 10 Process-Termination Bug Slows Down Mighty 24-Core System to a Crawl

So, you work for Google. Awesome, right? Yeah. You know what else is awesome? Your 24-core, 48-thread Intel build system with 64 GB of RAM and a nice SSD. Life is good, man. So, you've done your code work for the day on Chrome, because that's what you do, remember? (Yeah, that's right, it's awesome.) Before you go off to collect your Google check, you click "compile" and expect a speedy result from your wicked-fast system.

Only you don't get it... Instead, your system comes grinding to a lurching halt, and mouse movement becomes difficult. Fighting against what appears to be an impending system crash, you hit your trusty CTRL-ALT-DELETE and bring up Task Manager... only to find 50% CPU/RAM utilization. Why, then, is everything stopping?

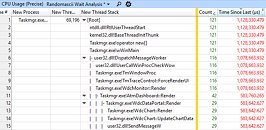

If you would throw up your arms and walk out of the office, this is why you don't work for Google. For Google programmer Bruce Dawson, there was only one logical way to handle this: "So I did what I always do - I grabbed an ETW trace and analyzed it. The result was the discovery of a serious process-destruction performance bug in Windows 10." This is an excerpt from a long, detailed blog post by Bruce titled "24-core CPU and I can't move my mouse" on his WordPress blog, randomascii. In it, he details a serious new bug that is only present in Windows 10 (not other versions): process destruction appears to be serialized.

What does that mean, exactly? It means that when a process "dies" or closes, part of its cleanup has to take a single system-wide lock, so only one process at a time can get through that step, no matter how many cores are available. In this critical part of the OS, which every process must eventually pass through, Windows 10 is effectively single-threaded.

To be fair, this is not an issue a normal end user would encounter. But developers often spawn lots of processes and close them just as often, and they use high-end multi-core CPUs to speed this along. Bruce notes that in his case, his 24-core CPU only made things worse: it caused the build system to spawn even more processes, so even more had to close. And because they all have to pass through the same serialized step, the OS grinds to a halt during teardown, and peak performance is never realized.
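To see why more cores can make the stall longer rather than shorter, here is a back-of-the-envelope model. It is my own simplification, not something from Bruce's post: assume each process's teardown has a part that spreads evenly across cores and a part that must hold the single lock, and assume the build launches roughly one job per core. The per-process costs below are made-up numbers chosen only to show the shape of the curve.

```python
def teardown_seconds(processes: int, cores: int,
                     parallel_ms: float, serialized_ms: float) -> float:
    """Estimate wall-clock time to tear down `processes` processes.

    Hypothetical model: the parallel part of each teardown is divided
    evenly across `cores`, while the serialized part (the single lock)
    is paid once per process, one process at a time.
    """
    parallel_total = processes * parallel_ms / cores
    serialized_total = processes * serialized_ms
    return (parallel_total + serialized_total) / 1000.0


# Illustrative, made-up costs: 10 ms parallel work, 1 ms under the lock.
for cores in (8, 24, 48):
    processes = cores * 20  # assume the build spawns ~20 jobs per core
    print(cores, processes, round(teardown_seconds(processes, cores, 10.0, 1.0), 2))
```

In this model the parallel share stays constant as cores are added, because the extra cores are matched by extra processes, while the serialized share grows with the process count. That is the same effect Bruce describes: the bigger machine spawns more processes, and all of them queue up behind the one lock.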

As for whether this is a big bug if you aren't a developer: well, that's up for debate. Certainly not directly, I'd wager, but as a former OS/2 user and witness to Microsoft's campaign against it back in the day, I can't help but be reminded of the Microsoft FUD surrounding OS/2's SIQ (Synchronous Input Queue) issue, which persisted even years after it had been fixed. Does this not feel somewhat like sweet, sweet karma for MS from my perspective? Maybe, but honestly, that doesn't help anyone.

Hopefully a fix will be out soon, and unlike the OS/2 days, the memory of this bug will be short-lived.

Source:

randomascii WordPress blog

107 Comments on Windows 10 Process-Termination Bug Slows Down Mighty 24-Core System to a Crawl

Is he using the Creators Update? Is he still on the original Windows 10 release? Has this already been fixed? We don't know, because he didn't tell us what build of Windows 10 he is on.

And I'd expect a developer to know about the different builds of Windows 10...

This is an edge-case bug that's only an issue when you have 40 fucking cores and need to terminate 30 processes.

And I bet you Windows Server doesn't have the issue.

This is just a case of somebody using a desktop OS on a server and then wondering why stuff is broken.

WTF is WRONG with you?

But that's neither here nor there. If you need an example of the damage an outdated OS can do: cough, Windows 7 and WannaCry, cough cough.

I think what it's going to boil down to is that it's like having 1000 files on a file system versus having 1000 files archived into one library. It isn't abundantly obvious, but those 1000 files on the file system have 4 MiB of overhead and are generally slow to access, because the HDD/SSD has to read the file system to find out where the data is, then move to the file and read it. If they're all in one library, you're just moving a pointer within a single file that the file system already looked up. Access time is much faster because the overhead is far lower.

Processes are the same way. A process has to keep a record of all of its memory use. Threads can quickly request and release memory because there isn't much in the way of overhead (e.g. security, NX bit, etc.). Processes not only need permission to execute, they also have to load all of their modules (10-30 is not uncommon). All of that needs to be unloaded from memory while the process winds down.

Windows Server 2012 R2:

Here's the same program but instead using _Stop = true (peaceful termination):

As I said, whoever wrote gomacc.exe is an idiot.

Just as one big file is better than lots of small files, one big process with lots of threads is better than lots of single-threaded processes. In both cases, the latter creates a lot of hidden overhead.

To recap: left is bad (spam processes), right is good (spam threads)...
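A quick way to see the overhead this comment is describing is to time N short-lived child processes against N threads doing the same trivial work. This is a hypothetical Python sketch, not the commenter's own test program (which isn't shown). Each child is a fresh interpreter running `pass`, so the process figure also includes interpreter start-up, which exaggerates the gap compared with a small native executable.

```python
import subprocess
import sys
import threading
import time


def spawn_processes(n: int) -> float:
    """Start n child interpreters that exit immediately; return seconds taken."""
    start = time.perf_counter()
    children = [subprocess.Popen([sys.executable, "-c", "pass"]) for _ in range(n)]
    for child in children:
        child.wait()  # reap each child so its teardown is included in the timing
    return time.perf_counter() - start


def spawn_threads(n: int) -> float:
    """Start n threads that exit immediately; return seconds taken."""
    start = time.perf_counter()
    workers = [threading.Thread(target=lambda: None) for _ in range(n)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()  # wait for every thread to finish
    return time.perf_counter() - start


if __name__ == "__main__":
    n = 50
    print(f"{n} processes: {spawn_processes(n):.3f} s")
    print(f"{n} threads:   {spawn_threads(n):.3f} s")
```

Threads share one address space and one set of loaded modules, so creating and destroying them skips most of the per-process bookkeeping; that is the trade-off the "spam threads" side of the comparison is relying on.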

Process.GetCurrentProcess().Kill() = instant close, no hitch

Environment.Exit(0) = instant close, no hitch

_Stop = true = moderate close, no hitch

Thread.Abort() = slow close, no hitch
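The `_Stop = true` entry is the cooperative pattern: the worker checks a shared flag and winds itself down. Below is a minimal Python sketch of that pattern, using `threading.Event` as a stand-in for the commenter's `_Stop` field (their actual program isn't shown, so the names here are hypothetical). Python's `threading` module has no public way to forcibly kill a thread, which is one reason this cooperative style is the norm there.

```python
import threading


def worker(stop: threading.Event, counter: list) -> None:
    """Do small units of work until asked to stop."""
    while not stop.is_set():
        counter[0] += 1   # stand-in for one unit of real work
        stop.wait(0.001)  # pause briefly, but wake at once if stop is set
    # Any cleanup would go here; the thread ends by returning normally.


stop = threading.Event()
counter = [0]
t = threading.Thread(target=worker, args=(stop, counter))
t.start()
stop.wait(0.05)       # let the worker run for roughly 50 ms

stop.set()            # the equivalent of `_Stop = true`
t.join(timeout=1.0)   # wait for the worker to notice the flag and finish
print("stopped:", not t.is_alive(), "iterations:", counter[0])
```

The shutdown is only as fast as the worker's next check of the flag, which fits the "moderate close" rating in the list above.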

It has nothing to do with memory management or disk I/O.

From the original post:

Well, what do you know. Process creation is CPU bound, as it should be. Process shutdown, however, is CPU bound at the beginning and the end, but there is a long period in the middle (about a second) where it is serialized – using just one of the eight hyperthreads on the system, as 1,000 processes fight over a single lock inside of NtGdiCloseProcess. This is a serious problem. This period represents a time when programs will hang and mouse movements will hitch – and sometimes this serialized period is several seconds longer.

The fuck they can't.

That's exactly the issue: thread killing is getting stuck on a lock, since NtGdiCloseProcess can only work through one thread at a time, and it's hanging.

The process needs to cooperate, yes, but you can most certainly force-terminate a thread.
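The experiment in the quoted post boils down to "start a lot of processes, then tear them all down at once, and time each phase." Here is a rough Python sketch of that shape. It is hypothetical and not Bruce's actual test program: the children simply sleep until killed, and the batch size is an arbitrary choice. On Windows, `Popen.terminate()` calls `TerminateProcess`; on POSIX it sends SIGTERM. Any mouse hitching during the kill phase would only be expected on a Windows 10 build that actually has the bug.

```python
import subprocess
import sys
import time


def create_and_kill(n: int) -> tuple[float, float]:
    """Launch n idle child processes, then kill them; return (create_s, kill_s)."""
    start = time.perf_counter()
    children = [
        subprocess.Popen([sys.executable, "-c", "import time; time.sleep(60)"])
        for _ in range(n)
    ]
    created = time.perf_counter()

    for child in children:
        child.terminate()  # TerminateProcess on Windows, SIGTERM on POSIX
    for child in children:
        child.wait()       # keep the clock running until every child has exited
    finished = time.perf_counter()

    return created - start, finished - created


if __name__ == "__main__":
    # Arbitrary batch size; raise it toward the 1,000 processes in the quote
    # if the machine has the memory for it.
    create_s, kill_s = create_and_kill(64)
    print(f"create: {create_s:.2f} s  kill: {kill_s:.2f} s")
```

The wall-clock numbers alone won't show where the time goes; the quoted post pins it on the lock in NtGdiCloseProcess by recording an ETW trace while a test like this runs.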

Hmm, out of curiosity, I'm going to try killing a single process that uses over 10 GiB of memory...

Edit: No perceived hitch:

That is one process consuming over 12 GiB of RAM (probably some page file too). I switched over to the Processes tab, clicked End Task, then switched back to Performance and watched it unwind while moving the mouse in a circle. I never saw it stop.