Friday, July 14th 2017

Windows 10 Process-Termination Bug Slows Down Mighty 24-Core System to a Crawl

So, you work for Google. Awesome, right? Yeah. You know what else is awesome? Your 24-core, 48-thread Intel build system with 64 GB of RAM and a nice SSD. Life is good, man. So, you've done your code work for the day on Chrome, because that's what you do, remember? (Yeah, that's right, it's awesome.) Before you go off to collect your Google check, you click "compile" and expect a speedy result from your wicked-fast system.

Only you don't get it... Instead, your system comes grinding to a lurching halt, and mouse movement becomes difficult. Fighting against what appears to be an impending system crash, you hit your trusty "CTRL-ALT-DELETE" and bring up Task Manager... to find only 50% CPU/RAM utilization. Why, then, was everything stopping?

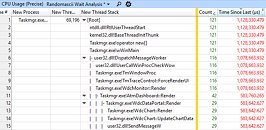

If you would throw up your arms and walk out of the office, this is why you don't work for Google. For Google programmer Bruce Dawson, there was only one logical way to handle this: "So I did what I always do - I grabbed an ETW trace and analyzed it. The result was the discovery of a serious process-destruction performance bug in Windows 10."

This is an excerpt from a long, detailed blog post by Bruce titled "24-core CPU and I can't move my mouse" on his WordPress blog, randomascii. In it, he details a serious new bug that is present only in Windows 10 (not other versions): process destruction appears to be serialized.

What does that mean, exactly? It means that when a process "dies" or closes, its teardown has to wait its turn in a single-file line instead of running in parallel with the others. In this critical part of the OS, which every process must eventually pass through, Windows 10 is effectively single-threaded.

To be fair, this is not an issue a normal end user would encounter. But developers often spawn lots of processes and close them just as often, and they use high-end multi-core CPUs to speed this along. Bruce notes that in his case, his 24-core CPU only made things worse, as it caused the build to spawn more build processes, and thus even more had to close. And because they all go through the same single-threaded queue, the OS grinds to a halt during this operation, and peak performance is never reached.
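To see why extra cores stop helping once teardown is serialized, here is a toy back-of-the-envelope model in Python. It is only a sketch: the function name, the batch-style scheduling, and every number in it are made up for illustration and are not taken from Bruce's trace.

```python
import math

def build_wall_time_ms(n_procs, cores, work_ms, teardown_ms):
    """Toy model: compile work runs in parallel, process teardown runs one at a time.

    All parameters are hypothetical; this is not a measurement of Windows 10.
    """
    # Compile work: processes run in batches of `cores` at a time.
    parallel_part = math.ceil(n_procs / cores) * work_ms
    # Teardown: serialized, so it costs the same no matter how many cores exist.
    serial_part = n_procs * teardown_ms
    return parallel_part + serial_part

if __name__ == "__main__":
    for cores in (4, 24, 48):
        total = build_wall_time_ms(n_procs=480, cores=cores, work_ms=1000, teardown_ms=100)
        print(f"{cores} cores: {total} ms")
```

In this model the serialized term never shrinks as cores are added, so it sets a floor on build time, and a build system that launches more processes on a bigger machine only makes that term larger.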

As for whether this is a big bug if you aren't a developer: well, that's up for debate. Certainly not directly, I'd wager, but as a former user of OS/2 and witness to Microsoft's campaign against it back in the day, I can't help but be reminded of the Microsoft FUD surrounding OS/2's SIQ issue, which persisted even years after it had been fixed. Does this not feel somewhat like sweet, sweet karma for MS from my perspective? Maybe, but honestly, that doesn't help anyone.

Hopefully a fix will be out soon, and unlike the OS/2 days, the memory of this bug will be short-lived.

Source: randomascii WordPress blog

107 Comments on Windows 10 Process-Termination Bug Slows Down Mighty 24-Core System to a Crawl

I am an early adopter, Win 10 was fine for the first few years. Now MS just c**ked the OS with stupid updates to add more tumors to their crap kernel which is decades old.

They said there would be no new Windows numbered versions. Which means things are just going to get worse rather than better.

And developers are also taking only baby steps because the OS is just a mess now.

F*** the consumer, right? Nobody cares anymore.

Google should have been using their Chrome OS

And now maybe some sufficient condition rather than a necessary one? :-)

What sort of 'sufficient condition' are you looking for?

Either/Or etc

Chrome OS is a lightweight end-user OS which is mainly web-only, for shit hardware devices. Where in that is a proper development environment for a super high-end desktop?

ffs people talking because oxygen is free

the absence of evidence is not the evidence of absence

That said, I probably am not much of a factor and am the biggest bozo here, lol.

Ah, I see now. Threads vs processes eluded me for a bit (been a while since I programmed). This is indeed a crappy build method if it's all processes, though not uncommon inside unix-land.

I think there are only two scenarios where multiple processes make sense:

1) Experimental, distributed applications like BOINC. You want them separate for stability, security, and control reasons. They usually never finish at the same time even if you have 1000 projects running so even that is very unlikely to expose this bug.

2) Application updaters because you can't modify a running application. Usually only main application + updater and they never really ever run at the same time so no problem here either.

A process is effectively a collection of related threads.

I'm curious how *nix behaves in the same situation (closing 1000 processes in rapid succession).
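One way to poke at that question is a small timing script. The Python sketch below starts a batch of trivial child processes, waits until each reports that it is up, then releases them all at once and times how long the exits take. The function name and the stdin/stdout handshake are choices made for this example, and the number it prints says nothing about any particular OS until you run it there.

```python
import subprocess
import sys
import time

# Child program: report readiness, then block until stdin reaches EOF.
CHILD_CODE = "import sys; print('ready', flush=True); sys.stdin.read()"

def time_mass_exit(n_procs):
    """Start n_procs idle children, release them together, and time their exit."""
    children = [
        subprocess.Popen([sys.executable, "-c", CHILD_CODE],
                         stdin=subprocess.PIPE, stdout=subprocess.PIPE)
        for _ in range(n_procs)
    ]
    # Wait for every child to finish starting so startup cost is not timed.
    for child in children:
        child.stdout.readline()

    start = time.perf_counter()
    for child in children:
        child.stdin.close()          # EOF on stdin lets the child exit
    exit_codes = [child.wait() for child in children]
    elapsed = time.perf_counter() - start

    for child in children:
        child.stdout.close()
    return elapsed, exit_codes

if __name__ == "__main__":
    elapsed, codes = time_mass_exit(50)
    print(f"{len(codes)} processes exited in {elapsed:.3f} s")
```

Running it with increasing process counts on different systems would show whether exit time grows roughly linearly or worse. The measured interval also includes Python interpreter shutdown in each child, so treat it as a rough comparison rather than a clean benchmark of the kernel.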

A thread has a shared resource pool with its parent process and its thread relatives. If you load a file in a thread, that file is concurrently accessible by every relative thread and by the main process app.

When you are building an application and you have a shared library, all the threads that are trying to read code from that library will try to access that file at different times or at the same time. While compiling, you rely on different temporary resources to write to and read from; 10 threads doing this work need a mechanism to keep them from writing to and reading from the same shared resource at once. So if you think about memory footprint and resource destruction, using threads instead of processes makes little to no difference. You will have to load a different copy of your application into memory for every thread you spawn, and you will need a lot of control code. It will be slower, less safe, and probably just as slow to unload.
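For readers who have not written threaded code, the "mechanism" in question is usually a lock. The Python sketch below is illustrative only: the function name and the shared counter are made up, and a real build tool would be guarding files or caches rather than an integer.

```python
import threading

def count_with_threads(n_threads, increments_per_thread):
    """Have several threads update one shared counter, guarded by a lock."""
    shared = {"value": 0}            # lives in the process, visible to every thread
    lock = threading.Lock()

    def worker():
        for _ in range(increments_per_thread):
            # Only one thread at a time may read-modify-write the shared value.
            with lock:
                shared["value"] += 1

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared["value"]

if __name__ == "__main__":
    print(count_with_threads(10, 1000))
```

Every thread sees the same `shared` dictionary because threads live inside one process. That is the convenience and also the reason the locking code has to exist at all.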

Now take the process spawning approach.

You develop a simple working application, you spawn that 10 times in 10 different processes and you are done.

Spawning lots of processes is just developer laziness: relying on the operating system for locks and for catching app crashes instead of doing it yourself (which would give a huge performance boost).