Wednesday, July 25th 2018

Intel Core i9 8-core LGA1151 Processor Could Get Soldered IHS, Launch Date Revealed

The fluid thermal interface material between the processor die and the IHS (integrated heatspreader) has been a particularly big complaint of PC enthusiasts in recent times, especially given that AMD uses a soldered IHS (believed to be more effective at heat transfer) across its Ryzen processor line. We're getting reports that Intel plans to give at least its top-dog Core i9 "Whiskey Lake" 8-core socket LGA1151 processor a soldered IHS. The top three parts of this family have been detailed in our older article.

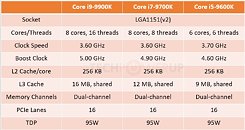

The first Core i9 "Whiskey Lake" SKU is the i9-9900K, an 8-core/16-thread chip clocked between 3.60 and 5.00 GHz, armed with 16 MB of L3 cache. The introduction of the Core i9 extension to the mainstream desktop segment could mean Intel is carving out a new price point for this platform, above the $300-350 traditionally held by the top Core i7 "K" SKUs of previous generations. In related news, we are also hearing that the i9-9900K could launch as early as 1st August, 2018. This explains why motherboard manufacturers are in such a hurry to release BIOS updates for their current 300-series chipset motherboards.

Source: Coolaler


79 Comments on Intel Core i9 8-core LGA1151 Processor Could Get Soldered IHS, Launch Date Revealed

But now, with the 8-core die of the 9900K, the die is likely big enough to make soldering easy enough for Intel to do it. The switch from solder to TIM was also a consequence of Intel's stagnation: sticking with the same 4-core die for so long and just doing process shrinks led to very small physical dies. If they had moved the mainstream up sooner, like they should have, the dies would have been large enough to make solder a viable option.

So not the entire series then.

I think the 5820K had 28 lanes; so did the 5930K? Was it the 5960X that had the full 40 lanes? The same went for the 6xxx series and such, I believe. I don't think the limitation was brought in until the 5xxx series CPUs, was it?

As for RR, I can see ASP being the reason why AMD did this. I won't defend it, but I can see why solder is unsustainable there, given the volumes and margins on that part.

To me, saying Raven Ridge and Summit Ridge are the same series of CPUs is like saying the same about the 7700K and 7900X. They both start with a 7, but they couldn't be more different...

Cheaper, IMO. Why would it be easier, given they still make soldered CPUs and are seemingly going back to solder for the upcoming i9?

Now, whether an effective 28 PCIe 3.0 lanes is too little for the average midrange gamer with 1 GPU and 1 M.2 drive is another story altogether.

AMD has gotten way better. But not that much better.

Come on now, we all know you know better; it's pretty obvious solder has way better heat transfer than toothpaste.

2) The difference between Raven Ridge and Summit/Pinnacle Ridge is far bigger than between any mainstream Intel chips, regardless of differences in core count. The Intel 4+2 and 6+2 dice are largely identical except for the 2 extra cores. All Summit/Pinnacle Ridge chips (and Threadripper) are based off the same 2-CCX iGPU-less die (well, updated/tuned for Pinnacle Ridge and the updated process node, obviously). Raven Ridge is based off an entirely separate die design with a single CCX, an iGPU, and a whole host of other uncore components belonging to that. The difference is comparable to if not bigger than the difference between the ring-bus MSDT and the mesh-interconnect HEDT Intel chips.

3) If "Same socket, in the same product stack" is the rule, do you count Kaby Lake-X as the same series as Skylake-X?

4) "Same product stack" is also grossly misleading. The way you present it, Intel has one CPU product stack (outside of the weirdly named low-end Core-based Pentiums and Celerons, that is, which seem to "lag" a generation or two in their numbering). They all use the same numbering scheme, from mobile i3s to 18-core HEDT i9s. But you would agree that the U, H and other suffixes for mobile chips place them in a different product stack, no? Or would you say that Intel has no mobile product stack? 'Cause if you think they do, then you have to agree that the G suffix of the desktop RR APUs also makes that a separate product stack. Not to mention naming: Summit and Pinnacle Ridge are "Ryzen". Then there's "Ryzen Threadripper". Then there's "Ryzen with Vega Graphics". Subsets? Sure. Both are. But still separate stacks.

You're right that DMA alleviates this somewhat, but that depends on the workload. Is all you do with your SSDs copying stuff between them? If not, the data is going to go to RAM or the CPU. If you have a fast NIC, have you made sure that the drive you're downloading to/uploading from is connected off the PCH and not the CPU? 'Cause if not, you're (again) using that DMI link. And so on, and so on. The more varied your load, the more that link gets saturated. And again, removing the bottleneck almost entirely would not be difficult at all: Intel would just have to double the lanes for the uplink. This would require a tiny increase in die space on the CPUs and PCHs, and somewhat more complex wiring on the motherboard, but I'm willing to bet the increase in system cost would be negligible.

Apparently you're not familiar with Intel HSIO/Flex-IO or the feature sets of their chipsets. You're partially right that USB is provided: 2.0 and 3.0, but not 3.1, except on the 300-series chipsets other than the Z370 (which is really just a rebranded Z270). Ethernet is done through separate controllers over PCIe, and SATA shares lanes with PCIe.
Check out the HSIO lane allocation chart from AnandTech's Z170 walkthrough from the Skylake launch; the only major difference between that and Z270/Z370 is the addition of a sixth PCIe 3.0 x4 controller, for 4 more HSIO lanes. How they can be arranged/split (and, crucially, how they cannot) works exactly the same. Note that Intel's PCH spec sheets always say "up to" X number of USB ports, PCIe lanes and so on, because they are interchangeable. Want more than 6 USB 3.0 ports? That takes away an equivalent number of PCIe lanes. Want SATA ports? All of those occupy RST PCIe lanes, though at least some can be grouped on the same controller. Want dual Ethernet? Those will eat PCIe lanes too. And so on. The moral of the story: an implemented Intel chipset does not have as many available PCIe lanes as Intel advertises.

That jibes pretty well with der8auer's recent look into the question "can you solder an IHS yourself?", but with one major caveat: the difference in complexity and cost between a one-off like the process shown there and doing the same on an industrial scale should really not be underestimated. Intel already knows how to do this. They already own the tools and machinery, as they've done this for years. Intel can buy materials at bargain-basement bulk prices. Intel has the engineering expertise to minimize cracks and faults, and an industrial-scale process like this would obviously be fine-tuned to keep the soldering step from causing cracked dice and other failures.
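The lane arithmetic argued in the thread can be sketched as a small Python model. It is a simplified illustration under assumptions not stated in the comments: DMI 3.0 is treated as a PCIe 3.0 x4 link (8 GT/s per lane, 128b/130b encoding), and the PCH is modeled as a Z270-style part with 30 HSIO lanes, 24 of which can be PCIe, with the first 6 USB 3.0 ports on lanes that can never be PCIe. The function names and the one-lane-per-port rule are hypothetical; real chipsets also restrict which physical lanes can take which role.

```python
# Simplified, hypothetical model of the PCIe lane arithmetic discussed above.
# Assumed figures (not from the thread): DMI 3.0 behaves like PCIe 3.0 x4,
# and a Z270-style PCH has 30 HSIO lanes, 24 of which can be PCIe.

PCIE3_GT_PER_S = 8.0          # PCIe 3.0 raw transfer rate per lane, GT/s
PCIE3_ENCODING = 128 / 130    # 128b/130b line-code efficiency


def link_bandwidth_gb_s(lanes: int) -> float:
    """Usable bandwidth of a PCIe 3.0-style link in GB/s, per direction."""
    return lanes * PCIE3_GT_PER_S * PCIE3_ENCODING / 8  # bits -> bytes


def pcie_lanes_left(usb3_ports: int, sata_ports: int, gbe_ports: int,
                    total_hsio: int = 30, fixed_usb3: int = 6) -> int:
    """PCIe lanes remaining after other devices claim flexible HSIO lanes.

    Hypothetical rule: the first `fixed_usb3` USB 3.0 ports use lanes that
    can never be PCIe; every further USB 3.0 port, SATA port and Ethernet
    controller consumes one lane that would otherwise be PCIe.
    """
    flexible = total_hsio - fixed_usb3
    used = max(0, usb3_ports - fixed_usb3) + sata_ports + gbe_ports
    if used > flexible:
        raise ValueError("configuration needs more HSIO lanes than exist")
    return flexible - used


if __name__ == "__main__":
    print(f"x4 uplink: {link_bandwidth_gb_s(4):.2f} GB/s per direction")
    print(f"x8 uplink: {link_bandwidth_gb_s(8):.2f} GB/s per direction")
    # Example board: 8 USB 3.0 ports, 6 SATA ports and one Ethernet controller
    print("PCIe lanes left:", pcie_lanes_left(8, 6, 1))
```

Under these assumptions the x4 uplink works out to about 3.94 GB/s per direction, the same link width a single PCIe 3.0 x4 NVMe SSD uses on its own, which is why a mixed load behind the PCH can saturate it; doubling the uplink to x8 gives about 7.88 GB/s. The example board keeps 15 of the advertised 24 PCIe lanes.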