Wednesday, July 25th 2018

Intel Core i9 8-core LGA1151 Processor Could Get Soldered IHS, Launch Date Revealed

The fluid thermal interface material (TIM) between the processor die and the IHS (integrated heatspreader) has been a major complaint of PC enthusiasts in recent times, especially given that AMD uses a soldered IHS (believed to be more effective at heat transfer) across most of its Ryzen processor line. We're getting reports that Intel plans to give at least its top-dog Core i9 "Whiskey Lake" 8-core socket LGA1151 processor a soldered IHS. The top three parts of this family were detailed in our older article.

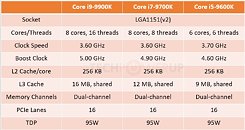

The first Core i9 "Whiskey Lake" SKU is the i9-9900K, an 8-core/16-thread chip clocked between 3.60 and 5.00 GHz, armed with 16 MB of L3 cache. The introduction of the Core i9 extension to the mainstream desktop segment could mean Intel is carving out a new price point for this platform, possibly above the $300-350 traditionally held by the top Core i7 "K" SKUs of previous generations. In related news, we are also hearing that the i9-9900K could be launched as early as 1st August, 2018. This would explain why motherboard manufacturers are in such a hurry to release BIOS updates for their current 300-series chipset motherboards.

Source: Coolaler


79 Comments on Intel Core i9 8-core LGA1151 Processor Could Get Soldered IHS, Launch Date Revealed

As for overclocking, we have yet to see what these particular chips can do... but aside from that, the bigger point is that Intel also killed overclocking on all but the most expensive chips. I am confident that if we could overclock anything we wanted, as we could up until Sandy Bridge, there would be far fewer users on this forum with the 8600K or 8700K; they would be using the much more affordable 8400 or an even lower model. I'd be perfectly happy with one of those $100-ish quad-core i3 chips running at 5GHz, but Intel killed that possibility.

Doesn't change the fact that they've used probably the worst possible TIM from Skylake onwards. We probably wouldn't be having this entire TIM vs. solder debate if Intel used higher-quality paste.

Intel has some of the best engineers, but they also have some of the best people for catering to the investors. The latter are higher in the hierarchy.

"Micro cracks in solder preforms can damage the CPU permanently after a certain amount of thermal cycles and time."

Nehalem is soldered. It was released 10 years ago. People are still pushing those CPUs hard to this day. They still work fine.

"Thinking about the ecology it makes sense to use conventional thermal paste."

Coming from the guy known for extreme overclocking in which your setup consumes close to 1kW.

I've only ever used single GPUs on the Zxx/Z1xx boards, but on X99 and such I've used anywhere from two to four GPUs. But things were different back in the days of the 920 D0s and the Classified motherboards I had then, I think.

GPUs can, in essence, run on however many PCIe lanes you allocate to them (up to their maximum, which is 16). As such, you can run a GPU off an x1 slot if you wanted to - and it would work! - but it would be bottlenecked beyond belief (except for cryptomining, which is why they use cheapo x1 PCIe risers to connect as many GPUs as possible to their rigs). However, the difference between x8 and x16 is barely measurable in the vast majority of games, let alone noticeable. It's usually in the 1-2% range.
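To put the lane-count argument into numbers, here is a small Python sketch of the theoretical one-direction bandwidth of a PCIe 3.0 link at each width, using the standard 8 GT/s per-lane signaling rate and 128b/130b encoding. It ignores packet and protocol overhead, so real throughput is somewhat lower; the function name is just illustrative.

```python
# PCIe 3.0 signals at 8 GT/s per lane and uses 128b/130b line encoding.
PCIE3_GT_PER_S = 8.0e9
ENCODING_EFFICIENCY = 128 / 130


def pcie3_bandwidth_mb_s(lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe 3.0 link in MB/s (10^6 bytes/s)."""
    if lanes not in (1, 2, 4, 8, 16):
        raise ValueError(f"unsupported link width: x{lanes}")
    bits_per_s = PCIE3_GT_PER_S * ENCODING_EFFICIENCY * lanes
    return bits_per_s / 8 / 1e6  # bits -> bytes -> MB


if __name__ == "__main__":
    for width in (1, 4, 8, 16):
        print(f"x{width:<2} ~ {pcie3_bandwidth_mb_s(width):8.1f} MB/s")
```

Halving the width halves the peak bandwidth, so an x8 slot still offers roughly 7.9 GB/s per direction; the small measured gap between x8 and x16 in games suggests most titles rarely come close to that ceiling.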

Intel's current product stack on the LGA1151 (300-series) socket ranges from the Celeron G4900 all the way up to the Core i7-8700K. The mobile processors are a completely different series and product stack.

Yes, removing the limit would be easy, but it hasn't become necessary. Even on their HEDT platform, the link hasn't become an issue. The fact is that, with the exception of some very extreme fringe cases, the QPI link between the chipset and the CPU isn't a bottleneck. The increased cost would be negligible, but so would the increase in performance.

The drive is never connected to the CPU on the mainstream platform. Data will flow from the NIC directly to the drive through the PCH. The QPI link to the CPU never comes into play. And even if it did, a 10Gb NIC isn't coming close to maxing out the QPI link. It would use roughly a third of it.

Yes, you are correct, I was wrong about those bits not using PCI-E lanes from the PCH. But that still leaves 16 or 17 dedicated PCI-E lanes coming off the PCH even with SATA, USB 3.0, and a Gb NIC. So combined with the lanes from the CPU, you still get 32 PCI-E lanes, more than enough for two graphics cards, two M.2 drives, and extra crap.
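On LGA1151 boards, the CPU-to-PCH link the commenters call QPI is Intel's DMI 3.0, which is electrically comparable to a PCIe 3.0 x4 link. A rough Python sketch, assuming DMI 3.0 behaves like PCIe 3.0 x4 and ignoring Ethernet and PCIe protocol overhead, of how much of that link a 10GbE NIC would take at full line rate:

```python
# Assumption: DMI 3.0 ~ PCIe 3.0 x4 (8 GT/s per lane, 128b/130b encoding).
LANE_MB_S = 8.0e9 * (128 / 130) / 8 / 1e6  # ~984.6 MB/s per lane, per direction
DMI3_MB_S = 4 * LANE_MB_S                  # ~3938 MB/s per direction


def link_share(device_gbit_s: float, link_mb_s: float = DMI3_MB_S) -> float:
    """Fraction of one direction of the link a device uses at full line rate."""
    device_mb_s = device_gbit_s * 1e9 / 8 / 1e6  # Gb/s -> MB/s
    return device_mb_s / link_mb_s


if __name__ == "__main__":
    share = link_share(10.0)  # 10GbE at line rate, before Ethernet overhead
    print(f"10GbE uses about {share:.0%} of DMI 3.0 in one direction")
```

10 Gb/s works out to 1250 MB/s, or roughly 32% of the link in one direction, which leaves headroom for a single NIC but not much once fast drives share the same path.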

There's no good reason to deliberately make them run hot. Sure it might "work", it might be "in spec", but they can do better than that. I've seen many times in the past that the lifetime of electronics is positively affected when they run nice and cool. I've also seen the lifetime of electronics affected negatively due to poorly designed, hot running and/or insufficiently cooled garbage.

Solder vs TIM doesn't matter to 99% of the buyers.

That said, I reiterate that though I've been slamming Intel pretty hard over the thermal paste, they should also include better coolers. I think, even if soldered, that coaster cooler is okay for the really low power chips like Celeron or Pentium, but once you get up to i3 territory, they should at least use the full height cooler.

It's a shame... Intel has some great silicon, but they ruin it with their poor design choices when it comes to cooling. It's like they made a Ferrari engine, and put it in this:

Still though, they listed a dinky thermal product for Kaby Lake, if I recall. Which is still crazy.

The majority of users don't even know what CPU they have.

But I was sweating bullets when I opened my 7900X. The delid turned out great.

medium.com/@OpenSeason/soldered-cpu-vs-cheap-paste-59fb96a4fca7

It is a bit funny (IMO), this whole debate, in particular the part about Intel being so much faster. Yes, maybe, in synthetic tests and benchmarks - but when it comes to real life, who the f... can see whether a game is running 2 fps faster on Intel vs. AMD?? It's like all people do is run benchmarks all day long.....

As for a 10GbE link not saturating the QPI link, you're right, but you need to take into account overhead and imperfect bandwidth conversions between standards (10GbE controllers have a max transfer speed of ~1000MB/s, which only slightly exceeds the 985MB/s theoretical max of a PCIe 3.0 x1 connection, but the controllers still require an x4 connection - there's a difference between internal bandwidth requirements and external transfer speeds), as well as bandwidth issues when transferring from multiple sources across the QPI link. You can't simply bunch all the data going from the PCH to the CPU or RAM together regardless of its source - the PCH is essentially a PCIe switch, not some magical data-packing controller (that would add massive latency and all sorts of decoding issues). If the QPI link is transferring data from one x4 device, it'll have to wait to transfer data from any other device. Of course, switching happens millions if not billions of times a second, so "waiting" is a weird term to use, but it's the same principle as non-MU-MIMO Wi-Fi: you might have a 1.7Gb/s max theoretical speed, but when the connection is constantly rotating between a handful of devices, performance drops significantly, both because each device only gets a fraction of each second and because some performance is lost in the switching. PCIe is quite latency-sensitive, so PCIe switches prioritize fast switching over fancy data-packing methods.
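The time-sharing argument can be illustrated with a toy model: devices behind a shared link take turns, and every handover between them costs a fixed fraction of time. This is a deliberately simplified, hypothetical model; the `switch_overhead` value is an illustrative parameter, not a measured DMI or PCIe figure, and real PCIe traffic is arbitrated packet by packet rather than in whole time slots.

```python
def per_device_throughput(link_mb_s: float, active_devices: int,
                          switch_overhead: float) -> float:
    """Toy round-robin model of a shared link (hypothetical, illustrative only).

    link_mb_s       -- raw link bandwidth in MB/s
    active_devices  -- number of devices all transferring at once
    switch_overhead -- assumed fraction of time lost to handovers, in [0, 1)
    """
    if active_devices < 1:
        raise ValueError("need at least one active device")
    if not 0.0 <= switch_overhead < 1.0:
        raise ValueError("switch_overhead must be in [0, 1)")
    # A single device never hands the link over, so no switching cost applies.
    overhead = switch_overhead if active_devices > 1 else 0.0
    usable = link_mb_s * (1.0 - overhead)
    # Remaining time is split evenly between the active devices.
    return usable / active_devices


if __name__ == "__main__":
    link = 3938.0  # roughly DMI 3.0 / PCIe 3.0 x4, one direction
    for n in (1, 2, 3, 4):
        mb_s = per_device_throughput(link, n, switch_overhead=0.05)
        print(f"{n} device(s): ~{mb_s:.0f} MB/s each")
```

Even in this crude model, two NVMe drives reading at once would each see well under 2 GB/s, which is the kind of contention the comment is pointing at.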

And, again: unless your main workload is copying data back and forth between drives, it's going to have to cross the QPI link to reach RAM and the CPU. If you're doing video or photo editing, that's quite a heavy load. Same goes for any type of database work, compiling code, and so on. For video work, a lot of people use multiple SSDs for increased performance - not necessarily in RAID, but as scratch disks and storage disks, and so on. We might not be quite at the point where we're seeing the limitations of the QPI link, but we are very, very close.

My bet is that Intel is hoping to ride this out until PCIe 4.0 or 5.0 reaches the consumer space (the latter seems the likelier, given that it's arriving quite soon after 4.0, which has barely reached server parts), so that they won't have to spend any extra money on doubling the lane count. And they still might make it, but they're riding a very fine line here.

As I said in my previous post: you can't just lump all the lanes together like that. The PCH has six x4 controllers, four of which (IIRC) support RST - and as such, either SATA or NVMe. The rest can support NVMe drives, but are never routed as such (an m.2 slot without RST support, yet still coming off the chipset, would be incredibly confusing). If your motherboard only has four SATA ports, then those occupy one of these RST-capable controllers, leaving three. If there are more (1-4), two are gone - you can't use any remaining PCIe lanes for an NVMe drive when there are SATA ports running off the controller. A 10GbE NIC would occupy one full x4 controller - but with some layout optimization, you could hopefully keep that off the RST-enabled controllers. But most boards with 10GbE also have a normal (lower-power) NIC, which also needs PCIe - which eats into your allocation. Then there's USB 3.1 Gen 2, which needs a separate controller on Z370 and is integrated on most other 300-series chipsets, but requires two PCIe lanes per port (most controllers are 2-port, PCIe x4) no matter what (the difference is only whether the controller is internal or external). Then there's Wi-Fi, which needs a lane, too.

In short: laying out all the connections, making sure nothing overlaps too badly, that everything has bandwidth, and that the trade-offs are understandable (insert an m.2 in slot 2, you disable SATA4-7, insert an m.2 in slot 3, you disable PCIe_4, and so on) is no small matter. The current PCH has what I would call the bare minimum for a full-featured high-end mainstream motherboard today. They definitely don't have PCIe to spare. And all of these devices need to communicate with and transfer data to and from the CPU and RAM.
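The juggling described above amounts to a lane budget. A minimal Python sketch, assuming the six x4 groups (24 lanes) mentioned in the comment; the board layout and per-device lane counts are hypothetical, loosely following the comment (x4 for 10GbE and for a two-port USB 3.1 Gen 2 controller, x1 for a second NIC and for Wi-Fi). It only totals lanes and ignores which groups can do RST or SATA.

```python
from typing import Dict

# Assumption from the comment: the PCH exposes six groups of four lanes.
PCH_GROUPS = 6
LANES_PER_GROUP = 4


def lanes_left(devices: Dict[str, int]) -> int:
    """Return how many PCH lanes remain after allocating `devices`.

    Each value is the lane count a device needs. A negative result means
    the layout is over budget and something must share lanes or be disabled.
    """
    used = sum(devices.values())
    return PCH_GROUPS * LANES_PER_GROUP - used


if __name__ == "__main__":
    # Hypothetical board layout, not taken from any specific motherboard.
    board = {
        "m.2 slot 1 (NVMe x4)": 4,
        "m.2 slot 2 (NVMe x4)": 4,
        "SATA ports 0-3": 4,  # one x4 group given over to SATA
        "10GbE NIC": 4,
        "1GbE NIC": 1,
        "USB 3.1 Gen 2 controller (2 ports)": 4,
        "Wi-Fi": 1,
    }
    remaining = lanes_left(board)
    print(f"lanes left for slots and extras: {remaining}")
    if remaining < 0:
        print("over budget: something has to share lanes or be disabled")
```

With this example layout only two lanes remain, which is roughly the "no PCIe to spare" situation the comment describes.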