Wednesday, February 13th 2019

NVIDIA DLSS and its Surprising Resolution Limitations

TechPowerUp readers today were greeted with our PC port analysis of Metro Exodus, which also contained a dedicated section on NVIDIA RTX and DLSS technologies. The former brings real-time ray tracing support to an already graphically intensive game, and the latter attempts to offset the performance hit via NVIDIA's new proprietary alternative to more traditional anti-aliasing. There was definitely a bump in performance with DLSS enabled; however, we also noted some head-scratching limitations on when and how it can even be enabled, depending on the in-game resolution and RTX GPU employed. We then set about testing DLSS on Battlefield V, which was also available from today, and it was then that we noticed a trend.

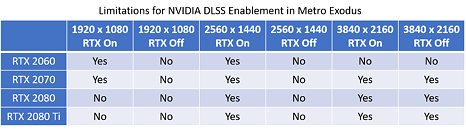

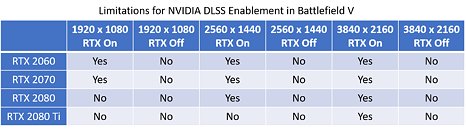

Take Metro Exodus first, with the relevant notes in the first image. DLSS can only be turned on for a specific combination of RTX GPUs ranging from the RTX 2060 to the RTX 2080 Ti, but NVIDIA appears to be limiting users to a class-based system. Users with the RTX 2060, for example, can't even use DLSS at 4K and, more egregiously, owners of the RTX 2080 and 2080 Ti cannot enjoy RTX and DLSS simultaneously at the most popular in-game resolution of 1920x1080, which would be useful to reach high FPS rates on 144 Hz monitors. Battlefield V has a similar, and yet even more divided, system wherein the gaming flagship RTX 2080 Ti cannot be used with RTX and DLSS at even 1440p, as seen in the second image. This brought us back to Final Fantasy XV's own DLSS implementation last year, which was all or nothing at 4K resolution only. What could have prompted NVIDIA to carry this out? We speculate further past the break.

We contacted NVIDIA about this to get word straight from the green horse's mouth, hoping to be able to provide a satisfactory answer to you. Representatives for the company told us that DLSS is most effective when the GPU is at maximum workload, such that if a GPU is not being challenged enough, DLSS is not going to be made available. Accordingly, this implementation encourages users to turn on RTX first, thus increasing the GPU load, and only then enable DLSS. It would thus be fair to extrapolate why the RTX 2080 Ti does not get to enjoy DLSS at lower resolutions, where perhaps it is not being taxed as hard.

We do not buy this explanation, however. Turning off VSync alone results in uncapped frame rates, which allow for a GPU load nearing 100%. NVIDIA has been championing high refresh rate displays for years now, and our own results show that we need the RTX 2080 and RTX 2080 Ti to get close to 144 FPS at 1080p, for that sweet 120+ Hz refresh rate display action. Why not let the end user decide what takes priority here, especially if DLSS aims to improve graphical fidelity as well? It was at this point that we went back to the NVIDIA whitepaper on their Turing microarchitecture, briefly discussed here for those interested.

DLSS, as it turns out, operates on a frame-by-frame basis. A Turing microarchitecture-based GPU has shader cores for gaming, tensor cores for large-scale compute/AI load, and RT cores for real-time ray tracing. The load that DLSS places on the GPU falls predominantly on the tensor cores. Effectively, a higher FPS in a game thus means a higher load on the tensor cores, since more frames have to be processed every second. The different GPUs in the NVIDIA GeForce RTX family have different numbers of tensor cores, which limits how many frames/pixels can be processed in a unit of time (say, one second). This variability in the number of tensor cores is likely the major reason for said implementation of DLSS. With their approach, it appears that NVIDIA wants to make sure that the tensor cores never become the bottleneck during gaming.
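To put rough numbers on that reasoning, here is a minimal Python sketch. It assumes, purely for illustration, that the DLSS pass costs a fixed amount of tensor-core time per frame; the `assumed_dlss_ms` value is a hypothetical placeholder and not a measured figure. The function names are ours and do not correspond to any NVIDIA API.

```python
# Illustrative arithmetic only: the per-frame DLSS cost used here is a
# hypothetical placeholder, not a figure measured on any RTX card.

RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def frame_time_ms(fps):
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / fps


def dlss_share(fps, dlss_ms):
    """Fraction of each frame's time budget taken by a fixed-cost DLSS pass."""
    return dlss_ms / frame_time_ms(fps)


def pixels_per_second(width, height, fps):
    """Output pixels that must be produced every second at a given frame rate."""
    return width * height * fps


if __name__ == "__main__":
    assumed_dlss_ms = 1.5  # hypothetical per-frame cost, for illustration only
    for fps in (60, 100, 144):
        share = dlss_share(fps, assumed_dlss_ms)
        print(f"{fps} FPS: {frame_time_ms(fps):.2f} ms per frame, DLSS share {share:.0%}")
    for name, (width, height) in RESOLUTIONS.items():
        print(f"{name} at 60 FPS: {pixels_per_second(width, height, 60):,} pixels/s")
```

Under that assumption, the same DLSS pass takes up more than twice the share of the frame budget at 144 FPS as it does at 60 FPS, which is consistent with the idea that a card with fewer tensor cores could become the bottleneck at high frame rates.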

Another possible reason comes via Futuremark's 3DMark Port Royal benchmark for ray tracing. It recently added support for DLSS, and is a standard-bearer for how RTX and DLSS can work in conjunction to produce excellent results. Port Royal, however, is an extremely scripted benchmark using pre-determined scenes to make good use of the machine learning capabilities integrated in DLSS. Perhaps this initial round of DLSS in games is following a similar mechanism, wherein the game engine is being trained to enable DLSS on specific scenes at specific resolutions, and not in a resolution-independent way.

Regardless of the underlying cause, all in-game DLSS implementations so far have come with some small print attached. That sours what is ultimately a free bonus: DLSS appears to work well when it can be enabled, providing at least an additional dial for users to fine-tune their desired balance of visual experience and game FPS.

102 Comments on NVIDIA DLSS and its Surprising Resolution Limitations

Sorry, that doesn't make any sense. Why would they limit 2080Ti's at 1080 or even 1440 then? 2080Ti have the horsepower on the tensor cores to run it without bottleneck.

I still think the ability is focused where it is needed, however, as just about any card can get 60 FPS in the FF XV bench at 2560x1440 or lower. Why waste resources when it isn't needed?

If no one wants to develop for DX12, where do we go? Are we on DX11 for years to come because no publishers want to invest in future tech? Do we need to wait until there is a revolutionary tech that publishers can't ignore? Are RTX and DLSS those features? Doesn't seem so...

DX12 certainly is a more efficient API and should increase fps when used properly (we've already seen this is not always the case).

But this is not attractive to the customer. He doesn't care about a few fps.

RTRT is a totally different animal. It's qualitative rather than quantitative. It really changes how games look.

I mean: if we want games to be realistic in some distant future, they will have to utilize RTRT.

Does RTRT require 3D APIs to become more low-level? I don't know. But that's the direction DX12 went. And it's a good direction in general. It's just that DX12 is really unfriendly for the coders, so:

1) the cost is huge

and

2) this most likely leads to non-optimal code and takes away some of the gains.

But since there could actually be a demand for RTRT games, at least the cost issue could go away. And who knows... maybe next revision of DX12 will be much easier to live with.

The biggest gainers among the top 25 in the last month according to Steam HW Survey were (by order of cards out there): 4th place 1070 (+0.18%), entire R7 series (+0.19%), 21st place RX 580 (+0.15%) and 24th place GTX 650 (+0.15%) ... Biggest losers were the 1st place 1060 (-0.52%) and 14th place GTX 950 (-0.19%). The 2070 doubled its market share to 0.33% ... and the 2080 is up 50% to 0.31% share which kinda surprised me. The RX Vega (includes combined Vega 3, Vega 6, Vega 8, RX Vega 10, RX Vega 11, RX Vega 56, RX Vega 64, RX Vega 64 Liquid, and apparently, Radeon VII) made a nice 1st showing at 0.16%. Also interesting that the once dominant 970 will likely drop below 3% in the next month.

I thought about that for a bit. If we use 5% as the cutoff for discussion, then all we can talk about is technology that shows its benefits for:

1920 x 1080 = 60.48%

1366 x 768 = 14.02%

Even 2560 x 1440 is in use by only 3.97% .... 2160p is completely off the table as it is used by only 1.48 %. But don't we all want to "move up" at some point in the near future ?

The same arguments were used when the automobile arrived, unreliable, will never replace the horse ! .... and most other technologies. I'm old enough to remember when it was said "Bah, who would ever actually buy a color TV ?" Technology advances much like human development, "walking is stoopid, all I gotta do is whine and momma will carry me ... " . I sucked at baseball my 1st year; I got better (a little). I sucked at football my 1st year (got better each year I played). I sucked at basketball my 1st year, was pretty good by college. Technology advances slowly, we find what works and then take it as far as it will go ... eventually, our needs outgrow the limits of the tech in use and we need new tech. Where's Edison's carbon filament today ? When any tech arrives, in its early iterations, expect it to be less efficient, less cost effective but it has room to grow. Look at IPS ... when folks started thinking "Ooh IPS has more accurate color, let's use it for gaming" ... turned out it wasn't a good idea by any stretch of the imagination.

But over time, the tech advanced, AU Optronics screens came along and we had a brand new gaming experience. Should IPS development have been shut down because less than 5% of folks were using it (at least properly and satisfactorily) ? My son wanted an IPS screen for his photo work (which he spent $1250 on) thinking it would be OK for gaming ... 4 months later he had a 2nd (TN) monitor as the response time and lag drove him nutz and every time he went into a dark place, he'd get dead cause everyone and everything could see him long before he could see them from the IPS glow. Now, when not on one of those AU screens, it feels like I am eating oatmeal but w/o any cinnamon, maple syrup, milk or anything else which provides any semblance of flavor.

But if we're going to say that what is being done by < 5% of gamers doesn't matter, then we are certainly saying that we should not be worrying about a limitation that does not allow a 2080 Ti owner to use a feature at 1080p. That's like buying a $500 tie to wear with a $99 suit

if they allowed dlss at 4k without rtx (all were hoping for this)

then 2060 would be perfectly capable of 4k gaming

so no one would buy 2070 2080 2080ti

I guess sometime users would be able to unlock dlss without the above limitations

the gain or loss in image quality is a VERY serious matter, promising performance gains and butchering image quality is stealing and fraud

If the UI itself is instantly changing settings based on selected settings, then that is beyond what a game profile for a driver can usually do. The game has to be using an API of some kind that tests settings against the game profile. One could perhaps run the game and check modules to see if it is loading some NVIDIA branded library specific to RTX to do that.

To give you an example of hype, I remember when AMD released Morphological Filtering in its drivers. It was kind of a hype way back. It's still available in the drivers, but when I enable it, the picture quality doesn't look right. lol