Wednesday, February 13th 2019

NVIDIA DLSS and its Surprising Resolution Limitations

TechPowerUp readers today were greeted to our PC port analysis of Metro Exodus, which also contained a dedicated section on NVIDIA RTX and DLSS technologies. The former brings in real-time ray tracing support to an already graphically-intensive game, and the latter attempts to assuage the performance hit via NVIDIA's new proprietary alternative to more-traditional anti-aliasing. There was definitely a bump in performance from DLSS when enabled, however we also noted some head-scratching limitations on when and how it can even be enabled, depending on the in-game resolution and RTX GPU employed. We then set about testing DLSS on Battlefield V, which was also available from today, and it was then that we noticed a trend.

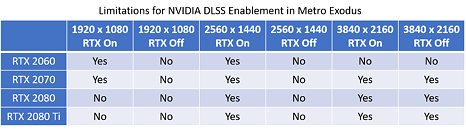

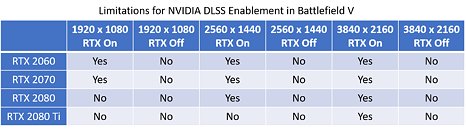

Take Metro Exodus first, with the relevant notes in the first image below. DLSS can only be turned on for a specific combination of RTX GPUs ranging from the RTX 2060 to the RTX 2080 Ti, but NVIDIA appear to be limiting users to a class-based system. Users with the RTX 2060, for example, can't even use DLSS at 4K and, more egregiously, owners of the RTX 2080 and 2080 Ti can not enjoy RTX and DLSS simultaneously at the most popular in-game resolution of 1920x1080, which would be useful to reach high FPS rates on 144 Hz monitors. Battlefield V has a similar, and yet even more divided system wherein the gaming flagship RTX 2080 Ti can not be used with RTX and DLSS at even 1440p, as seen in the second image below. This brought us back to Final Fantasy XV's own DLSS implementation last year, which was all or nothing at 4K resolution only. What could have prompted NVIDIA to carry this out? We speculate further past the break.We contacted NVIDIA about this to get word straight from the green horse's mouth, hoping to be able to provide a satisfactory answer to you. Representatives for the company told us that DLSS is most effective when the GPU is at maximum work load, such that if a GPU is not being challenged enough, DLSS is not going to be made available. Accordingly, this implementation encourages users to turn on RTX first, thus increasing the GPU load, to then enable DLSS. It would thus be fair to extrapolate why the RTX 2080 Ti does not get to enjoy DLSS at lower resolutions, where perhaps it is not being taxed as hard.

We do not buy this explanation, however. Turning off VSync alone results in uncapped frame rates, which allow for a GPU load nearing 100%. NVIDIA has been championing high refresh rate displays for years now, and our own results show that we need the RTX 2080 and RTX 2080 Ti to get close to 144 FPS at 1080p, for that sweet 120+ Hz refresh rate display action. Why not let the end user decide what takes priority here, especially if DLSS aims to improve graphical fidelity as well? It was at this point where we went back to the NVIDIA whitepaper on their Turing microarchitecture, briefly discussed here for those interested.

DLSS, as it turns out, operates on a frame-by-frame basis. A Turing microarchitecture-based GPU has shader cores for gaming, tensor cores for large-scale compute/AI load, and RT cores for real-time ray tracing. As load on the GPU is applied, relevant to DLSS, this is predominantly on the tensor cores. Effectively thus, a higher FPS in a game means a higher load on the tensor cores. The different GPUs in the NVIDIA GeForce RTX family have a different number of tensor cores, and thus limit how many frames/pixels can be processed in a unit time (say, one second). This variability in the number of tensor cores is likely the major reason for said implementation of DLSS. With their approach, it appears that NVIDIA wants to make sure that the tensor cores never become the bottleneck during gaming.

Another possible reason comes via Futuremark's 3DMark Port Royal benchmark for ray tracing. It recently added support for DLSS, and is a standard bearer to how RTX and DLSS can work in conjunction to produce excellent results. Port Royal, however, is an extremely scripted benchmark using pre-determined scenes to make good use of the machine learning capabilities integrated in DLSS. Perhaps this initial round of DLSS in games is following a similar mechanism, wherein the game engine is being trained to enable DLSS on specific scenes at specific resolutions, and not in a resolution-independent way.

Regardless of what is the underlying cause, all in-game DLSS implementations so far have come with some small print attached, that sours the ultimately-free bonus of DLSS which appears to work well - when it can- providing at least an additional dial for users to play with, to fine-tune their desired balance of visual experience to game FPS.

Take Metro Exodus first, with the relevant notes in the first image below. DLSS can only be turned on for a specific combination of RTX GPUs ranging from the RTX 2060 to the RTX 2080 Ti, but NVIDIA appear to be limiting users to a class-based system. Users with the RTX 2060, for example, can't even use DLSS at 4K and, more egregiously, owners of the RTX 2080 and 2080 Ti can not enjoy RTX and DLSS simultaneously at the most popular in-game resolution of 1920x1080, which would be useful to reach high FPS rates on 144 Hz monitors. Battlefield V has a similar, and yet even more divided system wherein the gaming flagship RTX 2080 Ti can not be used with RTX and DLSS at even 1440p, as seen in the second image below. This brought us back to Final Fantasy XV's own DLSS implementation last year, which was all or nothing at 4K resolution only. What could have prompted NVIDIA to carry this out? We speculate further past the break.We contacted NVIDIA about this to get word straight from the green horse's mouth, hoping to be able to provide a satisfactory answer to you. Representatives for the company told us that DLSS is most effective when the GPU is at maximum work load, such that if a GPU is not being challenged enough, DLSS is not going to be made available. Accordingly, this implementation encourages users to turn on RTX first, thus increasing the GPU load, to then enable DLSS. It would thus be fair to extrapolate why the RTX 2080 Ti does not get to enjoy DLSS at lower resolutions, where perhaps it is not being taxed as hard.

We do not buy this explanation, however. Turning off VSync alone results in uncapped frame rates, which allow for a GPU load nearing 100%. NVIDIA has been championing high refresh rate displays for years now, and our own results show that we need the RTX 2080 and RTX 2080 Ti to get close to 144 FPS at 1080p, for that sweet 120+ Hz refresh rate display action. Why not let the end user decide what takes priority here, especially if DLSS aims to improve graphical fidelity as well? It was at this point where we went back to the NVIDIA whitepaper on their Turing microarchitecture, briefly discussed here for those interested.

DLSS, as it turns out, operates on a frame-by-frame basis. A Turing microarchitecture-based GPU has shader cores for gaming, tensor cores for large-scale compute/AI load, and RT cores for real-time ray tracing. As load on the GPU is applied, relevant to DLSS, this is predominantly on the tensor cores. Effectively thus, a higher FPS in a game means a higher load on the tensor cores. The different GPUs in the NVIDIA GeForce RTX family have a different number of tensor cores, and thus limit how many frames/pixels can be processed in a unit time (say, one second). This variability in the number of tensor cores is likely the major reason for said implementation of DLSS. With their approach, it appears that NVIDIA wants to make sure that the tensor cores never become the bottleneck during gaming.

Another possible reason comes via Futuremark's 3DMark Port Royal benchmark for ray tracing. It recently added support for DLSS, and is a standard bearer to how RTX and DLSS can work in conjunction to produce excellent results. Port Royal, however, is an extremely scripted benchmark using pre-determined scenes to make good use of the machine learning capabilities integrated in DLSS. Perhaps this initial round of DLSS in games is following a similar mechanism, wherein the game engine is being trained to enable DLSS on specific scenes at specific resolutions, and not in a resolution-independent way.

Regardless of what is the underlying cause, all in-game DLSS implementations so far have come with some small print attached, that sours the ultimately-free bonus of DLSS which appears to work well - when it can- providing at least an additional dial for users to play with, to fine-tune their desired balance of visual experience to game FPS.

102 Comments on NVIDIA DLSS and its Surprising Resolution Limitations

This explains why high end cards can't use DLSS at lower resolutions.

Lower-end cards on the other hand have so few tensor cores, that they can't keep up with the high resolution to begin with. This doesn't explain why the 2060 can use DLSS at 4k in Battlefield, though.

In any case, RTX takes care to keep FPS down so the tensor cores can keep up.

Kind of makes sense to me. Introducing new paradigms and having all the necessary resources on-die to support them don't come in the same generation.

That was quite a load of fertilizer about needing more GPU load, more like, they need frame times to be high enough so the end users don't notice.

alt theory DLSS requires a certain level of training and training is resolution dependent

How can one test that it's actually a TC limitation?

How they say so.

It's still a developing technology, only time will tell if this is a permanent limitation or something that will be addressed in future drivers and/or patches for supporting games.

Either way, I'm very happy with the results, I'm not a competitive gamer, so I don't really care for frame rates beyond 100FPS, I'm just enjoying the fact that now I can really play BFV at 4K with everything maxed out and a smooth frame rate.

I listened to the advise of many people in the forum and now I make sure to leave my PC on from time to time so all games and drivers are up to date, so to me it was a really nice bonus to be able to enable this feature before I came to work today, the cherry on top you could say lol.

Both DLSS and RTX are still in their infancy, I have a feeling competitive players would disable either function to get the max performance out of any given game.

1440p with DLSS would be nice too.... so we can hit that 200fps mark

Thought at least one of ngreedia new techs are worthwhile, seems i was wrong.

Is it perfect? Is it what we'll be using in 5 years? Hell no. It's just a first iteration of a new tech. Support it if you want, ignore it if you think it's too expensive. Whining about it and reapeatedly pointing out flaws is childish though.