Wednesday, February 13th 2019

NVIDIA DLSS and its Surprising Resolution Limitations

TechPowerUp readers today were greeted with our PC port analysis of Metro Exodus, which also contained a dedicated section on NVIDIA RTX and DLSS technologies. The former brings real-time ray tracing support to an already graphically intensive game, and the latter attempts to offset the performance hit via NVIDIA's new proprietary alternative to more traditional anti-aliasing. There was definitely a performance bump with DLSS enabled, but we also noted some head-scratching limitations on when and how it can be enabled at all, depending on the in-game resolution and the RTX GPU employed. We then set about testing DLSS in Battlefield V, where it also became available today, and it was then that we noticed a trend.

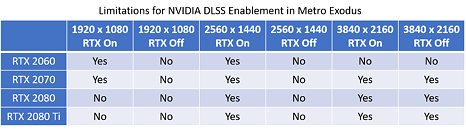

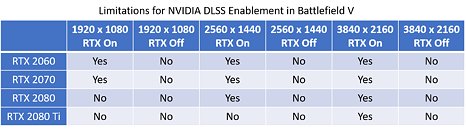

Take Metro Exodus first, with the relevant notes in the first image above. DLSS can only be turned on for specific combinations of GPU and resolution across the RTX 2060 to RTX 2080 Ti range, and NVIDIA appears to be limiting users to a class-based system. Users with the RTX 2060, for example, cannot use DLSS at 4K at all and, more egregiously, owners of the RTX 2080 and 2080 Ti cannot enjoy RTX and DLSS simultaneously at the most popular in-game resolution of 1920x1080, a combination that would be useful for reaching high frame rates on 144 Hz monitors. Battlefield V has a similar, yet even more restrictive system wherein the gaming flagship RTX 2080 Ti cannot be used with RTX and DLSS even at 1440p, as seen in the second image above. This brought us back to Final Fantasy XV's own DLSS implementation last year, which was all or nothing at 4K resolution only. What could have prompted NVIDIA to do this?

We contacted NVIDIA about this to get word straight from the green horse's mouth, hoping to provide you with a satisfactory answer. Company representatives told us that DLSS is most effective when the GPU is at maximum workload, so if a GPU is not being challenged enough, DLSS is not made available. Accordingly, this implementation encourages users to turn on RTX first, increasing the GPU load, and only then enable DLSS. It would thus be fair to extrapolate that this is why the RTX 2080 Ti does not get DLSS at lower resolutions, where it is presumably not being taxed as hard.
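The restrictions described above can be written down as a simple lookup. The sketch below is a hypothetical Python illustration, not NVIDIA's or the games' actual logic: the names `REPORTED_BLOCKED` and `dlss_reported_available` are invented here, and the table records only the combinations this article says are blocked for DLSS with RTX enabled (reading Battlefield V's "even 1440p" as implying 1080p is blocked too), plus Final Fantasy XV's 4K-only rule. Anything the article does not cover returns `None` rather than a guess.

```python
from typing import Optional, Tuple

Resolution = Tuple[int, int]  # (width, height)

RES_1080P: Resolution = (1920, 1080)
RES_1440P: Resolution = (2560, 1440)
RES_2160P: Resolution = (3840, 2160)

# Hypothetical table: (game, GPU, resolution) combinations the article reports
# as unavailable for DLSS together with RTX. Nothing beyond the text is encoded.
REPORTED_BLOCKED = {
    ("Metro Exodus", "RTX 2060", RES_2160P),
    ("Metro Exodus", "RTX 2080", RES_1080P),
    ("Metro Exodus", "RTX 2080 Ti", RES_1080P),
    ("Battlefield V", "RTX 2080 Ti", RES_1440P),
    ("Battlefield V", "RTX 2080 Ti", RES_1080P),  # implied by "even 1440p"
}

# Games whose DLSS was offered at a single resolution only.
SINGLE_RESOLUTION_ONLY = {"Final Fantasy XV": RES_2160P}


def dlss_reported_available(game: str, gpu: str, resolution: Resolution) -> Optional[bool]:
    """False if the article reports the combination as blocked, None if it does not say."""
    only = SINGLE_RESOLUTION_ONLY.get(game)
    if only is not None and resolution != only:
        return False
    if (game, gpu, resolution) in REPORTED_BLOCKED:
        return False
    return None  # not covered by the article; could go either way
```

Returning `None` instead of `True` for unlisted combinations keeps the sketch honest: the article only documents where the toggle is greyed out, not a full availability matrix.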

We do not buy this explanation, however. Turning off VSync alone results in uncapped frame rates, which allow for a GPU load nearing 100%. NVIDIA has been championing high-refresh-rate displays for years now, and our own results show that it takes an RTX 2080 or RTX 2080 Ti to get close to 144 FPS at 1080p, for that sweet 120+ Hz refresh-rate display action. Why not let the end user decide what takes priority here, especially if DLSS aims to improve graphical fidelity as well? It was at this point that we went back to NVIDIA's whitepaper on the Turing microarchitecture, briefly discussed here for those interested.
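To put the high-refresh target in numbers: a new frame on every refresh of a 144 Hz display leaves only 1000/144 ≈ 6.94 ms to render each frame, against roughly 16.67 ms at 60 Hz. A minimal sketch of that arithmetic (the function name is our own):

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Milliseconds available per frame if every display refresh gets a new frame."""
    return 1000.0 / refresh_hz


for hz in (60, 120, 144):
    print(f"{hz:>3} Hz -> {frame_budget_ms(hz):.2f} ms per frame")
```

This prints 16.67 ms, 8.33 ms and 6.94 ms for 60, 120 and 144 Hz respectively, which is why an uncapped 1080p scenario on an RTX 2080 or 2080 Ti is anything but a light load.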

DLSS, as it turns out, operates on a frame-by-frame basis. A Turing-based GPU has shader cores for gaming, tensor cores for large-scale compute/AI workloads, and RT cores for real-time ray tracing. The load DLSS places on the GPU falls predominantly on the tensor cores, and since every rendered frame has to pass through them, a higher frame rate in a game means a higher load on the tensor cores. The different GPUs in the NVIDIA GeForce RTX family have different numbers of tensor cores, which limits how many frames/pixels each can process per unit time (say, one second). This variability in tensor core count is likely the major reason for this implementation of DLSS. With this approach, NVIDIA appears to want to make sure that the tensor cores never become the bottleneck during gaming.
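The bottleneck hypothesis above can be expressed as a rough capacity check: DLSS work per second grows with frames per second times pixels per frame, while capacity grows with tensor core count. The sketch below is only our speculation in code form. The tensor core counts are the cards' published figures, but `OPS_PER_OUTPUT_PIXEL`, `OPS_PER_CORE_PER_SECOND` and the 90% `headroom` threshold are made-up placeholders, and the linear scaling (ignoring clock speeds and memory) is an assumption, not how NVIDIA actually decides.

```python
# Tensor core counts of the Turing GeForce RTX cards discussed in the article.
TENSOR_CORES = {
    "RTX 2060": 240,
    "RTX 2070": 288,
    "RTX 2080": 368,
    "RTX 2080 Ti": 544,
}

# Hypothetical constants, chosen only to illustrate the shape of the model.
OPS_PER_OUTPUT_PIXEL = 1_000       # tensor work DLSS spends per output pixel (made up)
OPS_PER_CORE_PER_SECOND = 1.0e9    # sustained throughput of one tensor core (made up)


def tensor_utilization(gpu: str, width: int, height: int, fps: float) -> float:
    """Fraction of the card's tensor-core capacity DLSS would need at this frame rate."""
    work_per_second = fps * width * height * OPS_PER_OUTPUT_PIXEL
    capacity = TENSOR_CORES[gpu] * OPS_PER_CORE_PER_SECOND
    return work_per_second / capacity


def dlss_allowed(gpu: str, width: int, height: int, expected_fps: float,
                 headroom: float = 0.9) -> bool:
    """Offer DLSS only if the tensor cores would stay below the headroom threshold."""
    return tensor_utilization(gpu, width, height, expected_fps) <= headroom


print(dlss_allowed("RTX 2060", 3840, 2160, 30))      # utilization ~1.04 -> False
print(dlss_allowed("RTX 2080 Ti", 1920, 1080, 144))  # utilization ~0.55 -> True
```

With these invented constants, the RTX 2060 at 4K and 30 FPS lands above the threshold while the RTX 2080 Ti at 1080p does not; since the real per-pixel cost is unknown, the sketch says nothing about where NVIDIA actually draws the line, only how a core-count-based gate would behave.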

Another possible reason comes via Futuremark's 3DMark Port Royal benchmark for ray tracing. It recently added support for DLSS, and it is a standard bearer for how RTX and DLSS can work in conjunction to produce excellent results. Port Royal, however, is an extremely scripted benchmark that uses predetermined scenes to make good use of the machine learning capabilities integrated in DLSS. Perhaps this initial round of DLSS in games follows a similar mechanism, wherein DLSS is trained for each game on specific scenes at specific resolutions, rather than in a resolution-independent way.
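If DLSS really is trained per game and per resolution, as speculated above, availability reduces to whether a matching trained network exists. The following is a hypothetical sketch of that idea: the registry, the `find_dlss_model` function, the game name and the model file name are all invented for illustration, and nothing here reflects NVIDIA's actual driver or game integration.

```python
from typing import Dict, Optional, Tuple

Resolution = Tuple[int, int]  # (width, height)

# Hypothetical registry: one trained network per (game, output resolution).
# There is deliberately no generic fallback that works at any resolution.
TrainedModels = Dict[Tuple[str, Resolution], str]


def find_dlss_model(registry: TrainedModels, game: str, resolution: Resolution) -> Optional[str]:
    """Return the model trained for this exact game and resolution, or None."""
    return registry.get((game, resolution))


registry: TrainedModels = {
    ("Example Game", (3840, 2160)): "example_game_2160p.model",  # made-up entry
}

print(find_dlss_model(registry, "Example Game", (3840, 2160)))  # example_game_2160p.model
print(find_dlss_model(registry, "Example Game", (2560, 1440)))  # None -> toggle greyed out
```

Under this model, a missing entry simply means the option cannot be offered, which would look exactly like the resolution-specific gaps seen in-game.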

Regardless of the underlying cause, every in-game DLSS implementation so far has come with some small print attached, which sours the ultimately free bonus of DLSS. It appears to work well when it can, providing at least an additional dial for users to play with to fine-tune their desired balance between visual quality and frame rate.

102 Comments on NVIDIA DLSS and its Surprising Resolution Limitations

Typical buyer (at least inside the mind of nVidia):

- "This new tech sounds awesome! No way I'm gonna miss out on this bandwagon. Just to be safe I'll buy a more expensive model, so I can enjoy this new stuff and not have to worry about performance hits, resolutions or whatever. Unlimited power!"

Yep, bamboozled.

This is the core of my RTX-hate. Cost. DLSS has a very shaky business case, and RTX even more so, both for the industry and for Nvidia itself. It's a massive risk for everyone involved, and for all the work all this deep learning and brute forcing is supposed to 'save', other work is created to keep Nvidia busy and developers struggling. And the net profit? I don't know... looking at those Metro screenshots I sure as hell don't prefer the RTX world they show, and the benefit of DLSS is far too situational.

Physically and logically the screenshots with RTX do look more correct but that does not have a direct relevance to looking better or more playable. As you said, some of these screenshots - especially with closed off indoor areas - are too dark. If you think about it, it makes perfect sense. But it does not make the area good to go through in the game if you cannot see anything.

The more I see of this technology and its effects, the more I get convinced it really serves a niche, if that, at the very best. It's unusable in competitive gaming, it's not very practical in any dark (immersive?) gameplay setting, which happens to be the vast majority of them, and it doesn't play well with existing lighting systems either.

Dead end is dead...

There is one light source that can't have its placement changed and that's the Sun (global illumination). Incidentally, global illumination is exactly what Metro uses RTX for. As cards become more powerful, they'll be able to handle both global and point light sources. But even then you'll still be able to claim rasterization looks better to you because... well... there's no metric for that.

Honestly, if it objectively looks better I'm converted, but so far, the only instance of that has been a tech demo. And only ONE of them at that, the rest wasn't impressive at all, using low poly models or simple shapes only to show it can be done in real time.

But yeah, interesting editorial...thought provoking. :)

I keep harping on it, but all the virtues of DX12 we were told were coming to games have not happened yet, so I don't hold my breath for these. Of the new tech introduced, I think DLSS has the best chance to be successful as it gets tuned.

I don't understand what the benefit would be, honestly. If you are already at high frames, what do you need it for? At this point, it lowers image quality to the point where you could tweak your settings to achieve the same effect.

Would it be simply to say that I run 'ultra' vs saying I run 'mostly high'?

Another aspect I think we might overlook is the engine itself. I can totally understand that some engines are more rigid in their resolution scaling and support/options/features. Case in point with Metro Exodus: it can only run in fullscreen at specific resolutions locked to desktop res.

Spot on. It's all about money in the end. That is also why I think there is a cost aspect to DLSS, and the adoption rate ties into that in the most direct way for Nvidia. It also supports my belief that this tech won't take off under DXR. The lacking adoption of DX12 has everything to do with workforce and money, too, and not with complexity. If it's viable, complexity doesn't matter, because all that is, is additional time (and time is money). Coding games for two radically different render techniques concurrently is another major time sink.

This really lowers the appeal of it and limits its usage, especially for the weaker cards, though it's limiting for the stronger cards at the same time. It's like they are trying to tie it strictly to ultra-high resolution and/or RTRT only. The thing is, I don't think people who bought the cards with DLSS in mind bought them with that handicap in the back of their heads. If it's as bad as it sounds, this is almost akin to the 3.5GB issue.

Sniper Elite 4, Shadow of Tomb Raider. With some concessions, Rise of Tomb Raider and Hitman with latest patches and drivers.

There are some DX12-only games with good performance like Forzas or Gears of War 4 but we have no other APIs in them to compare to.

Metro Exodus seems to be one of the titles where DX12 at least does not hurt which is a good thing (as weird as that may sound).

I am probably missing 1-2 games here but not really more than that.

As an AMD card user, there are a bunch of games where you can get a benefit from using DX12, but not all DX12 games: Division, Deus Ex: Mankind Divided, Battlefields.

As an Nvidia card user you can generally stick to DX11 for the best. With very few exceptions.

DX12 hasn't really offered anything interesting for the consumer. A few fps more is not enough. And yes, it's harder to code and makes game development more expensive.

RTRT could be the thing that DX12 needs to become an actual next standard.

Call me the pessimist here... but the state of this new generation of graphics really doesn't look rosy. It's almost impossible to do a fair like-for-like comparison with all these techniques that are proprietary and on a per-game basis. Nvidia is creating tech that makes everything abstract, which is obviously as much a way to hide problems as it is a solution.