Monday, August 19th 2019

Minecraft to Get NVIDIA RTX Ray-tracing Support

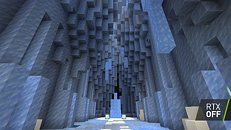

Minecraft is the perfect gaming paradox. It's a stupidly popular title with simple graphics that can run on practically any Windows machine, yet it supports the latest 3D graphics APIs such as DirectX 12. The title now adds another feather to its technical cap with support for NVIDIA RTX real-time ray tracing. RTX will be used to render realistic light shafts through path tracing, along with global illumination, shadows, ambient occlusion, and simple reflections. "Ray tracing sits at the center of what we think is next for Minecraft," said Saxs Persson, Franchise Creative Director of Minecraft at Microsoft. "RTX gives the Minecraft world a brand-new feel to it. In normal Minecraft, a block of gold just appears yellow, but with ray tracing turned on, you really get to see the specular highlight, you get to see the reflection, you can even see a mob reflected in it."

NVIDIA and Microsoft have yet to announce a release date for this feature update. It remains to be seen how hardcore crafters will take to this feature. Looking at images 1 and 2 (below), we can see that the added global illumination / bloom blurs out objects in the distance. This gives crafters the impression that the draw distance is somehow affected. Crafters demand the highest possible draw distance, with nothing blurring their view. We can't wait to try this out ourselves to see how RTX affects very large-scale crafting. A video presentation by NVIDIA follows.

66 Comments on Minecraft to Get NVIDIA RTX Ray-tracing Support

Java is so outdated for this kind of thing...

And it wouldn't take that much time... With a good small team, maybe 5-6 months?

Minecraft is not that complex. Once the graphical first layer is mastered, the rest is really easy even for a beginner.

Bleh, seems like a waste of time/resources. Then again, the Nvidia CEO did say we should be buying cards that support RTRT... maybe this was why?

Amazing how we got to the point of criticizing game improvements just because the order of the day is to bash everything Nvidia-related.

Sad times indeed...

That said, I would not touch Minecraft with a 10ft pole today, but ok.

Aha, so it's going to be platform-limited to their PC/X1 versions, and it won't be feasible on any other port. Definitely not going the Super Duper way then, that's good.

Don't confuse a lack of enthusiasm with hate ;) The reason it might be seen as hate is that a certain group seems to think you have to be standing there cheering on Nvidia for all the greatness they bring to our lives, or something. Against that, almost everyone who thinks otherwise looks like 'a hater'.

Surprise, there is a middle ground, where you just wait it out and judge things by what they are to you and you alone, not what they are for an internet movement. And if you take that stance, it's pretty difficult to be cheering for Nvidia right now.

It doesn't make any sense in my opinion; it would be like criticizing OLED just because someone may not like the leading manufacturer, LG, for example.

Simply put, we don't like paying for technology we can't use yet. It'd be like selling us a 4K TV without any 4K content available. Or a QLED TV that tries really hard to make us think it's actually OLED, if we keep to your example :)

And something could definitely be said about LG patenting OLED the way it did and locking it down entirely for the rest of the market, at the same time relegating Samsung to its QLED and MicroLED research, which is in principle inferior to it. Nvidia didn't do quite that, but due to its timing (and high-end monopoly), the effect is rather close: there is only one supplier of the hardware.

What I mean is: the Java version should not exist at all as the "base game". Is part of it written in C++, C or Assembly? I'm pretty damn sure some parts are.

But the core of the engine rests on Java.

It's cool for object visualization, but not for a game.

That, and as a Java dev, Java is not the old engine's issue. Notch learning to code was.

Java is a RAM hog, but it is actually pretty performant and has world-class multithreading (which Notch never used).

The whole modern version of the game is.

www.reuters.com/article/us-nvidia-gaming/microsoft-nvidia-team-up-for-more-realistic-visuals-on-minecraft-game-idUSKCN1V90HS

NVIDIA is apparently making a big deal out of this.

There are lots of problems with using Java for anything. #1 is that OpenGL gets poor performance on AMD cards, because AMD's OpenGL focus is on professional software. #2 is Java-specific things: the lack of unsigned integer types can cause serious memory and/or performance problems, because you're either forced to use types twice as wide (with half of the range going unused) or you're constantly converting between types to work around the fact that nothing is unsigned. #3 is that JVM performance is generally not as good as .NET Framework performance. When the same code is run on both, .NET is usually a great deal faster.

The UWP version of Minecraft... is locked into Microsoft's ecosystem, whereas the Java version isn't. They both suck for their own reasons.

At last they got their money's worth :D

I could be wrong; I didn't dive into this since it's RTX, meh... And this is a game that could be ported to the NES, meh.

Besides, even if that were true, unsigned integer arithmetic was introduced with Java 8, which is nearly EOL by now...

This I don't disagree with, but... that wasn't the point either.
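For readers following the unsigned-integer exchange, here is a minimal sketch, assuming Java 8 or later. Java still has no unsigned primitive types; what Java 8 added are static helper methods on the existing wrapper classes (`Byte.toUnsignedInt`, `Integer.toUnsignedLong`, `Integer.toUnsignedString`, `Integer.divideUnsigned`, `Integer.compareUnsigned`) that reinterpret a signed value's bits as unsigned. The class name `UnsignedDemo` and the `legacyUnsignedByte` helper are hypothetical, for illustration only.

```java
// Hypothetical demo class: shows pre-Java 8 masking versus the Java 8 unsigned helpers.
class UnsignedDemo {

    // Hypothetical helper: the pre-Java 8 idiom of masking a signed byte
    // into a wider int to read its unsigned value (e.g. an ID stored as 0xFF).
    static int legacyUnsignedByte(byte b) {
        return b & 0xFF;
    }

    public static void main(String[] args) {
        byte raw = (byte) 0xFF;              // stored as -1 in Java's signed byte
        int viaMask = legacyUnsignedByte(raw); // 255, still needs a wider int
        int viaHelper = Byte.toUnsignedInt(raw); // Java 8 helper, also 255
        System.out.println(raw + " -> " + viaMask + " / " + viaHelper);

        int big = 0xFFFFFFFF;                // -1 as a signed int, 4294967295 as unsigned
        System.out.println(big > 1);                            // signed comparison: false
        System.out.println(Integer.compareUnsigned(big, 1) > 0); // unsigned comparison: true
        System.out.println(Integer.toUnsignedString(big));      // 4294967295
        System.out.println(Integer.divideUnsigned(big, 2));     // 2147483647
        long widened = Integer.toUnsignedLong(big);             // explicit widening to long
        System.out.println(widened);                            // 4294967295
    }
}
```

The helpers avoid hand-written masking and sign tricks, but values are still stored in signed types, so the "constantly converting" complaint is softened rather than eliminated.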

Even a similar game, Rising World, built on the JVM, has scrapped future development on the JVM in favor of Unity. Video games on the JVM are a rare breed for a reason.

Even Microsoft and NVIDIA overlooked the JVM version in favor of the UWP version because NVIDIA has no intention of supporting RTX on OpenGL.