Monday, February 10th 2020

Intel Xe Graphics to Feature MCM-like Configurations, up to 512 EU on 500 W TDP

A reportedly leaked Intel slide, via Digital Trends, has given us a load of information on Intel's upcoming take on the high-performance graphics accelerator market, in both its server and consumer iterations. Intel's Xe has already been cause for much discussion in a market that has only really seen two competitors for ages now. The arrival of a third player with the muscle and brawn of Intel against the established players NVIDIA and AMD would surely spark competition in the segment, and competition is the lifeblood of advancement, as we've recently seen with AMD's Ryzen CPU line.

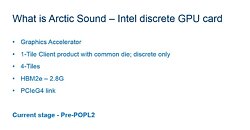

The leaked slide reveals that Intel will be looking to employ a Multi-Chip-Module (MCM) approach for its high-performance "Arctic Sound" graphics architecture. The GPUs will be available in configurations of up to four tiles (the name Intel is giving each module), which will be joined via Foveros 3D stacking (first employed in Intel Lakefield). The slide shows Intel's lineup starting with a 1-tile GPU (with only 96 of its 128 total EUs active) for the entry-level market at 75 W TDP, à la the DG1 SDV (Software Development Vehicle). Then we move to the midrange market with a 1-tile, 128 EU unit (150 W), a 2-tile, 256 EU unit (300 W) for enthusiasts, and finally a 4-tile unit with up to 512 EUs, a 400-500 W beast reserved only for the Data Center. The slide (assuming it's legitimate) lists a 48 V input voltage for this last part, which isn't available on consumer solutions, hence the Data Center designation. Intel's design gives each EU (at least on paper) the equivalent of eight graphics processing cores. That's a lot of addressable hardware, but we'll see if both the performance and power efficiency are there in the final products. We hope they are.
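To put those figures side by side, here is a rough back-of-the-envelope sketch in Python. It only multiplies out the numbers reported from the slide (128 EUs per tile, eight "cores" per EU, and the quoted TDPs). The tier labels, the `summarize` helper and the watts-per-EU figure are our own illustrative assumptions, not anything Intel has published, and none of it says anything about real-world performance.

```python
# Figures as reported from the leaked slide; unconfirmed by Intel.
EUS_PER_TILE = 128   # total EUs on one tile
CORES_PER_EU = 8     # "equivalent of eight graphics processing cores" per EU

# (label, tiles, active EUs, (min TDP in W, max TDP in W))
# Labels are our own shorthand for the market tiers named in the article.
CONFIGS = [
    ("entry level", 1, 96, (75, 75)),
    ("midrange", 1, 128, (150, 150)),
    ("enthusiast", 2, 256, (300, 300)),
    ("data center", 4, 512, (400, 500)),
]


def summarize(configs):
    """Return one dict per configuration with derived totals."""
    rows = []
    for label, tiles, eus, (tdp_lo, tdp_hi) in configs:
        rows.append({
            "label": label,
            "tiles": tiles,
            "active_eus": eus,
            "max_eus": tiles * EUS_PER_TILE,      # EUs physically present
            "cores": eus * CORES_PER_EU,          # core-equivalents, per the slide
            "watts_per_eu": (tdp_lo / eus, tdp_hi / eus),
        })
    return rows


if __name__ == "__main__":
    for row in summarize(CONFIGS):
        lo, hi = row["watts_per_eu"]
        print(f'{row["label"]:<12} {row["tiles"]} tile(s), '
              f'{row["active_eus"]}/{row["max_eus"]} EUs, '
              f'{row["cores"]} cores, {lo:.2f}-{hi:.2f} W per EU')
```

By this arithmetic the 4-tile part would expose 4,096 core-equivalents, and the quoted TDPs work out to roughly 0.8 to 1.2 W per active EU across the lineup, with the usual caveat that TDP and EU count alone say little about delivered performance.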

Sources:

Digital Trends, via Videocardz

50 Comments on Intel Xe Graphics to Feature MCM-like Configurations, up to 512 EU on 500 W TDP

and I thought rocket lake was stupid.

I'm hoping for competition as much as the next guy, but let's see if they can get the baby steps right and make a viable dGPU that people might want to buy first.

After all, if it's not a success, Intel will just can it and all of these roadmap ideas will be archived like Larrabee was.

And please don't say at Samsung, TSMC, etc. There is a vast difference not only in the process of making the chips but also in the type of transistors they make, whether they're CMOS, etc.

Also, is no one going to make mention about how this "leaked slide" looks like it's from the mid 2000s?

This "Intel is going to produce a discrete GPU" thing keeps coming up every 5 years or so and never materializes because Intel is a CPU company.

edit: After a little searching, Intel has stated that the GPU will be built on its 10nm+ process. This is more proof that this is never going to happen, because every leak about Intel's 10nm and 10nm+ says it's a dumpster fire.

Intel does far more than CPUs - there is the foundry, Flash/SSDs, XPoint, all kinds of NICs (including 5G, at least until recently), FPGAs, it has some foothold in AI/ML, bunch of interconnect stuff and I probably missed a few.

Nvidia is the goal post here, not AMD. The fight for 2nd place is moot because the real winner will be consumers.

At worst Intel produces a mediocre product but AMD falls behind because Intel does driver support very well. At best Intel GPUs handily beat AMD and slowly Radeon becomes irrelevant.