Thursday, July 9th 2020

NVIDIA Surpasses Intel in Market Cap Size

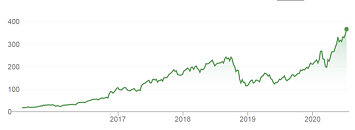

Yesterday, after the stock market closed, NVIDIA's market cap officially surpassed Intel's. In after-hours trading, NVIDIA (ticker: NVDA) stock was priced at $411.20, giving the company a market cap of 251.31B USD. It marks a historic day for NVIDIA, which has historically been smaller than Intel (ticker: INTC); in the past, when NVIDIA was much smaller, some even speculated that Intel could buy it. Intel's market cap now stands at 248.15B USD, slightly below NVIDIA's. However, market cap does not tell the whole story. NVIDIA's stock is fueled by the hype around machine learning and AI, while Intel's valuation does not rely on any possible bubble.

If we compare the revenues of both companies, Intel is far ahead. It had a revenue of 71.9 billion USD in 2019, while NVIDIA had 11.72 billion USD. Still, NVIDIA has clearly done a good job: its revenue grew by roughly 70%, from $6.91 billion in 2017 to $11.72 billion in 2019. That is an impressive feat, and market predictions are that the growth will not stop. With the recent acquisition of Mellanox, the company now has much bigger opportunities for expansion and growth.
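A quick check on the revenue figures quoted in this article (all in billions of USD): NVIDIA's 2019 revenue is about 1.7 times its 2017 revenue, an increase of roughly 70%, while Intel's 2019 revenue is still roughly six times NVIDIA's.

$$
\frac{11.72}{6.91} \approx 1.70, \qquad \frac{11.72 - 6.91}{6.91} = \frac{4.81}{6.91} \approx 0.70
$$

$$
\frac{71.9}{11.72} \approx 6.1
$$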


136 Comments on NVIDIA Surpasses Intel in Market Cap Size

Will fans of the green team ever understand how to properly compare hardware? Probably not, but one can only hope.

Yeah, I bet "rock solid stability" is definitely the first thing that pops into my head from that era, besides stuff like this: www.techpowerup.com/review/asus-geforce-gtx-590/26.html

This phenomenon is a complete mystery to them.

And why would you not include one with the 5700 XT, I wonder...

Nvidia's true intentions were totally clear when it chose to lock PhysX, even though PhysX was probably totally independent of the primary GPU used, probably because Ageia developed it that way and Nvidia didn't bother to make it incompatible with anything other than Nvidia GPUs. So with a simple patch you could unlock PhysX and play the few games that supported hardware PhysX with an AMD primary GPU, without any problems and with good framerates. I enjoyed Alice with a 4890 as the primary card and a 9600GT as a PhysX card. Super smooth, super fun. There was also a driver from Nvidia that accidentally came out without the PhysX lock. I think it was 256.xxx something.

Nvidia could have offered PhysX without official support in cases where the primary GPU was not an Nvidia one. They didn't.

www.codercorner.com/blog/?p=1129

You're ascribing information from 2010 to AMD's decision-making process in 2008. In 2008 AMD had a lot more reason to distrust Intel than Nvidia, and yet they had no problem supporting Intel's proprietary and locked standard (Havok).

AMD didn't just "not support" PhysX, they also explicitly backed Havok against it. It was a business move through and through, one that was clearly made to hurt Nvidia and stifle their development, which it did... but it also deprived their customers of a potential feature and stifled the industry's development.

This came after AMD had already made their intentions clear that they wouldn't play ball.

In any case, Nvidia could have left the PhysX feature unlocked and just shown a pop-up window during driver installation, informing users that there would be no customer support when a non-Nvidia GPU is used as primary, and that customer support and bug reports would be valid only for those using an Nvidia GPU as primary. Anyway, let me repeat something here: with a simple patch, PhysX ran without any problems with an AMD card as primary.

Nvidia GTX 30X0 burns like coal in hell.

Now, PhysX wasn't locked when it was meant to run on Ageia cards, before Nvidia took over. I would have objected to Ageia cards, and so would you, if I had seen developers throwing all physics effects onto the Ageia card and forcing people to buy one more piece of hardware when there were already multicore CPUs to do the job.

By the way, saying ALL the time that the other person posts bullshit is a red flag. You are flagging yourself as a total waste of time. You look like a brainless 8-year-old fanboy who just wants to win an argument when you keep saying that the other person posts bullshit. This is the simplest way to explain it to you.

If this convenient explanation makes you happy, no problem. Why spoil your happiness?

You just don't want to understand. You have an image in your mind that Nvidia is a company run by saints who want to push technology and make people happy. Maybe in another reality. It's funny that GameWorks even hurt performance on older series of Nvidia cards, but hey, Nvidia would have treated AMD cards fairly with its locked and proprietary code. You reject reality and then you ask what is it with us? And who are we? Are we a group? Maybe a group of non-believers?

Look at my system specs. My answer is there. I keep a simple GT 620 card in my system just so I can enjoy hardware PhysX effects in games like Batman, for example. When I enable software PhysX on a system with a 4th-gen quad-core i5 and an RX 580, the framerate goes down to single digits. That simple GT 620 is enough for fluid gaming. And no, I didn't have to install a patch because, as I said, Nvidia decided to remove the lock. Why did they remove the lock? Did they decide to support AMD cards by themselves? Throw a bone to AMD's customers? Maybe they finally came to an agreement with AMD? And by the way, why didn't they announce that lock removal? There was NO press release.

But things changed. PhysX became faster on the CPU; it had to, or Havok would have totally killed it. Only a couple of developers were choosing to take Nvidia's money and create games with hardware PhysX, where gamers using Intel or AMD GPUs had to settle for only minimal physics effects. No developer could justify going hardware PhysX in a world of CPUs with 4, 6, or 8 cores/threads. So Nvidia decided to offer a usable PhysX software engine. Its dream of making AMD GPUs look inferior through physics had failed.

As long as you reject reality, you will keep believing that we (the non-believers) post bullshit and lies.

Well, I checked the date. SDK 3.0 came out in June 2011. I guess programmers also need time to learn and implement it, so when did games using it come out? Probably when it was already clear that hardware PhysX wasn't going to become a standard.

Try again.

Ageia didn't have the connections, money, or power to enforce that. So even if they had wanted to, they couldn't. Also, PPUs were not something people were rushing to buy, so developers wouldn't cripple the game for 99% of their customers just to make 1% happy. Nvidia was a totally different beast, and they did try to enforce physics on their GPUs. You are NOT reading, or you just pretend not to read what I post.

It seems that you are a waste of time after all. What you don't like is not a lie. I could also call you a liar, but I am not 5 years old.

Oh, come on. You keep posting like a 5-year-old. I am just tired of quoting the parts of your posts where you make assumptions about what I think, what I mean, and where I intentionally lie.

You do reject reality. As for ignorance, it's your bliss.

So you had nothing to say here.

Well, nice wasting my time with you. Have a nice day.

Am I missing something about the whole "AI business", or is it rather straightforward number crunching?

By which brain-damaged metric? Dear God...

AMD probably could too, though it would likely end them.

The disparity between big money like that and Bitcoin is actually quite large.

Again, Nvidia completely supported porting GPU PhysX to Radeons.

gizmodo.com/nvidia-helping-modders-port-physx-engine-to-ati-radeon-5023150

You've made claims such as that Nvidia designed the CPU portion of PhysX to make the GPU portion look better, which is impossible because the CPU portion was written before GPUs were even part of the equation and was not even "designed" by Nvidia in the first place. When I pointed this out, did you clarify or correct your claim? No, you just moved on to more falsities. Are you saying that wasn't intentional?

You apparently didn't read the prior posts closely enough to work out what I meant by "you guys", and I'm the one... rejecting reality? You're not even making sense anymore.

It was already discussed before. Nvidia tried to extend their technology to AMD's products, but AMD said no way, go to hell, we are backing Intel... so Nvidia said no, YOU go to hell, and locked out their products in response. Check the dates: AMD acted in bad faith first by stringing Eran Badit and consumers along and then sinking the whole thing. Nvidia supporting the porting effort directly contradicts the core of your notions.