Thursday, July 9th 2020

NVIDIA Surpasses Intel in Market Cap Size

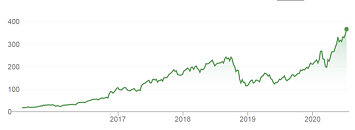

Yesterday, after the stock market closed, NVIDIA officially reached a larger market cap than Intel. After hours, NVIDIA (ticker: NVDA) stock was priced at $411.20, for a market cap of 251.31B USD. It marks a historic day for NVIDIA, as the company has historically been smaller than Intel (ticker: INTC); in the past, while NVIDIA was much smaller, some speculated that Intel could buy it. Intel's market cap now stands at 248.15B USD, slightly lower than NVIDIA's. However, market cap does not tell the whole story. NVIDIA's stock is fueled by the hype around Machine Learning and AI, while Intel is not relying on any possible bubbles.

If we compare the revenues of both companies, Intel is performing much better. It had revenue of 71.9 billion USD in 2019, while NVIDIA had 11.72 billion USD. No doubt NVIDIA has done a good job: it almost doubled its revenue, from $6.91 billion in 2017 to $11.72 billion in 2019. That is an amazing feat, and market predictions are that its growth is not stopping. With the recent acquisition of Mellanox, the company now has much bigger opportunities for expansion and growth.
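To put the figures quoted above side by side, here is a rough back-of-the-envelope sketch. It uses only the numbers from this article; the variable and function names are illustrative, and dividing an after-hours market cap by a full-year revenue figure is a simplification rather than a formal valuation metric.

```python
# Figures quoted in the article, in billions of USD.
NVDA_CAP = 251.31        # NVIDIA market cap (after hours)
INTC_CAP = 248.15        # Intel market cap
NVDA_REV_2017 = 6.91     # NVIDIA revenue, 2017
NVDA_REV_2019 = 11.72    # NVIDIA revenue, 2019
INTC_REV_2019 = 71.9     # Intel revenue, 2019


def growth_ratio(old: float, new: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


def cap_to_revenue(cap: float, revenue: float) -> float:
    """Return market cap divided by annual revenue (a crude multiple)."""
    return cap / revenue


cap_gap = NVDA_CAP - INTC_CAP
nvda_growth = growth_ratio(NVDA_REV_2017, NVDA_REV_2019)
nvda_multiple = cap_to_revenue(NVDA_CAP, NVDA_REV_2019)
intc_multiple = cap_to_revenue(INTC_CAP, INTC_REV_2019)

print(f"Market cap gap: {cap_gap:.2f}B USD")
print(f"NVIDIA revenue growth 2017 to 2019: {nvda_growth:.2f}x")
print(f"Cap-to-revenue, NVIDIA: {nvda_multiple:.1f}x, Intel: {intc_multiple:.1f}x")
```

On these numbers, NVIDIA's revenue grew by roughly 1.7x over the period, and the market values each dollar of NVIDIA revenue several times higher than a dollar of Intel revenue, which is why market cap alone is a poor proxy for company size.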

136 Comments on NVIDIA Surpasses Intel in Market Cap Size

give me a break. I suppose they'd better get back to working on graphics tho.

and what is true audio? but nvidia gets no credit for dx12 ultimate, huh. according to amd, the g-sync counterpart is called freesync premium

www.amd.com/en/technologies/free-sync

and still has no ulmb

2 Ermm no? unrelated entirely

3 Google is your friend?

4 Nvidia literally has nothing to do with that sooo no

5 Yes and? still no extra money, just extra function labeled for the consumer to understand.

gizmodo.com/nvidia-helping-modders-port-physx-engine-to-ati-radeon-5023150

For instance, the 'best' VA as per response times is the least expensive in the budget monitors review. It comes with FreeSync and its own strobing.

www.tftcentral.co.uk/reviews/budget_gaming_monitors_2020.htm#viewsonic_vx2458-c

Ageia was the company that made a revolutionary physics engine (sadly surpassing what we do today) that Nvidia bought and made proprietary to their hardware, and in doing so just killed it off.

No developer in their right mind would spend time and money on a game that would only work on Nvidia cards unless Nvidia would compensate them for the lost revenue that would have been gained from other platforms, which Nvidia never did so that never happened.

All PhysX became was a silly tacked-on gimmick in a handful of games like Borderlands 2: 99.9% the same game but with some orbs floating in the water....fantastic.

and didn't ageia require a dedicated card ?

gsync has nvidia's version of strobing. freesync does not.

lfc is only present in fs premium

Nvidia dropped all support for those dedicated cards after buying Ageia and then made it exclusive to their cards.

They even actively blocked users from running an Nvidia card as a dedicated physX card alongside an AMD card.....

continued and expanded....yeah that's why no game is built on gpu hardware accelerated physics right? it's only used as a cpu implementation, no more interesting than Havok which we have had for years.

Sure Nvidia allowed for the competition to use it, more money pls first though.

That is always what I have said, Nvidia should have just made it open for everyone to use/implement and even contribute to, with the only request/requirement being that at the start of a game it would say "PhysX by Nvidia" and that would be their advertisement for being the core support for this development.

That is the problem, AMD tries to better everything for everyone, Nvidia tries to better everything exclusively for itself, probably why they are doing so well as a company, but why I don't support them myself.

Control is literally an Nvidia tech demo....and if anything it's more about RTX than anything else so no, I have not seen a single video pointing out this "amazing physx implementation"

Yes Ageia required a dedicated card,

Man imagine what could have been! A dedicated graphics, physics and sound card, how fantastically better could game experiences be today if it wasn't for Nvidia buying Ageia and killing it, or Microsoft ending DirectSound with Vista.

but you haven't heard about it, so I must've been wrong. what :roll: lol, get a grip.

amd doesn't invest 1/10th of what nvidia does in pc gaming technologies

they lag behind more and more every gen and can't get their drivers up to snuff

Idk how your mind works with that response.

2 Not sure how that is hard to comprehend, you will have to be more specific with where I lost you.

3 Yes because they also have less than 1/10th to spend, but with what they spend they do a lot more for everyone than Nvidia does, who cares pretty much only about themselves unless forced otherwise.

AMD drivers were fine for a long time, just with this last gen they messed things up, Nvidia has had massive driver issues as well in the last 2 years and also a lot when I last used Nvidia cards, it was a complete mess.

And lag behind more and more every gen? HD5000 series was the better choice, HD6000 series was the better choice, HD7000 series was the better choice, RX480 was a fantastic card for the price and often the better choice, Vega 56 was often the better choice and right now the RX5700(XT) is the better choice than their Nvidia counterparts soooo yeah no.

But if this is going to devolve into some silly fanboy nonsense (inb4 "you are the fanboy here buddy") then you might as well just stop reacting.

lol,didn't see physics in control.

this game is all about environment destruction. dafuq.

and I wasn't talking about a century ago i.e. 5000 series.

ever since hawaii they got worse and worse. now all they got is an overpriced g106 counterpart that has no dx12 ultimate support like next gen consoles. so yeah, "better choice"

strobing at 120 is already way better than 144/165. also - better than no strobing, would you believe that?

I kind of understand where you stand, but mbr monitors at least come with led overcharge. That makes them brighter for faster phasing. :rolleyes:

display-corner.epfl.ch/index.php/BenQ_XL2540

and that's benq's own standard, not amd's, so I guess I'm not the one who's gotten himself into a corner

Hardware manufacturers know their hardware the best. Change my mind.

AMD uses a far superior production process (7nm) yet it can't compete with Nvidia on a 12nm node. It is not even in the same ballpark.

In addition, it seems all recent GPU architectures from AMD are just crap stability-wise. Where an Nvidia card is easy plug-n-play, with an AMD you have to undervolt, use a custom BIOS or jump through other hoops just to get a normally working card. This holds for Navi, Vega, Fury, and even Polaris seemed to have minor issues (huge compared to Nvidia's rock-solid stability). Even in the golden days for AMD GPUs (around the 5xxx/6xxx and 7) my good old HD 6850 was inferior to Nvidia's offering in the stability department (notorious for black screens and 'display-adapter-freezes' resulting in CTD).

The last great AMD card was the 7970 which they rebranded to infinity.

Don't get me wrong, I would love to buy a solid AMD card, but they don't even offer high-end or enthusiast cards. At this point I have higher hopes for Intel having success in the dGPU market than AMD, which makes me really sad.

Luckily Zen was a total hit.

and next time you barge in a conversation between two members you may wanna check what they were talking about

www.neowin.net/news/rumor-amd-tried-to-buy-nvidia-before-buying-ati/

Man, I'm so old and I remember all the hardware specs and news from decades ago but I don't remember what I did yesterday sigh ...