VMware Updates Licensing Model, Setting 32-Core Limit per License

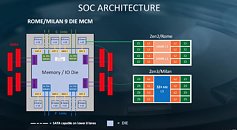

VMware, one of the most popular commercial virtualization solutions for businesses and the industry in general, has announced changes to its licensing model. From now on, licensees will have to acquire one license per 32 CPU cores, instead of one per socket as under the former model. This effectively means that users who migrated to AMD's 64-core EPYC CPUs, for instance, and who saved on both price per core and VMware licensing fees compared to Intel customers (who would need two sockets to achieve the same core count, and thus two licenses), are now being charged for two licenses for a single 64-core, AMD-populated socket. This had been a selling point for AMD: the company stated that its high-end EPYC processors could stand in for a dual-socket setup with a single processor, thanks to EPYC's I/O capabilities and core counts. VMware claims this change is in line with industry-standard pricing models.
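To put numbers on it, here is a rough sketch of the arithmetic, assuming each socket needs one license for every started block of 32 cores. The function name `licenses_needed` and the rounding-up rule are our own illustration of the model described above, not anything published by VMware.

```python
import math

# Per-license core limit described in the article.
CORES_PER_LICENSE = 32


def licenses_needed(cores_per_socket: int, sockets: int = 1) -> int:
    """Estimate licenses under the per-32-core model.

    Assumption: each socket is licensed separately, and a socket with
    more than 32 cores needs one extra license per additional block of
    up to 32 cores (i.e. the count is rounded up per socket).
    """
    if cores_per_socket <= 0 or sockets <= 0:
        raise ValueError("cores_per_socket and sockets must be positive")
    return sockets * math.ceil(cores_per_socket / CORES_PER_LICENSE)


if __name__ == "__main__":
    # Single 64-core socket versus two 32-core sockets.
    print(licenses_needed(64, sockets=1))
    print(licenses_needed(32, sockets=2))
    # A single 48-core socket.
    print(licenses_needed(48, sockets=1))
```

Under these assumptions, a single 64-core socket and a pair of 32-core sockets end up needing the same number of licenses, which is exactly the advantage the old per-socket model gave to high-core-count parts.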

Of course, this decision from VMware hits AMD the hardest, and it comes at a time when 48- and 64-core CPUs are already available in the market. If this licensing change had to be made, perhaps it should have been in line with the current state of the industry rather than following a quasi-random core count (it definitely isn't random, though, and I'll leave it at that). From VMware's perspective, AMD's humongous CPU core counts do affect its bottom line. The official release's claim that customers license software based on CPU counts may be valid, and VMware does allow for free licenses for servers past 32 cores until April 30, 2020. Of course, VMware is also preparing itself for future industry changes - Intel will obviously increase its core counts in response to AMD's EPYC attack on the expected core counts of professional applications.
