Friday, January 23rd 2015

GeForce GTX 970 Design Flaw Caps Video Memory Usage to 3.3 GB: Report

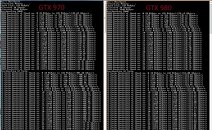

It may be the most popular performance-segment graphics card of the season, and offer unreal levels of performance for its $329.99 price, but the GeForce GTX 970 suffers from a design flaw, according to an investigation by power-users. GPU memory benchmarks run on GeForce GTX 970 show that the GPU is not able to address the last 700 MB of its 4 GB of memory.
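The 3.3 GB figure in the headline follows from the two numbers in the report. A minimal sketch of that arithmetic, assuming "4 GB" means 4096 MB and taking the roughly 700 MB unaddressed tail at face value (the function name and constants are illustrative and do not come from any benchmark tool):

```python
# Assumption: "4 GB" is 4096 MB, and ~700 MB is the unaddressed tail
# reported by the user-run memory benchmarks. Names are hypothetical.
TOTAL_MB = 4096
UNADDRESSED_MB = 700


def usable_gb(total_mb: int, unaddressed_mb: int) -> float:
    """Return the addressable portion of memory in GB (1 GB = 1024 MB)."""
    return (total_mb - unaddressed_mb) / 1024


usable = usable_gb(TOTAL_MB, UNADDRESSED_MB)
print(f"{usable:.1f} GB usable")
```

The result rounds to the 3.3 GB quoted in the headline; the exact boundary depends on how the benchmark measures the tail.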

User reports of micro-stutter on GTX 970 setups in VRAM-intensive gaming scenarios are being attributed to the "GTX 970 memory bug," as it's now being called on tech forums. The GeForce GTX 980, on the other hand, isn't showing signs of this bug; the card is able to address its entire 4 GB. When flooded with posts about the investigation on OCN, a forum moderator on the official NVIDIA forums responded: "we are still looking into this and will have an update as soon as possible."

Sources:

Crave Online, LazyGamer

192 Comments on GeForce GTX 970 Design Flaw Caps Video Memory Usage to 3.3 GB: Report

the thought that a team of engineers is scratching their heads over a gpu is laughable. it's not a robot or a space shuttle. at this point they are under pressure to do anything they can to avoid a recall, but if they can't, nvidia will just do what they have to and apologize.

For example a gtx 970 only uses ~40/64 rops, a gtx 580 only 32/48, gtx 780 32/48, etc.

What it comes down to is optimizations on the architecture level and tradeoffs they will most certainly have taken into account when designing the full and cut chips. They have spent way more R&D time on it than we have, and I'm sure they have a lot more resources to use too, so I don't feel we are in a position to question HOW they lay out their architecture. I am also quite sure that these kinds of issues exist with almost any architecture, especially with cut dies, both CPU and GPU (or any other processor for that matter).

BUT, and here is a big but (and it's underlined too, guess that makes it an important but...) I have to question NVidia's way of marketing this. OK, sure, there are 4GB of memory accessible to the GPU, and the memory bus operates at the stated speed, but I feel there should at least be a side note that not all of the memory is addressed at the stated speed. Then again, this complicates things for the less tech savvy, and results in more confusing numbers.

I think you'd run out of superscript/asterisk notations if you fully tried to explain* the finer points of IC architectures. Both vendors tout DX12 support - Nvidia is careful to append their support with "API", while AMD's fine print reads (paraphrased) "at this time- based on known specifications". AMD were very quick off the mark in publicizing the FX series as the world's first desktop 8-core CPU, but declined to mention the shared resources and compromises involved that separate it from truly being 8 independent cores.

What it comes down to in most instances is how much the user is affected within the space between truth and claims.

* I've always found it astounding the vast number of buyers that don't even read the spec sheet, let alone the fine print and reasons behind it. The number of people who buy based on a few marketing bullet points seems to far outweigh those who research their prospective purchases. Maybe it's the nature of an industry manipulated by built-in obsolescence (or its illusion).

Sleeping Dogs is an AMD Gaming Evolved title tailored to GCN and heavily coded for post-process compute - something Kepler wasn't particularly well suited for.

But seriously I hope Nvidia look into this ASAP. :pimp:

So who is going to feel the pain of this card as it ages? 144 hertz 1080p users? 60 hertz 1440p users? People with multi-monitor setups are seeing the issues now, but going forward, which consumers will be at risk?