Friday, March 22nd 2019

AMD Ryzen 3000 "Zen 2" BIOS Analysis Reveals New Options for Overclocking & Tweaking

AMD will launch its 3rd generation Ryzen 3000 Socket AM4 desktop processors in 2019, with a product unveiling expected mid-year, likely on the sidelines of Computex 2019. AMD is keeping its promise of making these chips backwards-compatible with existing Socket AM4 motherboards. To that end, motherboard vendors such as ASUS and MSI have begun rolling out BIOS updates with AGESA Combo 0.0.7.x microcode, which adds initial support for running and validating engineering samples of the upcoming "Zen 2" chips.

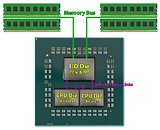

At CES 2019, AMD unveiled more technical details and a prototype of a 3rd generation Ryzen Socket AM4 processor. The company confirmed that it will use a multi-chip module (MCM) design even for its mainstream desktop processor, combining one or two 7 nm "Zen 2" CPU core chiplets with a 14 nm I/O controller die, connected over Infinity Fabric. The two biggest components of the I/O die are the PCI-Express root complex and the all-important dual-channel DDR4 memory controller. We bring you never-before-reported details of this memory controller.

AMD has two big reasons to take the MCM route even for its mainstream desktop platform. The first is that it lets the company mix and match silicon production technologies. AMD's bean-counters reckon it is more economical to build only the components that benefit from a shrink, namely the CPU cores, on the 7 nm process. Other components, such as the memory controller, can continue to be built on existing 14 nm technology, which by now is highly mature (and therefore cost-efficient). AMD is also competing with other companies for its share of 7 nm allocation at TSMC.

The 14 nm I/O controller die could, in theory, be sourced from GlobalFoundries to honor AMD's wafer-supply agreement. The second big reason is the economics of downscaling. AMD is expected to push CPU core counts beyond 8, and cramming 12-16 cores onto a single 7 nm die would make it costly to carve out cheaper SKUs by disabling cores, because not every die has faulty cores to harvest. These mid-range SKUs sell in higher volumes, so beyond a point AMD would be forced to disable perfectly functional cores. It makes more sense to build 8-core or 6-core chiplets and physically deploy only one chiplet on SKUs with 8 cores or fewer. This way AMD maximizes its utilization of precious 7 nm wafers.

The downside of this approach is that the memory controller is no longer physically integrated with the processor cores. The 3rd generation Ryzen processor (like all other Zen 2 CPUs) therefore has an "integrated-discrete" memory controller: it is physically located inside the processor package, but not on the same piece of silicon as the CPU cores. AMD isn't the first to come up with such a contraption. Intel's 1st generation Core "Clarkdale" processor took a similar route, with the CPU cores on a 32 nm die and the memory controller plus an integrated GPU on a separate 45 nm die.

Intel linked the two dies with its QuickPath Interconnect (QPI), which was cutting-edge at the time. AMD is tapping into Infinity Fabric, its latest high-bandwidth scalable interconnect, which is used extensively across its "Zen" and "Vega" product lines. We have learned that with "Matisse," AMD will introduce a new version of Infinity Fabric that offers twice the bandwidth of the first generation, up to 100 GB/s. AMD needs this because a single I/O controller die must now interface with up to two 8-core CPU dies on the desktop, and with up to 64 cores on its "EPYC" server SKUs.

Our resident Ryzen memory guru Yuri "1usmus" Bubliy took a close look at one of these BIOS updates with AGESA 0.0.7.x and found several new controls and options that will be exclusive to "Matisse," and possibly to the next-generation Ryzen Threadripper processors. AMD has changed the CBS section title from "Zen Common Options" to "Valhalla Common Options." This codename has surfaced on the web quite a bit over the past few days, associated with "Zen 2." We have learned that "Valhalla" could be the codename of the platform consisting of a 3rd generation Ryzen "Matisse" AM4 processor and a companion AMD 500-series chipset motherboard; that chipset is the successor to X470, which AMD is developing in-house rather than sourcing from ASMedia.

During serious memory overclocking, Infinity Fabric may be unable to keep up with the increased memory speed. Remember, Infinity Fabric runs at a frequency synchronized to memory: with DDR4-3200 memory (which actually runs at 1600 MHz), Infinity Fabric operates at 1600 MHz. This is the default on Zen, Zen+ and Zen 2 alike. Unlike earlier generations, however, the new BIOS offers UCLK options for "Auto", "UCLK==MEMCLK" and "UCLK==MEMCLK/2". The last option is new and will come in handy for achieving stability when overclocking memory, at the cost of some Infinity Fabric bandwidth.
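To make the ratios concrete, here is a small Python sketch of the clock arithmetic described above. It is our own illustration rather than anything taken from the BIOS: the function name `fabric_clocks` is hypothetical, and it only computes the nominal clocks implied by each UCLK option, not how the fabric actually behaves under load.

```python
def fabric_clocks(ddr_rating: int) -> dict:
    """Nominal clocks for a DDR4 rating, e.g. 3200 for DDR4-3200 (hypothetical helper).

    DDR transfers data on both clock edges, so the real memory clock
    (MEMCLK) in MHz is half the MT/s rating.
    """
    memclk = ddr_rating / 2
    return {
        "MEMCLK": memclk,
        "UCLK==MEMCLK": memclk,        # 1:1, the default described for Zen, Zen+ and Zen 2
        "UCLK==MEMCLK/2": memclk / 2,  # the new half-rate option
    }


for rating in (2133, 3200, 3600):
    print(f"DDR4-{rating}:", fabric_clocks(rating))
```

For DDR4-3200 this yields 1600 MHz in the default 1:1 mode and 800 MHz with "UCLK==MEMCLK/2", which is the bandwidth-for-headroom trade the new option makes.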

Precision Boost Overdrive (PBO) will receive more fine-grained control at the BIOS level, and AMD is making significant changes to the feature to make boost settings more flexible and to improve the algorithm. Early adopters of AGESA Combo 0.0.7.x on AMD 400-series chipset motherboards noticed that PBO broke or became buggy on their machines. This is because the new PBO algorithm is poorly integrated with the existing one used for "Pinnacle Ridge." AMD also implemented "Core Watchdog," a feature that resets the system when address or data errors destabilize the machine.

The "Matisse" processor will also give users finer control over active cores. Since the AM4 package carries up to two 8-core chiplets, you will be able to disable an entire chiplet, or reduce a chiplet's core count in steps of 2, because each 8-core chiplet consists of two 4-core CCXs (core complexes), much like existing AMD designs. At the chiplet level you can dial core counts down from 4+4 to 3+3, 2+2, and 1+1, but never asymmetrically, such as 4+0 (which was possible on first-generation Zen). AMD keeps CCX core counts symmetric for optimal utilization of the L3 cache and memory access. On the 64-core Threadripper, which has eight 8-core chiplets, you will be able to disable chiplets as long as at least two remain enabled.
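The symmetric-CCX rule above limits which core counts are reachable. The following Python sketch enumerates them under our reading of the rule: every enabled CCX keeps the same number of active cores, and whole chiplets can be switched off. The helper `valid_core_counts` and its parameters are hypothetical, and actual BIOS menus may expose fewer combinations.

```python
from itertools import product

CORES_PER_CCX = 4     # each CCX has four cores
CCX_PER_CHIPLET = 2   # each 8-core chiplet holds two CCXs


def valid_core_counts(min_chiplets: int = 1, max_chiplets: int = 2) -> list:
    """Total core counts reachable with symmetric CCX trimming (hypothetical helper).

    Assumption: all enabled CCXs share one active-core count (4+4, 3+3,
    2+2 or 1+1), and between min_chiplets and max_chiplets chiplets stay on.
    """
    totals = set()
    for chiplets, cores_per_ccx in product(range(min_chiplets, max_chiplets + 1),
                                           range(1, CORES_PER_CCX + 1)):
        totals.add(chiplets * CCX_PER_CHIPLET * cores_per_ccx)
    return sorted(totals)


print(valid_core_counts())                                # AM4: one or two chiplets
print(valid_core_counts(min_chiplets=2, max_chiplets=8))  # Threadripper case
```

With the defaults this gives 2, 4, 6, 8, 12 and 16 cores; passing `min_chiplets=2, max_chiplets=8` models the Threadripper constraint of keeping at least two chiplets enabled.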

CAKE, or "Coherent AMD Socket Extender," has received an additional setting: "CAKE CRC performance Bounds". AMD is implementing IFOP (Infinity Fabric On Package), the non-socketed version of IF, in three places on the "Matisse" MCM: the I/O controller die has 100 GB/s IFOP links to each of the two 8-core chiplets, and another 100 GB/s IFOP link connects the two chiplets to each other. For multi-socket implementations of "Zen 2," AMD will provide NUMA node controls, namely "NUMA nodes per socket," with options including "NPS0", "NPS1", "NPS2", "NPS4" and "Auto".

With "Zen 2," AMD is introducing a couple of major new DCT-level (DRAM controller) features. The first is called "DRAM Map Inversion," with options "Disabled", "Enabled" and "Auto". The motherboard vendor's description of this option reads: "Properly utilize the parallelism within a channel and DRAM device. Bits that flip more frequently should be used to map resources of greater parallelism within the system." The second is "DRAM Post Package Repair," with options "Enabled", "Disabled", and "Auto." This special mode (a JEDEC standard) lets the memory manufacturer increase DRAM yields by disabling bad memory cells and automatically replacing them with working ones from a spare area, similar to how storage devices map out bad sectors. We're not sure why such a feature is being exposed to end-users, especially in the client segment. Perhaps it will be removed on production motherboards.
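The vendor description of "DRAM Map Inversion" hinges on which address bits flip most often. As a rough illustration (not AMD's actual mapping logic, which the BIOS text doesn't reveal), this Python sketch counts bit toggles across a hypothetical stream of consecutive 64-byte cache-line accesses; `bit_toggle_counts` is our own helper name.

```python
def bit_toggle_counts(addresses: list, width: int) -> list:
    """Count how often each address bit changes between consecutive accesses."""
    counts = [0] * width
    for prev, cur in zip(addresses, addresses[1:]):
        diff = prev ^ cur  # set bits mark the positions that flipped
        for bit in range(width):
            if (diff >> bit) & 1:
                counts[bit] += 1
    return counts


# Hypothetical stream: 64 consecutive 64-byte cache lines, so the low 6
# bits (byte offset within a line) never change between accesses.
stream = [line * 64 for line in range(64)]
counts = bit_toggle_counts(stream, width=12)
for bit, n in enumerate(counts):
    print(f"bit {bit:2d}: {n} toggles")
```

In this stream the lowest cache-line index bit (bit 6) flips on every access, bit 7 on every second access, and so on, while the byte-offset bits never change. Frequently flipping bits like bit 6 are natural candidates for selecting channels or banks, since spreading consecutive accesses across those resources lets them work in parallel.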

We've also come across an interesting option related to the I/O controller that lets you select PCI-Express generation up to "Gen 4.0". Given that we're examining a 400-series chipset motherboard's firmware, this could indicate that some existing 400-series motherboards may receive PCI-Express Gen 4.0 support. We've heard through credible sources that AMD's PCIe Gen 4.0 implementation involves external re-driver devices on the motherboard, and these don't come cheap. Texas Instruments sells Gen 3.0 re-drivers for $1.50 apiece in 1,000-unit reel quantities. Since you need 20 of these re-drivers, one per lane, motherboard vendors will have to fork out at least $30 on socket AM4 motherboards with Gen 4.0 slots. We've also come across several other common controls, including "RCD Parity" and "Memory MBIST" (a new memory built-in self-test).
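The re-driver math is easy to check. This Python sketch multiplies out the figures above ($1.50 per Gen 3.0 re-driver, 20 lanes, one device per lane); `redriver_bom_cost` and its `lanes_per_device` parameter are hypothetical, the latter included only to show how a multi-lane part would change the count. Since the quoted price is for Gen 3.0 parts, treat the result as a floor for Gen 4.0 boards.

```python
def redriver_bom_cost(lanes: int, unit_price_usd: float, lanes_per_device: int = 1) -> float:
    """Re-driver bill-of-materials cost for a given lane count (hypothetical helper)."""
    devices = -(-lanes // lanes_per_device)  # ceiling division: partial devices round up
    return devices * unit_price_usd


print(redriver_bom_cost(20, 1.5))  # one re-driver per lane, as described above
```

With one device per lane, 20 lanes come to $30 in re-drivers alone.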

One of the firmware setup program pages is titled "SoC Miscellaneous Control," and includes the following settings, many of which are industry-standard:

- DRAM Address Command Parity Retry

- Max Parity Error Replay

- Write CRC Enable

- DRAM Write CRC Enable and Retry Limit

- Max Write CRC Error Replay

- Disable Memory Error Injection

- DRAM UECC Retry

- ACPI Settings:
  - ACPI SRAT L3 Cache As NUMA Domain
  - ACPI SLIT Distance Control
  - ACPI SLIT remote relative distance
  - ACPI SLIT virtual distance
  - ACPI SLIT same socket distance
  - ACPI SLIT remote socket distance
  - ACPI SLIT local SLink distance
  - ACPI SLIT remote SLink distance
  - ACPI SLIT local inter-SLink distance
  - ACPI SLIT remote inter-SLink distance

- CLDO_VDDP Control

- Efficiency Mode

- Package Power Limit Control

- DF C-states

- Fixed SOC P-state

- CPPC

- 4-link xGMI max speed

- 3-link xGMI max speed

73 Comments on AMD Ryzen 3000 "Zen 2" BIOS Analysis Reveals New Options for Overclocking & Tweaking

Not saying it is not good or not an improvement.

Well, if you think about it, if AMD is taking the approach TPU describes, Infinity Fabric 2 can't run at the same speed as the integrated memory controller if it wants to reach that very high bandwidth of 100 GB/s. If, for example, IF2 is tied to the memory controller speed, Zen 2 WILL face a huge latency penalty, IMO.

The original Infinity Fabric was tied to the memory controller for this reason. Personally, I don't think simply doubling the bandwidth of Infinity Fabric over the original Zen is going to be enough to fully offset latency issues. They need more than 100 GB/s, IMO.

For example, a system using DDR4-2133 would have the entire SDF (Scalable Data Fabric) plane operating at 1066 MHz. This is a fundamental design choice AMD made to eliminate clock-domain-crossing latency. This time around it ain't possible unless AMD jacks up DDR4 memory speed to well over 4500-5000+, if they plan on IF2 running at DDR4 speed.

Who knows, really. All I can say is AMD is VERY well aware of the Zen and Zen+ latency issues. Hopefully they've resolved them for the official Zen 2 launch.

If IF still has high latency, these are processors that will be good for heavy-duty work with large data sets. You will be able to play games on them, but probably not with performance as high as Intel's (round 2 of weak 1080p performance on Zen).

But this is all theory and nothing is known right now. I also hope that, besides the speed increase, latency will be good this time.

It's strange, but it seems to me the whole industry is going in the same direction: DDR4 has higher latency than DDR3, Haswell processors have more latency than Core 2 Duo, AMD has more cache and memory latency, etc.

Funny thing is that up to 2008 the trend was the reverse: both Intel and AMD developed integrated memory controllers, with Nehalem and Phenom.

1) Just the 2700X to 3700X 12-core

2) The processor AND my X470 K4

3) Processor, motherboard and my 32GB DDR4-3200 CL16 RAM

I did keep all options in mind when building my PC, but I'm just not sure what will limit performance and stability with Zen 2, especially if any extra features are limited to X570. Also, my RAM isn't 100% stable on my current mobo just yet, about 99% (it resets if there's a software failure, because the BIOS isn't too keen on it, and Ryzen Master is keeping it at 3200).

Hopefully a BIOS update and a new processor will solve my RAM stability issues at least, 'cause it turns out the K4 actually has built-in RGB lighting and it looks awesome in my PC!

I think motherboard vendors now know the potential of AM4 boards, and they will make even more models with great features.

P.S. Why hasn't a single Gigabyte board gotten the new BIOS?

AMD could sell all the broken cores/chiplets, and we could buy them cheaply. Everybody wins. :D

Check this out if you haven't already. It's perhaps one of my favourite breakdowns of cache memory; for Zen/Zen+, watch from the 23-minute mark. In short, the latency issues are not as bad as people think if you look at them from the right perspective. Basically, you need to look at Zen as having 8 MB of L3 cache per CCX rather than 16 MB of total L3 cache per chip. Often it's not a big issue, because data from main memory is copied only into the L1 and L2 caches (the L2 is inclusive of the L1), and the L3 cache is filled only when data is evicted from L2. When the L3 cache is full, the CCX goes back to main memory rather than to the other CCX's L3 cache. Normally that's OK, because the L3 mainly supports the L2, which is local to each core, so the performance impact is hardly a big deal, especially when the OS scheduler is aware of the memory configuration.

Also, in response to some of the other comments in this thread: I'm not sure this has anything to do with gaming performance compared to Intel. That is due more to the slight single-core advantage Intel has on its super-high-clocked models; otherwise we see AMD Ryzen doing excellently in multicore performance, which is, in theory, where you would expect to see a shortcoming.

With the latency and cross-migration issues highlighted, however, we can now speculate on what AMD can do to offset them and how an I/O die fits into all of this:

1. An I/O die could simply work as a scheduler that stores data addresses to ensure no redundancy takes place when you have multiple cores and data migration, so even if latency is higher, communication remains streamlined and manageable. When you have 4 dies on a module, as in Threadripper, each memory controller needs to connect with the 3 other dies via separate IF links, which is probably why the test in the video shows latency in line with main memory, indicating a fallback to main memory rather than going to the other CCX directly. This implementation would still be NUMA, but would work much better than previous MCM iterations, allowing some level of L3 utilization/sharing across all chiplets without always resorting to main memory.

2. The I/O chip could also include the memory controller rather than just IF interconnects and schedulers. This would mean the chiplet complex won't really need a NUMA configuration, and latencies would be normalized across all chiplets. I can see this having some drawbacks/trade-offs, but also a lot of cost-effectiveness in terms of the chiplet design. This implementation is most likely the case, because AMD already showed a 1-chiplet CPU that also had the I/O chip, which gives the impression that a single chiplet cannot function without the I/O chip.

3. AMD could double the L3 cache and retain the higher modularity per CCX. This keeps the older challenges but gives a larger buffer before needing to reach out to main memory or the other CCX's L3.

4. Make the L3 cache shared between the 2 CCXs on each chiplet, which adds design complexity in case of a one-CCX Zen 2 implementation (unless a one-CCX design keeps the same L3 cache size). However, we already saw the Zen APU (2400G) having 4 MB of L3 cache for its single CCX rather than 8 MB, so perhaps this is not a big concern for AMD. This implementation could pair 2 CCXs together without redesigning the whole CCX into an 8-core one, giving one bigger pool of L3 cache per 8 cores. This means apps using up to 8 cores would naturally be less affected by any latency issues from cross-chip/cross-core migration, etc. Do note, though, that I'm ignorant of many of the finer technicalities here, so I'd love some input on this area and on whether an L3 cache shared by all 8 cores in 2 CCXs is even possible without a major redesign or using IF links.

5. AMD could combine aspects of all the above, which would minimize most or all latency-related issues. One thing we can count on for sure, however, is that the I/O chip does a better job of connecting the CCXs and chiplets without resorting to main memory; otherwise AMD would've stuck with the old design. One thing I do worry about: if the I/O die has a unified memory controller for all chips, it would fix the old cross-migration problems by offering consistent latencies, but it might in turn increase latency when an application exceeds all the L3 cache and runs from system memory.

Isn't this exactly the feature everyone wants, so you can disable bad memory cells, pass memtest and keep using the PC for a while longer? Long overdue.

There should be some kind of setting that lets you select which cell, in hex or otherwise (you'd need to look at the manual for the map of cell IDs), unless it has some onboard chip/logic that figures that out.

My setup runs 1440p at Ultra quality settings and I get between 70 and 144 FPS in all my games, including Metro 2033 and Metro Last Light, on an RX 580 8GB GPU and a Ryzen 7 1700X.

My RX 580 will most likely be replaced by Navi, depending on its price to performance.

But enough with the "Intel is better in Gaming" nonsense.