Thursday, May 23rd 2019

AMD X570 Unofficial Platform Diagram Revealed, Chipset Puts out PCIe Gen 4

AMD X570 is the company's first in-house socket AM4 motherboard chipset design; the X370 and X470 chipsets were originally designed by ASMedia. With the X570, AMD hopes to leverage the new PCI-Express gen 4.0 connectivity of its Ryzen 3000 Zen 2 "Matisse" processors. The desktop platform that combines a Ryzen 3000 series processor with an X570 chipset is codenamed "Valhalla." A rough platform diagram, like what you'd find in motherboard manuals, surfaced on ChipHell, confirming several features. To maintain pin-compatibility with older generations of Ryzen processors, Ryzen 3000 has exactly the same connectivity from the SoC, except for two key differences.

On the AM4 "Valhalla" platform, the SoC puts out a total of 24 PCI-Express gen 4.0 lanes. 16 of these are allocated to PEG (PCI-Express graphics), configurable through external switches and redrivers either as a single x16 slot or as two x8 slots. Besides the 16 PEG lanes, 4 lanes are allocated to one M.2 NVMe slot. The remaining 4 lanes serve as the chipset bus. With X570 rumored to support gen 4.0 at least upstream, the chipset bus bandwidth is expected to double to 64 Gbps (raw signaling rate, before 128b/130b encoding overhead). Since it's an SoC, the socket is also wired to LPCIO (the SuperIO controller). The processor's integrated southbridge puts out two SATA 6 Gbps ports, one of which is switchable to the first M.2 slot, and four 5 Gbps USB 3.x ports. It also has an "Azalia" HD audio bus, so the motherboard's audio solution is wired directly to the SoC. Things get very interesting with the connectivity put out by the X570 chipset.

Update May 21st: There is also information on the X570 chipset's TDP.
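The "double to 64 Gbps" figure for the chipset bus follows from per-lane transfer rates: PCIe 3.0 signals at 8 GT/s per lane and PCIe 4.0 at 16 GT/s, and both use 128b/130b line encoding. The minimal Python sketch below works out the x4 chipset link for both generations. It counts one direction only and ignores packet-level protocol overhead, so real-world throughput will be somewhat lower than the "effective" numbers it prints.

```python
# Per-lane transfer rates in GT/s; PCIe 3.0 and 4.0 both use 128b/130b encoding.
TRANSFER_RATE_GTS = {3: 8.0, 4: 16.0}
ENCODING_EFFICIENCY = 128 / 130


def raw_gbps(gen: int, lanes: int) -> float:
    """Raw signaling rate of a link in one direction, before encoding overhead."""
    return TRANSFER_RATE_GTS[gen] * lanes


def effective_gbps(gen: int, lanes: int) -> float:
    """Rate after 128b/130b encoding; packet/protocol overhead is ignored."""
    return raw_gbps(gen, lanes) * ENCODING_EFFICIENCY


if __name__ == "__main__":
    for gen in (3, 4):
        raw = raw_gbps(gen, 4)
        eff = effective_gbps(gen, 4)
        print(f"PCIe {gen}.0 x4: {raw:.0f} Gbps raw, "
              f"{eff:.1f} Gbps (~{eff / 8:.2f} GB/s) effective")
```

Doubling the per-lane rate doubles the x4 link in both the raw and the encoded figures, which is where the expected 2x chipset bus bandwidth comes from.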

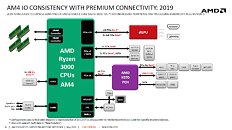

Update May 23rd: HKEPC posted what looks like an official AMD slide with a nicer-looking platform map. It confirms that AMD is going full-tilt with PCIe gen 4, both for the chipset bus and for downstream PCIe connectivity.

AMD X570 overcomes the greatest shortcoming of the previous-generation X470 "Promontory" chipset: downstream PCIe connectivity. The X570 chipset appears to put out 16 downstream PCI-Express gen 4.0 lanes. Eight of these are allocated to two M.2 slots with x4 wiring each, and the rest are broken out as x1 links. Of these links, three are put out as x1 slots; one lane drives an ASMedia ASM1143 controller (which takes in one gen 3.0 x1 link and puts out two 10 Gbps USB 3.x gen 2 ports); one lane drives the board's onboard Ethernet controller (choices include the 1 GbE Killer E2500 or Intel i211-AT, or even a Realtek 2.5 GbE chip); and one lane goes to an 802.11ax WLAN card such as the Intel "Cyclone Peak." Other southbridge connectivity includes a 6-port SATA 6 Gbps RAID controller, four 5 Gbps USB 3.x gen 1 ports, and four USB 2.0/1.1 ports.
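The downstream lane map can be tallied against the chipset's 16-lane budget. This is a minimal Python sketch, assuming the leaked diagram is accurate: the two M.2 slots are wired x4 each and six x1 links are enumerated, so the device labels below are hypothetical placeholders rather than names from any AMD document. It also compares the downstream lane count with the x4 uplink to the CPU, treating every downstream lane as gen 4.0 (the ASM1143 link actually runs at gen 3.0).

```python
from dataclasses import dataclass

CHIPSET_DOWNSTREAM_LANES = 16  # per the leaked diagram (unconfirmed)
CHIPSET_UPLINK_LANES = 4       # gen 4.0 x4 chipset bus to the CPU


@dataclass
class Link:
    name: str   # hypothetical label, for illustration only
    lanes: int


def allocate(links: list[Link], budget: int) -> int:
    """Return the lanes left over, or raise if the links exceed the budget."""
    used = sum(link.lanes for link in links)
    if used > budget:
        raise ValueError(f"{used} lanes requested, only {budget} available")
    return budget - used


x570_links = [
    Link("M.2 slot A", 4),
    Link("M.2 slot B", 4),
    Link("x1 slot 1", 1),
    Link("x1 slot 2", 1),
    Link("x1 slot 3", 1),
    Link("ASM1143 USB 3.x gen 2 controller", 1),
    Link("Ethernet controller", 1),
    Link("802.11ax WLAN card", 1),
]

if __name__ == "__main__":
    spare = allocate(x570_links, CHIPSET_DOWNSTREAM_LANES)
    # Assuming all downstream lanes run at gen 4.0 like the uplink, the lane
    # ratio is the worst-case oversubscription if every device is busy at once.
    ratio = CHIPSET_DOWNSTREAM_LANES / CHIPSET_UPLINK_LANES
    print(f"unassigned lanes: {spare}, downstream:uplink = {ratio:.0f}:1")
```

On this reading, two lanes are not assigned to anything the diagram describes, and all downstream devices share a link a quarter as wide as their combined lane count.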

Update May 21st: The source also puts the TDP of the AMD X570 chipset at 15 W or more, a 3-fold increase over the X470's 5 W TDP. This explains why every X570-based motherboard picture leak we've seen thus far shows a fan-heatsink over the chipset.

Sources:

ChipHell Forums, HKEPC

75 Comments on AMD X570 Unofficial Platform Diagram Revealed, Chipset Puts out PCIe Gen 4

Original source (German).

Sure, you can make the argument that they can use PCI-E 4.0 x2 drives and only use 2 of the 16 lanes. But that also doesn't work, because motherboard manufacturers are not going to put x2 M.2 slots on their boards unless they absolutely have to (because they are out of PCI-E lanes). Most consumers are going to be putting PCI-E 3.0 drives in those slots, so they will be limited to PCI-E 3.0 x2, and that looks bad on paper.
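The x2-slot argument rests on how PCIe link training works: a link comes up at the highest generation both ends support and the narrower of their two widths. A minimal Python sketch of that rule follows; the scenario labels are illustrative, and the throughput figures ignore protocol overhead.

```python
# Per-lane transfer rates (GT/s); PCIe 3.0 and 4.0 both use 128b/130b encoding.
TRANSFER_RATE_GTS = {3: 8.0, 4: 16.0}
ENCODING_EFFICIENCY = 128 / 130


def negotiated_link(slot_gen: int, slot_lanes: int,
                    dev_gen: int, dev_lanes: int) -> tuple[int, int]:
    """A link trains to the highest generation and width both ends support."""
    return min(slot_gen, dev_gen), min(slot_lanes, dev_lanes)


def link_gb_per_s(gen: int, lanes: int) -> float:
    """Approximate one-direction throughput in GB/s, ignoring protocol overhead."""
    return TRANSFER_RATE_GTS[gen] * ENCODING_EFFICIENCY * lanes / 8


if __name__ == "__main__":
    scenarios = [
        ("3.0 x4 drive in 4.0 x2 slot", (4, 2, 3, 4)),
        ("3.0 x4 drive in 4.0 x4 slot", (4, 4, 3, 4)),
        ("4.0 x2 drive in 4.0 x2 slot", (4, 2, 4, 2)),
    ]
    for label, args in scenarios:
        gen, lanes = negotiated_link(*args)
        print(f"{label}: runs as {gen}.0 x{lanes}, "
              f"~{link_gb_per_s(gen, lanes):.2f} GB/s")
```

A 3.0 x4 drive in a 4.0 x2 slot drops to 3.0 x2, half its native bandwidth, while a native 4.0 x2 drive matches a full 3.0 x4 link. Both halves of the argument in this thread follow from that one rule.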

Sure, people will have these boards in use for 5+ years, but that is not what I mean by the usable lifespan of the chipset. The usable lifespan of the chipset is how long manufacturers will be designing motherboards around it. That lifespan is likely a year, maybe 2. And the fact is, in that time it is unlikely they will actually be designing boards with PCI-E 4.0 as the priority. They will still be designing boards assuming people will be using PCI-E 3.0 devices, because it doesn't make sense to design boards for use 3 years after they are bought.

It's the lower QD/small R/W tasks that are the reason we don't see much real-world difference in current products.

3x the IOPS will move things along nicely even if the b/w has only gone up 30%.
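The IOPS-versus-bandwidth point is easy to quantify: at a fixed block size, throughput is simply IOPS × block size. The Python sketch below assumes 4 KiB random reads and a hypothetical low-queue-depth baseline of 15,000 IOPS (an illustrative number, not a measurement of any drive), and compares the result against a PCIe 3.0 x4 link of roughly 3.94 GB/s after encoding.

```python
BLOCK_BYTES = 4096  # assumed 4 KiB random I/O, typical of low-QD desktop workloads


def throughput_mb_s(iops: float, block_bytes: int = BLOCK_BYTES) -> float:
    """Throughput implied by an IOPS figure at a fixed block size, in MB/s (10^6 B)."""
    return iops * block_bytes / 1e6


if __name__ == "__main__":
    baseline_iops = 15_000  # hypothetical low-QD figure, not a measurement
    link_mb_s = 3_938       # ~PCIe 3.0 x4 after 128b/130b encoding
    for label, iops in (("baseline", baseline_iops), ("3x IOPS", 3 * baseline_iops)):
        mb_s = throughput_mb_s(iops)
        print(f"{label}: {mb_s:.0f} MB/s ({mb_s / link_mb_s:.1%} of the link)")
```

Even tripled, small-block throughput uses only a few percent of the link, which is why gains there come from the drive's latency and IOPS rather than from interface bandwidth.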

@my_name_is_earl What are you on about? AMD's platforms have more lanes than their competitor.

Maybe wait until Tuesday before you respond?

As for pricing, there's nothing announced, but there is zero reason to expect them to drastically increase in price over today's most common drives. I'd be very surprised if they were as expensive as the 970 Pro, and prices are bound to drop quickly as more options arrive. A relatively minor interface upgrade for a commodity like an SSD is hardly grounds for a doubling in price.

My entire point is this shouldn't have to be a necessity. Give me enough lanes so that when I plug in an M.2 drive, my RAID card doesn't stop working.

I wouldn't say they are popular. They are just a thing that exists. And they exist because the drives themselves can't really use more than an x2 connection anyway. If you are saying those are very popular and hence what everyone is buying, then there really is no need for PCI-E 4.0 drives, as I said.

You don't have a good grasp on how the tech world works, do you? The latest bleeding-edge technology, especially when it is the "fastest on the market," is never cheap. If they can throw a marketing gimmick in the specs that's new and faster, the price will be higher, even if actual performance isn't. Hell, M.2 SATA drives have historically been more expensive than their 2.5" counterparts for the sole reason that M.2 is "new and fancy," so they figure they can charge 5% more.

Also, if your PC contains enough SSDs to require that last NVMe slot, and enough HDDs to require a RAID card, you should consider spinning your storage array out into a NAS or storage server. Then you won't have to waste lanes on a big GPU in that machine, making room for more controllers, SSDs and whatnot, while making your main PC less power hungry. Not keeping all your eggs in one basket is smart, particularly when it comes to storage. And again, if you can afford a RAID card and a bunch of NVMe SSDs, you can afford to set up a NAS.

They are popular because they are cheap. They are cheap because their controllers are simpler than those of x4 drives, in both internal lanes and external PCIe lanes. A narrower PCIe interface is a significant cost saving, and that won't go away when moving to 4.0, even if they double up on internal lanes to increase performance. In other words, unless PCIe 4.0 controllers are extremely complex to design and manufacture, a PCIe 4.0 x2 SSD controller will be cheaper than a similarly performing 3.0 x4 controller.

Phison and similar controller makers don't have the brand recognition or history of high-end performance to sell drives at proper "premium" NVMe prices; pretty much only Samsung does (outside of the enterprise/server space, that is, where prices are bonkers as always). Will they charge a premium for a 4.0 controller over a similar 3.0 one? Of course. But it won't be that much, as it wouldn't sell. Besides, even for the 970 Pro, the flash is the main cost driver, not the controller. There's no doubt 4.0 drives will demand a premium, but as I said, I would be very surprised if they came close to the 970 Pro (which, for reference, is $100 more for 1TB compared to the Evo).

As for a 25 W TDP, no, that is also unreasonable, and if it were that high, that would also be AMD's fault. The Z390 gives 24 downstream lanes and has a TDP of 6 W, and it also provides more I/O than the X570 would. The fact is, thanks to AMD's better SoC-style platform, with the CPU handling a lot of the I/O that Intel still has to rely on the southbridge for, the X570 has a major advantage when it comes to TDP because it needs to do less work. And I'd also guess the high 15 W TDP estimates for the X570 come down to the fact that it uses PCI-E 4.0.

So, again, at this point in time I would rather they put more PCI-E 3.0 lanes in and not bother with PCI-E 4.0 in the consumer chipset. More lanes would allow better motherboard designs without the need for switching and port disabling. It would likely lower the TDP as well.

And the mainstream users are likely not using X570 either. They are likely going for the B series boards, so likely B550. They buy less expensive boards with fewer extra features that require fewer PCI-E lanes. But enthusiasts that buy X570 boards expect those boards to be loaded with extra features, and most of those extras run off PCI-E lanes.

Phison isn't going to be selling drives to the consumer; they never have, AFAIK. So it doesn't matter how well known they are to the consumer; they are very well known to the drive manufacturers. They sell the controllers to drive manufacturers, and the drive manufacturers sell the drives to consumers. Phison will charge more for their controller, and the drive manufacturers will charge more for the end drives. They will charge more because the controller costs more, the NAND needed to actually reach the higher rated speeds costs more, and they have the marketing gimmick of PCI-E 4.0.

And of course, yes, more 3.0 lanes would allow for more ports/slots/devices without the need for switching, and would likely lower the TDP of the chipset. But they would also drive up motherboard costs, as implementing all of those PCIe lanes requires more complex PCBs. The solution, as with cheaper Z3xx boards, will likely be that a lot of those lanes are left unused.

That's not quite true. Of course, it's possible that X570 will demand more of a premium than X470 or X370, and yes, there are a lot of people using Bx50 boards, but the vast majority of people on X370/X470 are still very solidly in the "mainstream" category and have relatively few PCIe devices.

No, they won't, but they will be selling them to OEMs. Which OEMs? Not Samsung, which has the premium NVMe market cornered, and not WD, which is the current NVMe price/performance king. So they're left with brands with less stellar reputations, which means those brands will be less able to sell products at ultra-premium prices, no matter the performance. Sure, some will try with exorbitant MSRPs, but prices inevitably drop once products hit the market. It's obvious that some will use PCIe 4.0 as a sales gimmick (with likely only QD>32 sequential reads exceeding PCIe 3.0 x4 speeds, if that), but in a couple of years the NVMe market is likely to have begun a wholesale transition to 4.0 with no real added cost. If AMD didn't move to 4.0 now, that transition would only begin a similar amount of time after 4.0 eventually became available; in other words, we'd have to wait a long time to get faster storage. The job of an interface is to provide plentiful performance for connected devices. PCIe 3.0 is reaching a point where it doesn't quite do that any more, so the move to 4.0 is sensible and timely. Again, it's obvious that there will be few devices available in the beginning, but every platform needs to start somewhere, and postponing the platform also means postponing everything else, which is a really bad plan.

Given the speed of USB 3.1 versus most peripherals, a hub is probably better (again for those that need it) than putting a pile of USB on every motherboard.

Someone could in theory also build a monster I/O card giving out 2x the amount the chipset does (x8 versus x4) for the few people who want more I/O without going to HEDT.

As you say, the loss from x16 to x8 for a GPU is currently very low, even on 3.0.

The lanes from the CPU are already PCI-E 4.0. That covers your GPUs and a high-end PCI-E 4.0 M.2 SSD when you want to upgrade to one in the future. Make the chipset put out PCI-E 3.0 lanes and give more flexibility with more lanes. You're still getting your futureproofing, you're still getting your adoption of a new standard, and you also get more flexibility to add more components to the motherboard without forcing the consumer to choose between the things they want to use.

So, what you're saying is that the only PCI-E 4.0 NVMe SSD controller we've seen so far won't be used by either of the two biggest, best-known SSD manufacturers (it likely won't be used by Micron either, so that's actually the three biggest SSD manufacturers). Yeah, those PCI-E 4.0 controllers are ready to go mainstream, I tell ya!

And, like I said, it isn't like the platform wouldn't have a PCI-E 4.0 M.2 slot for the future anyway. Remember, I'm not arguing to completely get rid of PCI-E 4.0; the CPU would still be putting out PCI-E 4.0 lanes. So there would still be a slot available when the time comes that you actually want to buy a PCI-E 4.0 M.2 drive.

People talk about how primitive the fans are but it's not like tower VRM coolers are a new thing. Nor are boards with copper highly-finned coolers. But, clearly, we are advancing as an industry because rainbow LEDs, plastic shrouds, and false phase count claims, are where it's at.

I wonder if even one of the board sellers is going to bring feature parity between AMD and Intel. Intel boards for quad-core CPUs were given coolers that could be hooked up to a loop. AMD buyers, despite having Piledriver to power, were given the innovation of tiny fans. Yes, folks, the tiny fan innovation for AMD was most recently seen in the near-EOL AM3+ boards. Meanwhile, only Intel buyers were considered serious enough to have the option of making use of their loops without having to pay through the nose for an EK-style solution. (I'm trying to remember the year the first hybrid VRM cooler was sold to Intel buyers. 2011? There was some controversy over it being anodized aluminum, but ASUS claimed it was safe. Nevertheless, it switched to copper shortly after. I believe Gigabyte sold hybrid-cooled boards as well, for Intel systems. The inclusion of hybrid cooling was not a one-off. It was multigenerational and expanded from ASUS to Gigabyte.)

MSI's person said no one wanted this but ASUS, at least, thought the return of the tiny fan was innovative.