Friday, March 6th 2020

AMD RDNA2 Graphics Architecture Detailed, Offers +50% Perf-per-Watt over RDNA

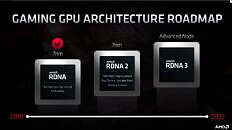

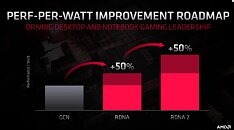

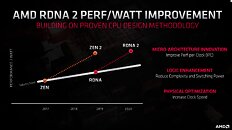

With its 7 nm RDNA architecture that debuted in July 2019, AMD achieved a nearly 50% gain in performance/Watt over the previous "Vega" architecture. At its 2020 Financial Analyst Day event, AMD made a big disclosure: that its upcoming RDNA2 architecture will offer a similar 50% performance/Watt jump over RDNA. The new RDNA2 graphics architecture is expected to leverage 7 nm+ (7 nm EUV), which offers up to 18% transistor-density increase over 7 nm DUV, among other process-level improvements. AMD could tap into this to increase price-performance by serving up more compute units at existing price-points, running at higher clock speeds.
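As a rough illustration of what two consecutive ~50% performance/Watt jumps would mean if both claims hold, the gains compound rather than add. The sketch below treats "nearly 50%" as exactly 50%, which is an assumption, and the function names are made up for this example.

```python
# Generational perf/W gains multiply; they do not add.
VEGA_TO_RDNA = 0.50    # "nearly 50%" per the article, assumed to be exactly 50% here
RDNA_TO_RDNA2 = 0.50   # AMD's stated target for RDNA2 over RDNA


def compound_gain(*gains):
    """Return the combined multiplier for a chain of fractional gains."""
    total = 1.0
    for gain in gains:
        total *= 1.0 + gain
    return total


def relative_power_at_same_performance(gain):
    """Power needed for unchanged performance after a perf/W gain."""
    return 1.0 / (1.0 + gain)


rdna2_vs_vega = compound_gain(VEGA_TO_RDNA, RDNA_TO_RDNA2)
print(f"RDNA2 vs Vega perf/W: {rdna2_vs_vega:.2f}x")
print(f"Power at RDNA-level performance: "
      f"{relative_power_at_same_performance(RDNA_TO_RDNA2):.0%} of RDNA")
```

Under these assumptions RDNA2 would land at 2.25x the performance/Watt of "Vega", or roughly two-thirds of RDNA's power draw at unchanged performance.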

AMD has two key design goals with RDNA2 that help it close the feature-set gap with NVIDIA: real-time ray-tracing, and variable-rate shading, both of which have been standardized by Microsoft under DirectX 12 DXR and VRS APIs. AMD announced that RDNA2 will feature dedicated ray-tracing hardware on die. On the software side, the hardware will leverage the industry-standard DXR 1.1 API. The company is supplying RDNA2 to next-generation game console manufacturers such as Sony and Microsoft, so it's highly likely that AMD's approach to standardized ray-tracing will have more takers than NVIDIA's RTX ecosystem that tops up DXR feature-sets with its own RTX feature-set.

Variable-rate shading is another key feature that has been missing on AMD GPUs. The feature allows a graphics application to apply different rates of shading detail to different areas of the 3D scene being rendered, to conserve system resources. NVIDIA and Intel already implement VRS tier-1 standardized by Microsoft, and NVIDIA "Turing" goes a step further in supporting even VRS tier-2. AMD didn't detail its VRS tier support.
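To give a feel for how variable-rate shading conserves resources, here is a minimal sketch that counts pixel-shader invocations when different screen tiles are shaded at different coarse rates. It is a toy model and not the DirectX 12 VRS API: the tile size, the `center_weighted` policy, and all function names are assumptions made for illustration.

```python
import math


def shader_invocations(width, height, tile, rate_for_tile):
    """Count shader invocations when each tile uses its own shading rate.

    rate_for_tile(tx, ty) returns (rx, ry): one shaded result is reused
    for a block of rx * ry pixels inside that tile.
    """
    total = 0
    for ty in range(0, height, tile):
        for tx in range(0, width, tile):
            w = min(tile, width - tx)    # clip partial tiles at the edges
            h = min(tile, height - ty)
            rx, ry = rate_for_tile(tx, ty)
            total += math.ceil(w / rx) * math.ceil(h / ry)
    return total


def center_weighted(width, height):
    """Hypothetical policy: full rate in the central region, 2x2 elsewhere."""
    def rate(tx, ty):
        in_x = width / 4 <= tx < 3 * width / 4
        in_y = height / 4 <= ty < 3 * height / 4
        return (1, 1) if (in_x and in_y) else (2, 2)
    return rate


full = shader_invocations(1920, 1080, 16, lambda tx, ty: (1, 1))
vrs = shader_invocations(1920, 1080, 16, center_weighted(1920, 1080))
print(f"Invocations saved by the toy policy: {1 - vrs / full:.0%}")
```

A real application would pick rates from scene content (motion, depth of field, or low-contrast regions) rather than screen position alone.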

AMD hopes to deploy RDNA2 on everything from desktop discrete client graphics, to professional graphics for creators, to mobile (notebook/tablet) graphics, and lastly cloud graphics (for cloud-based gaming platforms such as Stadia). Its biggest takers, however, will be the next-generation Xbox and PlayStation game consoles, which will also shepherd game developers toward standardized ray-tracing and VRS implementations.

AMD also briefly touched upon the next-generation RDNA3 graphics architecture without revealing any features. All we know about RDNA3 for now is that it will leverage a process node more advanced than 7 nm (likely 6 nm or 5 nm, AMD won't say), and that it will come out some time between 2021 and 2022. RDNA2 will extensively power AMD client graphics products over the next 5-6 calendar quarters, at least.

306 Comments on AMD RDNA2 Graphics Architecture Detailed, Offers +50% Perf-per-Watt over RDNA

I edited like 35 minutes before your post, lol...hit refresh before you post if it's sitting that long, lol.

EDIT: We have no idea how either RDNA2 or Ampere will respond versus its TBP. So to that, I used a static value, the MFG ratings (sourced from TPU's specs pages on the cards). Actual use will vary but how will depend... so again, I took the only static numbers out there that would not vary by card...I see the actual numbers are lower. They are at least 10% behind in that metric. Still facing an uphill battle considering Nvidia has a node shrink in front of them along with a change in architecture.

Regardless of 50W (~20%) or 24W (~10%), the high-level point is unchanged... the RDNA arch on a smaller node is less efficient than Turing on a larger node. They have a lot of work to do to reclaim the performance crown and have some work to regain performance/watt. Where AMD only has an arch change, Nvidia is coming with both barrels loaded (arch and node shrink).

EDIT: My reply all started with this comment, mind you.......

I don't think they have much to worry about except for the usual price to performance ratio considering all that we know right now, including the 50% rumors from both camps...but I've said that like 3 times now to 3 different people it feels like.

EDIT2: Isn't RDNA2 also supposed to add RT capabilities as well? Won't that eat into their 'normal' power envelope? Like Nvidia, this lowered their typical GoG (generation over generation) performance improvements.... will it do the same to AMD?

All of these factors make me confident Nvidia isn't "worried" about 'big navi'. They have A LOT of work to do in order to catch up.

GDDR6 consumes 20Watts for 16GB. Same capacity HBM2 is 10W.

It is possible, and we can only guess at the outcome.

You're right that RDNA is still slightly less efficient in an absolute sense (though that depends on the implementation; the RX 5700 XT is slightly less efficient than the 2070S, but the 5600 XT is (even with the new, boosted BIOS) better than its Nvidia competition by a few percent). Nvidia still (obviously!) has the more efficient architecture given the node disadvantage, but taking into account that AMD has historically struggled on perf/W, just launched a new arch with major perf/W improvements (not just due to 7nm, remember that the 5700 XT roughly matches the VII in performance at significantly less power draw on the same node, and with less efficient memory to boot), one might assume that there weren't major efficiency improvements to be had in the new architecture right off the bat. Apparently AMD says there are. Which is surprising to me, at least.

Now, I'm not saying "Nvidia should be worried", as that's a silly statement implying that AMD is somehow going to surpass them out of the blue, but unless Nvidia manages to pull off their fifth consecutive round of significant efficiency improvements (beyond just the node change, that is) we might see AMD come close to parity if these rumors pan out. Of course we also might not, the rumors might be entirely wrong, or Nvidia might indeed have a major improvement coming - we have no idea.

It's also worth pointing out that your initial statement is rather self-contradictory - on the one hand you're saying we don't have data so we should use manufacturer specs (for entirely different cards..?), while you also say "we will deal with actual numbers" (which I'm reading as real-world test data) once they arrive. Why not then also base ourselves on real-world numbers for currently available cards, rather than their specs (which are very often misleading if not flat out wrong)? Your latter statement implies that real-world data is better, so why not also use that for existing cards?

Possible, yes. But AMD brought in HBM specifically as a way of increasing memory bandwidth without the massive PCBs and expensive and complex trace layouts required by 512-bit memory buses. Now, GDDR6 is much faster than GDDR5, but also more expensive, which somewhat alleviates the main pain point of HBM - cost. Add to that that GDDR6 needs even more complex traces than GDDR5, and it becomes highly unlikely that we'll ever see a GPU with a 512-bit GDDR6 bus - HBM2(E) is far more likely at that kind of performance (and thus price) level. You're welcome to disagree, but AMD's recent history doesn't.

Again, I wasn't really talking to you out of the gate, but to the Super XP guy who thinks Nvidia is going to be "worried". AMD has a long way to go, bud, no matter what way you slice the numbers. Nvidia has a die shrink and arch change, while AMD has an arch change while adding on RT hardware for the first time. I'm a betting man and my money is on Nvidia being able to reach these rumored goals.

But yes, we have no idea... I know/knew that going into my first reply to Super XP... may have even said it there too....this merry go round is making me dizzy. I don't give 2 shits to split hairs and semantics which don't matter to the overall point........ :).

AMD is currently behind in ppw. Outside of the 5600 XT, which had to be tweaked the week before reviews, Navi is less efficient than Turing. At best, with the 5600 XT it is on par/negligible differences. However the budget 5500 XT and the (current) flagship 5700 XT are not as efficient. So there is that hurdle to overcome. Next, performance. 46% increase to reach 2080 Ti speeds from a 5700 XT. If we use Kepler to Turing and its paltry increase (25%), that means AMD needs to come close to a 71% performance increase to match Ampere. I'll call AMD's flagship 'close' to Nvidia's when it is within 10%. So let's say it needs 61% improvement over the 5700 XT.... I ask again, to all, have we ever seen a 61% performance increase from gen to gen? Maybe 8800 GTS over a decade ago??? I don't recall....
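A quick check on the arithmetic in the comment above: adding the two percentages (46% + 25% = 71%) slightly understates the target, because relative uplifts compound. The sketch below uses only the comment's own figures; the 25% next-generation uplift is the commenter's assumption, not a known number, and "within 10%" is read here as reaching 90% of the flagship's performance.

```python
def compound(*uplifts):
    """Combine fractional uplifts multiplicatively, return the net uplift."""
    total = 1.0
    for uplift in uplifts:
        total *= 1.0 + uplift
    return total - 1.0


TO_2080TI = 0.46         # 5700 XT -> 2080 Ti, figure taken from the comment
ASSUMED_NEXT_GEN = 0.25  # commenter's assumed uplift of the next flagship

needed_to_match = compound(TO_2080TI, ASSUMED_NEXT_GEN)
needed_within_10pct = (1.0 + needed_to_match) * 0.90 - 1.0

print(f"Uplift to match:          {needed_to_match:.1%}")
print(f"Uplift to get within 10%: {needed_within_10pct:.1%}")
```

With compounding, the bar moves from 71% to about 82% to match, and from 61% to about 64% to land within 10%, which only strengthens the comment's point about how large a jump that would be.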

So, for the last time....... :)

Nvidia is sure as hell not worried about AMD. AMD has a lot of work to match/come close to what Ampere can bring in performance, a bit less work - but work nonetheless - to take the overall PPW crown. Can anyone refute those points?

Also, saying Navi is overall less efficient than Turing ... well, that depends massively on the implementation. First off, mentioning that the 5600 XT was tweaked just before launch is rather contrary to your argument in this context, as it was tweaked to be far less efficient by boosting clocks, with the pre-update BIOS being by far the most efficient GPU TPU has ever tested at 1440p and 4k (not that it's a 4k capable GPU, but it is definitely an entry-level 1440p card). In other words, depending on the implementation Navi can be both more and less efficient than Turing. Does that mean it's a more efficient architecture? Obviously not - the node advantage AMD has at this point means that Nvidia still has their obvious architecture advantage. But Navi has been demonstrated to be very efficient when it's not being pushed as far as it can possibly go. That it scales well downwards is very promising in terms of a larger die being efficient at lower clocks, after all. People keep talking about "AMD just needs X times the 5700 XT to beat the 2080 Ti", yet that would be a ~440W GPU barring major efficiency improvements. 2x 5600 XT, on the other hand, would still beat the 2080 Ti handily (the latter is 60, 74 and 85% faster at 1080p, 1440p and 4k respectively), but at just ~330W. Or you could use clocks closer to the original 5600 XT BIOS, and still beat or nearly match it (2x 91 vs. 160%, 2x 91 vs. 174% and 2x 90 vs. 185%, assuming perfect scaling which is of course a bit optimistic) but at just 250W! So yeah, don't discount the value of scaling down clocks to reach a performance target with a larger die. Just because the 5700 XT was pushed as far as it can go to compete as well as possible with the 2070 doesn't mean that AMD's next large GPU will be pushed as far. They have a history of doing so, but that was with GCN which had a hard limit of 64 CUs, which meant that the only way to improve performance was higher clocks. That no longer applies for RDNA.
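The scaling argument above can be worked through with the figures quoted in the comment. This is only a sketch: the relative-performance numbers are the commenter's, and `scaling_efficiency` is a hypothetical knob added here to show how quickly the result changes once doubling the die stops scaling perfectly.

```python
# 2080 Ti performance relative to a boosted-BIOS 5600 XT (= 1.00), per the comment.
TI_VS_5600XT = {"1080p": 1.60, "1440p": 1.74, "4K": 1.85}
# Original-BIOS 5600 XT relative to the boosted BIOS, per the comment.
ORIGINAL_BIOS = {"1080p": 0.91, "1440p": 0.91, "4K": 0.90}


def doubled_vs_2080ti(resolution, per_die=1.0, scaling_efficiency=1.0):
    """Performance of a doubled 5600 XT relative to a 2080 Ti.

    scaling_efficiency = 1.0 means perfect 2x scaling (optimistic).
    """
    doubled = 2.0 * per_die * scaling_efficiency
    return doubled / TI_VS_5600XT[resolution]


for res in TI_VS_5600XT:
    boosted = doubled_vs_2080ti(res)
    original = doubled_vs_2080ti(res, per_die=ORIGINAL_BIOS[res])
    realistic = doubled_vs_2080ti(res, ORIGINAL_BIOS[res], scaling_efficiency=0.9)
    print(f"{res}: boosted {boosted:.2f}x, original BIOS {original:.2f}x, "
          f"original BIOS at 90% scaling {realistic:.2f}x")
```

With perfect scaling the original-BIOS case is ahead at 1080p and 1440p and just behind at 4K, which matches "beat or nearly match"; at an assumed 90% scaling it would fall behind at 1440p and 4K as well.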

As I said above, I completely agree that saying "Nvidia should be worried" is silly, but you on the other hand seem to be consistently skewing things in favor of Nvidia, whether consciously or not.

AMD is going to have a tough time beating Ampere on either front...one has arch + node, the other, just arch.

Cheers.

And node advantage doesn't mean much here. Even if you potato your way into a lower node, there are still inherent efficiency gains to be had. If there is a sponge with more left to squeeze out of it, it seems like that is Nvidia, considering a node shrink on top of a new arch. AMD is also adding ray tracing cores. If their addition is anything like Nvidia's, it will be lucky to reach 2080 Ti speeds.

As I said, I'll bet it lands between a 2080 Ti and the Ampere flagship. I believe it will fall at least 10% short of Ampere on performance alone (no clue on RTX performance, likely the same idea...faster than 2080 Ti, slower than Ampere) and slightly worse power to performance overall. Pricing on these parts, from both parties, will be paramount in choosing the right card...and AMD will surely be a worthy competitor and offer viable options.

I assume you meant that Nvidia would have an arch+node advantage over the other (AMD) with just arch? Because AMD is already on 7nm, whereas Nvidia currently is not. If that is what you mean, then you are saying that Nvidia has a node advantage over AMD. Which is why I said AMD has more 7nm experience, which would render Nvidia's so-called node advantage obsolete.

Correct me if I am wrong of course.

Fully Agree.

We will definitely get more concrete details about both RDNA2 & Ampere. It's going to be a very interesting 2020. Hopefully COVID-19 doesn't slow down both AMD & Nvidia GPU launches, because many are itching for new GPUs. :D

Nvidia waiting for AMD's Big Navi lol..

Nvidia might be holding off finalizing the timing, pricing and segmentation until they know more, but if so this is to position themselves, not due to concern. When rumors are pointing in every direction, it's usually a sign that the rumors are all speculation, and Nvidia probably don't know quite what to expect.

But I don't think Nvidia's next-gen is imminent. Everything seems to point to it being months away.

2020 will be a great year for new GPUs. Can't wait.

:toast:

Waiting to finalize clocks/specs is quite normal. But it's not like they are sitting there ready to go, waiting on AMD to release. They, naturally, are not ready.

www.techpowerup.com/gpu-specs/radeon-hd-5750.c249

www.techpowerup.com/gpu-specs/radeon-hd-5870.c253

These are the same generation, the same micro-architecture, just scaled up and down.

RX 5700 XT is heavily overvolted out of the box, heavily pushed beyond its sweet spot. It's not an upper middle but lower middle range card.

Its real power consumption should be not more than 180-190-watt and even then it's too much.

Navi 21 at 505 sq.mm should have 100% more shaders and 50% higher power consumption, performance-per-watt, too.

Anything less than 80-100% higher performance than Navi 10 would be a major fail.

And where are your sources that say Nvidia is on track to deliver next-gen cards?

Because we hear exactly nothing and see no signs of anything in physical existence from them.

Regarding the rest of your post... read on after my post you quoted. People have said that and I've already responded to it. ;)

wow... 80%+ or bust ehh? That's the most optimistic take I've heard.

Nvidia is supposedly getting a little nervous, though not in the sense of being worried: Nvidia may have to alter its next-gen GPU specifications to ensure they have enough to combat the Big Navi GPU.

See the 3:17 mark, or listen from 3:00 to 4:00, about a minute.