Friday, March 6th 2020

AMD RDNA2 Graphics Architecture Detailed, Offers +50% Perf-per-Watt over RDNA

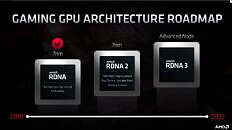

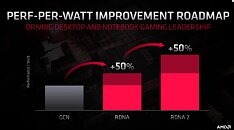

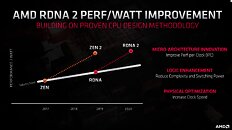

With its 7 nm RDNA architecture that debuted in July 2019, AMD achieved a nearly 50% gain in performance/Watt over the previous "Vega" architecture. At its 2020 Financial Analyst Day event, AMD made a big disclosure: its upcoming RDNA2 architecture will offer a similar 50% performance/Watt jump over RDNA. The new RDNA2 graphics architecture is expected to leverage 7 nm+ (7 nm EUV), which offers up to an 18% transistor-density increase over 7 nm DUV, among other process-level improvements. AMD could tap into this to increase price-performance by serving up more compute units at existing price-points, running at higher clock speeds.
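As a rough illustration of how the two quoted generational gains stack, the arithmetic can be sketched in Python. The helper name `compound_gain` is hypothetical, and the inputs are the approximate figures quoted above (AMD's Vega-to-RDNA number was "nearly" 50%), so the result is a ballpark rather than a specification.

```python
def compound_gain(*gains):
    """Multiply successive fractional gains (0.50 means +50%) into one factor."""
    total = 1.0
    for gain in gains:
        total *= 1.0 + gain
    return total

# Assumed inputs: ~50% (Vega -> RDNA) and the claimed 50% (RDNA -> RDNA2).
vega_to_rdna2 = compound_gain(0.50, 0.50)
print(f"RDNA2 perf/Watt relative to Vega: about {vega_to_rdna2:.2f}x")
```

Gains of this kind multiply rather than add, which is why two 50% steps come to more than a doubling.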

AMD has two key design goals with RDNA2 that help it close the feature-set gap with NVIDIA: real-time ray-tracing and variable-rate shading, both of which have been standardized by Microsoft under the DirectX 12 DXR and VRS APIs. AMD announced that RDNA2 will feature dedicated ray-tracing hardware on die. On the software side, the hardware will leverage the industry-standard DXR 1.1 API. The company is supplying RDNA2 to next-generation game console manufacturers such as Sony and Microsoft, so it's highly likely that AMD's approach to standardized ray-tracing will have more takers than NVIDIA's RTX ecosystem, which tops up DXR feature-sets with its own RTX feature-set.

Variable-rate shading is another key feature that has been missing on AMD GPUs. The feature allows a graphics application to apply different rates of shading detail to different areas of the 3D scene being rendered, to conserve system resources. NVIDIA and Intel already implement VRS tier-1 standardized by Microsoft, and NVIDIA "Turing" goes a step further in supporting even VRS tier-2. AMD didn't detail its VRS tier support.

AMD hopes to deploy RDNA2 on everything from desktop discrete client graphics, to professional graphics for creators, to mobile (notebook/tablet) graphics, and lastly cloud graphics (for cloud-based gaming platforms such as Stadia). Its biggest takers, however, will be the next-generation Xbox and PlayStation game consoles, which will also shepherd game developers toward standardized ray-tracing and VRS implementations.

AMD also briefly touched upon the next-generation RDNA3 graphics architecture without revealing any features. All we know about RDNA3 for now is that it will leverage a process node more advanced than 7 nm (likely 6 nm or 5 nm, AMD won't say), and that it will come out some time between 2021 and 2022. RDNA2 will extensively power AMD client graphics products over the next 5-6 calendar quarters, at least.


306 Comments on AMD RDNA2 Graphics Architecture Detailed, Offers +50% Perf-per-Watt over RDNA

I also said this a few posts later:

So, I don't know who it is you are arguing against, but it certainly isn't me. What you are saying bears no relation to the post you quoted when it's read in its proper context. It was commenting on something that related to an architecture and a series of chips (RDNA 1 vs 2 and Navi 1X vs 2X), not a specific chip, so talking absolute performance numbers (such as 2x 5700 XT) is meaningless in that context. AMD has said that they will be competing in the flagship space this generation, so at least close to 2x 5700 XT is quite likely. But even then using such a card to say "RDNA 2 is 2x as fast as RDNA 1" would be stupid as you'd be comparing cards in different price ranges and power envelopes.

I mean they could have easily named it NAVI 2 and NAVI 3. But they chose the "X" for a reason IMO.

For me anyway I never thought that 2X or 3X meant performance increase over 1X, though I can understand why some might read it that way. My speculation, RDNA2 is going to be more than 2x the performance of RDNA1.

GeForce RTX 2080 Ti = 273 W (average gaming consumption) = 156% performance (3840x2160)

50% better performance per watt in Navi 2* will mean 150% performance in the same 219 W as Navi 10.

If we assume that Navi 10 is memory bandwidth starved (only around 448 GB/s) and is overvolted at stock, then we could add additional 10-20% performance in considerably lower stock power consumption, for instance 160-170% performance in 180 W (average gaming consumption).

If Navi 21's average gaming consumption is 280 W and its performance scales linearly, then it should show around 55% higher consumption or 215-225% the performance of Navi 10.

So, around 45% higher performance than RTX 2080 Ti at the same consumption.

If, however, AMD decides to push the TDP further to 350 W, then the relative performance would be 250-260%.
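The kind of estimate made in the post above can be sketched in a few lines of Python. This is only an illustration: the function name `scale_linearly` is hypothetical, and the assumption that performance scales in direct proportion to board power is the poster's simplification, not something AMD has stated. The output depends heavily on which baseline is plugged in and will not necessarily match the figures quoted above.

```python
def scale_linearly(perf_pct, power_w, target_power_w):
    """Scale a relative performance figure in proportion to board power.

    Assumes perfectly linear scaling, which real GPUs rarely achieve.
    """
    return perf_pct * target_power_w / power_w

# Baseline from the post: Navi 10 = 100% performance at 219 W.
navi10_power_w = 219.0
same_power_perf = 100.0 * 1.5  # +50% perf/Watt at an unchanged 219 W

at_280w = scale_linearly(same_power_perf, navi10_power_w, 280.0)
print(f"From 150% at 219 W: {at_280w:.1f}% of Navi 10 at 280 W")

# The post's more optimistic starting points: 160-170% at 180 W.
for perf in (160.0, 170.0):
    scaled = scale_linearly(perf, 180.0, 280.0)
    print(f"From {perf:.0f}% at 180 W: {scaled:.1f}% of Navi 10 at 280 W")
```

Swapping 280.0 for 350.0 gives the corresponding estimate for the higher power target mentioned above.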

They have already got working cards and as per reports are testing them right now.

Maybe a new revision to try to improve it even further?

*) I only know about Navi 21/22/23 so far.

Which delay in particular are you thinking of?

Where is the performance, high-end, enthusiast card?

Isn't this a delay of years?

But I don't know if there ever was a "big Navi" for Navi 1x, if so it was scrapped long before tapeout.

It would be particularly interesting to hear Navi's story: was it originally intended for the N14 node and then moved forward to N7, or was N7 simply too late?

They offer some features in their graphics that no one else can or will because their IP competence is lower.

But AMD is way too late to implement 4K gaming for the masses, way too late to introduce ray-tracing, way too late to even compete in some segments of the market.

To be honest, I would be happier if Nvidia goes only for the low-end and mid-range markets, while AMD competes only with itself at the top high-end tier.

I believe it was early last year that Lisa Su said something along the lines of "Vega level performance" for Navi (1x). So I believe it's the hype and expectations to blame here.

Not sure if there was any Sony involvement though.

Too late for ray tracing? Currently ray tracing is useless. 4K gaming? Radeon VII takes care of that for those who didn't want to buy Nvidia. The meat and potatoes for RTG is RDNA2, which is rumored to be a market disrupter. And yes, we ALL can't wait for stiffer competition.

But I do expect the big Navi much sooner. In my view, it should already have been launched.

It has not been, and the only explanation I can find for myself is bizarre political decisions.

In recent interviews, Mr. Papermaster from AMD says that they try to implement only the "right" IPC improvements. What does "right" mean? And if he is the person who decides, then these are subjective, and wrong, decisions.

See how many times the word "right" has been said by him:

www.anandtech.com/show/15268/an-interview-with-amds-cto-mark-papermaster-theres-more-room-at-the-top

How do consoles, whose hardware is poor compared to top PC hardware, run 4K then, and why?

Why are 4K TVs mainstream now?

Also did the 8K benchmark.

Ran the 8K Peru video no problem, but I don't use any of those other browsers except Brave, and that is for personal stuff, not watching videos.

7N => 7NP/7N+ could give 10%/15% power savings, but the rest...

So, 35-40% improvement would come from arch updates alone?

And that following the major perf/watt jump from Vega=>Navi?

Welp, what about Vega vs Navi? Same process, 330 mm² with faster mem barely beating a 250 mm² chip from the next generation.

Ahaha, hi there, is it you, burnt fuse? :D

The same people who introduced R600, Bulldozer, Jaguar, Vega and now have two competing chips Polaris 30 and Navi 14 covering absolutely the same market segment.

Please, let's just agree to disagree with each other and stop the argument here and now.

Thanks.