Friday, March 6th 2020

AMD RDNA2 Graphics Architecture Detailed, Offers +50% Perf-per-Watt over RDNA

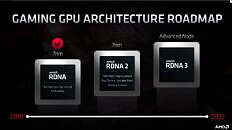

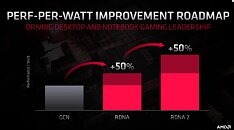

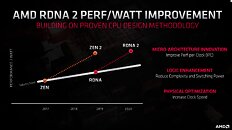

With its 7 nm RDNA architecture that debuted in July 2019, AMD achieved a nearly 50% gain in performance/Watt over the previous "Vega" architecture. At its 2020 Financial Analyst Day event, AMD made a big disclosure: its upcoming RDNA2 architecture will offer a similar 50% performance/Watt jump over RDNA. The new RDNA2 graphics architecture is expected to leverage 7 nm+ (7 nm EUV), which offers up to an 18% transistor-density increase over 7 nm DUV, among other process-level improvements. AMD could tap into this to improve price-performance by serving up more compute units at existing price-points, running at higher clock speeds.
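To put the two claimed generational jumps in perspective, here is a minimal Python sketch of how they compound. It takes AMD's figures at face value (roughly +50% from "Vega" to RDNA, and a claimed +50% from RDNA to RDNA2); the function name `compound_gain` is made up for illustration, and real products will vary by SKU and workload.

```python
def compound_gain(*gains):
    """Multiply a chain of fractional gains (0.50 means +50%) into one factor."""
    total = 1.0
    for gain in gains:
        total *= 1.0 + gain
    return total


# AMD's claimed figures, taken at face value (assumption: both are exactly 50%).
VEGA_TO_RDNA = 0.50
RDNA_TO_RDNA2 = 0.50

# Performance/Watt of RDNA2 relative to "Vega" if both claims hold.
rdna2_vs_vega = compound_gain(VEGA_TO_RDNA, RDNA_TO_RDNA2)

# Power needed by RDNA2 to match RDNA performance, as a fraction of RDNA power.
power_at_equal_perf = 1.0 / (1.0 + RDNA_TO_RDNA2)

print(f"RDNA2 vs Vega perf/Watt: {rdna2_vs_vega:.2f}x")
print(f"Power at RDNA-level performance: {power_at_equal_perf:.0%}")
```

In other words, two consecutive 50% jumps would amount to 2.25x the performance/Watt of "Vega", or about two-thirds of the power at the same performance as RDNA.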

AMD has two key design goals with RDNA2 that help it close the feature-set gap with NVIDIA: real-time ray-tracing and variable-rate shading, both of which have been standardized by Microsoft under the DirectX 12 DXR and VRS APIs. AMD announced that RDNA2 will feature dedicated ray-tracing hardware on die. On the software side, the hardware will leverage the industry-standard DXR 1.1 API. The company is supplying RDNA2 to next-generation game console manufacturers such as Sony and Microsoft, so it's highly likely that AMD's approach to standardized ray-tracing will have more takers than NVIDIA's RTX ecosystem, which tops up DXR feature-sets with its own RTX feature-set.

Variable-rate shading is another key feature that has been missing on AMD GPUs. The feature allows a graphics application to apply different rates of shading detail to different areas of the 3D scene being rendered, to conserve system resources. NVIDIA and Intel already implement VRS tier-1 standardized by Microsoft, and NVIDIA "Turing" goes a step further in supporting even VRS tier-2. AMD didn't detail its VRS tier support.
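As a rough illustration of why variable-rate shading conserves resources, the sketch below counts how many pixel-shader invocations a frame needs when different screen tiles use different shading rates. This is a simplified model and not the DirectX 12 VRS API: the 16-pixel tile size, the `shaded_samples` helper, and the "full rate in the centre, 2x2 elsewhere" policy are all assumptions made for the example.

```python
from math import ceil


def shaded_samples(width, height, tile, rate_for_tile):
    """Count shader invocations when each tile is shaded at its own coarse rate.

    rate_for_tile(x, y) returns (rx, ry): one shader result is shared by a
    block of rx * ry pixels inside the tile whose top-left corner is (x, y).
    """
    total = 0
    for ty in range(0, height, tile):
        for tx in range(0, width, tile):
            w = min(tile, width - tx)    # clip tiles at the right edge
            h = min(tile, height - ty)   # clip tiles at the bottom edge
            rx, ry = rate_for_tile(tx, ty)
            total += ceil(w / rx) * ceil(h / ry)
    return total


def centre_weighted(width, height):
    """Hypothetical policy: full rate in the middle half of the screen, 2x2 elsewhere."""
    def rate(x, y):
        in_centre = (width / 4 <= x < 3 * width / 4
                     and height / 4 <= y < 3 * height / 4)
        return (1, 1) if in_centre else (2, 2)
    return rate


W, H, TILE = 1920, 1080, 16  # tile size is an assumption, not a spec value
full = shaded_samples(W, H, TILE, lambda x, y: (1, 1))
mixed = shaded_samples(W, H, TILE, centre_weighted(W, H))
print(f"full rate: {full} invocations, mixed rate: {mixed} ({mixed / full:.0%})")
```

The point is only that coarser rates in less important regions cut shading work roughly in proportion to the block size; how an actual engine picks those regions is outside the scope of this sketch.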

AMD hopes to deploy RDNA2 on everything from desktop discrete client graphics, to professional graphics for creators, to mobile (notebook/tablet) graphics, and lastly cloud graphics (for cloud-based gaming platforms such as Stadia). Its biggest takers, however, will be the next-generation Xbox and PlayStation game consoles, which will also shepherd game developers toward standardized ray-tracing and VRS implementations.

AMD also briefly touched upon the next-generation RDNA3 graphics architecture without revealing any features. All we know about RDNA3 for now is that it will leverage a process node more advanced than 7 nm (likely 6 nm or 5 nm, AMD won't say), and that it will come out sometime between 2021 and 2022. RDNA2 will extensively power AMD client graphics products over the next 5-6 calendar quarters, at least.

306 Comments on AMD RDNA2 Graphics Architecture Detailed, Offers +50% Perf-per-Watt over RDNA

2X is the generational code name for all consumer-oriented, non-semi-custom RDNA 2 silicon, with each piece of silicon then having a distinct second digit. End of discussion.

...and by "everyone's" I mean everyone in this thread but me.

Vega's architecture was also supposed to bring 4X the performance per watt of the previous generation.

But I hope this time it’s what AMD says.

Anyway, I can't wait to see these on the market and see AMD catch up on performance per watt with the 12 nm Turing parts. They put some special sauce in the 5600 XT which put it on par with Nvidia, so this should be interesting, as will an apples-to-apples comparison with Ampere and its increase in efficiency per watt along with the shrink to 7 nm... I bet NV still holds that lead...

AMD's GPU department has a long-standing tradition of over-promising and under-delivering, unfortunately.

This was from a fake April Fools' leak. Come on, just how low will you fanboys go?

wccftech.com/vega-teaser-slides-leak-nda/

Don't worry, someone will take this video as an official AMD video too. (Don't click)

GCN (5th gen) used SIMD16, which means it issues an instruction every 4(??) cycles, whereas RDNA issues one every cycle. This inherently makes a 40 CU (RX 5700 XT) cluster faster than the previous 64 CU cards (Vega 64/Radeon VII).
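For what it's worth, the cycle counts fall out of simple arithmetic: a wavefront has to be fed through the SIMD lanes, so a 64-wide wave on a 16-lane SIMD takes four passes, while a 32-wide wave on a 32-lane SIMD takes one. A minimal Python sketch of that (the helper name `issue_cycles` is made up, and this ignores latency hiding, occupancy, clocks, and everything else that decides real-world performance):

```python
from math import ceil


def issue_cycles(wave_size, simd_width):
    """Cycles needed to push one wavefront instruction through a SIMD unit."""
    return ceil(wave_size / simd_width)


gcn_wave64 = issue_cycles(wave_size=64, simd_width=16)    # GCN: wave64 on SIMD16
rdna_wave32 = issue_cycles(wave_size=32, simd_width=32)   # RDNA: wave32 on SIMD32
rdna_wave64 = issue_cycles(wave_size=64, simd_width=32)   # RDNA running in wave64 mode

print(f"GCN wave64 on SIMD16:  {gcn_wave64} cycles per instruction")
print(f"RDNA wave32 on SIMD32: {rdna_wave32} cycle per instruction")
print(f"RDNA wave64 on SIMD32: {rdna_wave64} cycles per instruction")
```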

Depending on what you're trying to achieve (raw core performance vs. optimized IPC), GCN5 can still compete well against its younger sibling. RDNA can do everything GCN5 can do, however, except beat it in raw compute workloads.

As for the fanboy part, you're wrong, but you're entitled to your opinion.