Tuesday, October 20th 2020

AMD Radeon RX 6000 Series "Big Navi" GPU Features 320 W TGP, 16 Gbps GDDR6 Memory

AMD is preparing to launch its Radeon RX 6000 series of graphics cards, codenamed "Big Navi", and leaks about the upcoming cards keep coming. Set for an October 28th launch, the Big Navi GPU is based on the Navi 21 revision, which comes in two variants. Citing his sources, Igor Wallossek of Igor's Lab has published new information about the upcoming graphics cards. More specifically, there are more details about the Total Graphics Power (TGP) of the cards and how it is distributed across the board (pun intended). To clarify, TDP (Thermal Design Power) is a measurement that applies only to the chip, or die, of the GPU and how much thermal headroom it has; it doesn't measure the power of the whole graphics card, as there are other heat-producing components on the board.

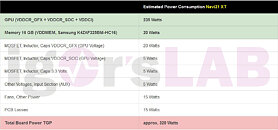

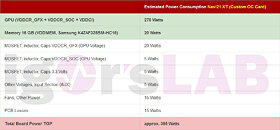

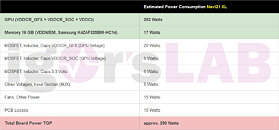

The breakdown of the Navi 21 XT graphics card goes as follows: 235 Watts for the GPU alone, 20 Watts for Samsung's 16 Gbps GDDR6 memory, 35 Watts for voltage regulation (MOSFETs, inductors, caps), 15 Watts for fans and other components, and 15 Watts lost in the PCB. This puts the combined TGP at 320 Watts, showing just how much power is used by the non-GPU elements. For custom OC AIB cards, the TGP is boosted to 355 Watts, as the GPU alone is using 270 Watts. When it comes to the Navi 21 XL GPU variant, the cards based on it are using 290 Watts of TGP, as the GPU sees a reduction to 203 Watts, and GDDR6 memory uses 17 Watts. The non-GPU components found on the board use the same amount of power.

When it comes to the selection of memory, AMD uses Samsung's 16 Gbps GDDR6 modules (K4ZAF325BM-HC16). The bundle AMD ships to its AIBs contains 16 GB of this memory paired with the GPU core; however, AIBs are free to use different memory if they want to, as long as it is a 16 Gbps module. The tables show the TGP breakdown of each card.
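The XT figures quoted above can be checked with a few lines of Python. This is only a sketch: the dictionary keys and function names are invented for illustration, and the OC entry assumes the non-GPU figures stay the same as on the reference XT card, which is what the quoted 355 Watt total implies.

```python
# Per-component power in watts, as quoted in the article for Navi 21 XT.
# Key names are illustrative, not taken from Igor's Lab tables.
NAVI21_XT = {
    "gpu": 235,
    "memory": 20,
    "voltage_regulation": 35,
    "fans_and_other": 15,
    "pcb_losses": 15,
}

# Assumption: the custom OC card only raises the GPU figure to 270 W.
NAVI21_XT_OC = {**NAVI21_XT, "gpu": 270}


def total_graphics_power(components):
    """Sum every component to get the card-level TGP in watts."""
    return sum(components.values())


def non_gpu_share(components):
    """Fraction of the TGP that is consumed outside the GPU die."""
    total = total_graphics_power(components)
    return (total - components["gpu"]) / total


print(total_graphics_power(NAVI21_XT))     # 320
print(total_graphics_power(NAVI21_XT_OC))  # 355
print(f"{non_gpu_share(NAVI21_XT):.1%} of the XT's TGP is non-GPU")
```

On the reference XT card, 85 of the 320 Watts go to memory, voltage regulation, fans and board losses rather than to the GPU itself.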

Sources:

Igor's Lab, via VideoCardz


153 Comments on AMD Radeon RX 6000 Series "Big Navi" GPU Features 320 W TGP, 16 Gbps GDDR6 Memory

For me the biggest win is silence. I want a quiet rig above everything else really. I play music and games over speakers. Noticeable fan noise from the case is the most annoying immersion breaker - much more so than the loss of single digit FPS. Is that worth buying a bigger GPU that I run at lower power? Probably, yes. It's that, or I can jump through a million hoops trying to dampen the noise coming out of the case... which also adds extra cost but doesn't offer the option of more performance should I want it. Because I haven't lost that when I buy a bigger GPU.

Why would AMD leave watts on the table if they can make their cards faster? These 320 watt cards exist because people are buying them. They even complain hard when they can't buy them because they are on back order.

If nobody was buying 250+ watt cards, AMD and Nvidia wouldn't produce them, it is as simple as that. That just shows how little people really care about power consumption in general.

The good thing is there will also be 200 watt GPUs with a very good performance increase for people who want a GPU that consumes less power while still having better performance than the current gen.

But if people want to get the highest performance possible no matter the cost, why would AMD and Nvidia hold back?

If they could sell a 1000 W card with twice the performance the way they sell the 3080, they would certainly do it.

Any proof of this?

I mean if that is true, my PSU would just die right now with my current setup. But hey, it's still running strong and rock stable.

That is a myth, or maybe true for cheap PSUs with bad components, but it's certainly not true for good PSUs.

I mean warranty period + 7 years they probably add 20% on this as well. Not just 7.

I think 10% per year is a pretty "worst case" scenario. That may be true for cheap PSUs with even cheaper caps running at 24/7 full load in a 50°C environment....

I think 10% loss per year is a safe bet for a worst-case scenario, but there are plenty of people and independent tests proving that decade-old PSUs are still capable of delivering all or nearly all of their rated power. PSU components are overprovisioned when new so that as the capacitors and other components wear out, they are still up to the rated specification during the warranty period. I forget where I read it, but I seem to recall a review of a decade-old OCZ 700W supply that had been in nearly 24/7 operation, yet it still hit the rated specs without any problems. The temperature it ran at was much higher (but still in spec) and the ripple was worse than when it was new (but still in spec), and it shut down when tested at 120% load, something it managed to cope with when new.

I would not be using a decade-old PSU for a new build with high-end parts, but at the same time I would expect a new 750W PSU to still deliver 750W in 7 years from now.

On the other hand, I'd be happy if more AIBs (other than EVGA) adopted the idea of AIO watercooled graphics cards, especially with Ampere and RDNA2. It's not only good for these hungry GPUs, but using the radiator as exhaust helps keep other components cool as well.

Actual perf/watt might be well off the claimed figure due to this uncertainty.

That said, when the new AMD card is released, it will be apples to apples if only across one title.

Vega as well is very efficient at certain clocks and voltages > until AMD decided to push Vega beyond its efficiency curve to compete with the 1080. Like a Ryzen 2700X > from 4 GHz and above you need more and more voltage to clock higher, until the extra voltage needed for just a few more MHz is out of all proportion. Makes no sense.

Here's an old screenie of my RX580 running at 300 watts power consumption. Really, if your hardware is capable of it, it shouldn't cause issues. I'm running with Vsync anyway, capped at 70Hz. It's not using 300W sustained here, more like 160 to 190 Watts in gaming. Same goes for AMD cards. Their envelope is set at up to xxx watts, and you can play / tweak / tune it if desired.

We have an 18k BTU mini-split that runs in our 1300 sq. ft. garage virtually 24/7. The 3-5 PCs in the home that run at any given moment are NOTHING compared to that.

But for desktops etc. I don't care, since more power often means more performance, and high power is only drawn during high load, which is only minutes when working or 2h a night when gaming...

Currently, RDNA (and GCN) split instructions into two categories: Scalar, and Vector. "Scalar" instructions handle branching and looping for the most part (booleans are often a Scalar 64-bit or 32-bit value), while "vector" instructions are replicated across 32 (64 on GCN) copies of the program.

Still, some games are going to cook people while gaming. Warm winter perhaps. Hopefully that lotto ticket's not as shit as all my last ones.

All of these GPUs idle at levels we can pretty much ignore.

Even the 14W idle of RX Vega is 0.0042€ per hour. It only ramps up to max power if you give it a game, or other load, that requires that kind of power draw. Cap your framerate to lower values (especially if you have VSync / GSync), etc. etc.
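To put numbers on that: 0.0042€ per hour at 14W works out to an electricity price of about 0.30€ per kWh. That price is back-computed from the figures above, not something stated in the thread, and the function name below is just for illustration. A rough Python sketch:

```python
# Assumption: price implied by the comment's figures (0.0042 EUR/h at 14 W).
IMPLIED_PRICE_EUR_PER_KWH = 0.30


def hourly_cost_eur(power_watts, price_eur_per_kwh=IMPLIED_PRICE_EUR_PER_KWH):
    """Cost of running a constant load for one hour."""
    kilowatts = power_watts / 1000
    return kilowatts * price_eur_per_kwh


idle_cost = hourly_cost_eur(14)    # RX Vega idle figure from the comment
load_cost = hourly_cost_eur(320)   # Navi 21 XT TGP from the article

print(f"idle: {idle_cost:.4f} EUR/h")
print(f"320 W load: {load_cost:.3f} EUR/h")
```

At the same assumed price, a card pulling the full 320W costs a little under 0.10€ per hour of gaming.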

On the other hand, I don't think most people even give the power consumption of their computers a thought. But... it's not like these things are running full tilt all the time. If you really cared about power, there's plenty of things you can do right now, today, with your current GPU to reduce power consumption.

Whether you run it at maximum power, or minimum power, is up to you. Laptop chips, such as the Laptop RTX 2070 Super, are effectively underclocked versions of the desktop chip. The same thing, just running at lower power (and greater energy efficiency) for portability reasons. Similarly, a mini-PC user may have a harder time cooling down their computer, or maybe a silent-build wants to reduce the fan noise.

A wider GPU (ex: 3090) will still provide more power-efficiency than a narrower GPU (ex: 3070), even if you downclock a 3090 to 3080 or 3070 levels. More performance at the same levels of power, that's the main benefit of "more silicon".

--------

Power consumption is something like the voltage-cubed (!!!). If you reduce voltage by 10%, you get something like 30% less power draw. Dropping 10% of your voltage causes a 10% loss of frequency, but your power usage drops by a far greater amount.
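Here's a quick sketch of that rule of thumb in Python. It assumes dynamic power goes as voltage squared times frequency and that frequency scales linearly with voltage, which is where the cube comes from. Real silicon has leakage and a voltage floor, so treat it as a rough model, and the function names are mine.

```python
def relative_power(voltage_scale):
    """Power relative to stock, assuming P ~ V^2 * f and f ~ V, so P ~ V^3."""
    return voltage_scale ** 3


def relative_perf_per_watt(voltage_scale):
    """Performance (taken as ~ frequency ~ voltage) divided by relative power."""
    return voltage_scale / relative_power(voltage_scale)


for scale in (1.0, 0.9, 0.8):
    power = relative_power(scale)
    print(
        f"voltage x{scale:.1f}: power x{power:.3f} "
        f"({1 - power:.0%} less), perf/watt x{relative_perf_per_watt(scale):.2f}"
    )
```

With a 10% undervolt the model gives about 27% less power for 10% less clock, so roughly the "30%" quoted above.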