Wednesday, December 9th 2020

NVIDIA Updates Cyberpunk 2077, Minecraft RTX, and 4 More Games with DLSS

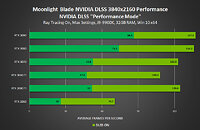

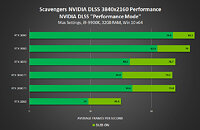

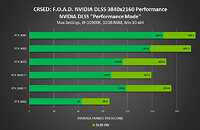

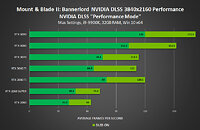

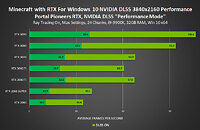

NVIDIA's Deep Learning Super Sampling (DLSS) technology renders games at a lower internal resolution and uses AI running on the Tensor Cores to upscale the result. Tensor Cores are dedicated AI processors present on all GeForce RTX cards, including the prior Turing generation and now Ampere. NVIDIA promises that DLSS can deliver a performance boost of up to 40%, or even more. Today, the company announced that DLSS is getting support in Cyberpunk 2077, Minecraft RTX, Mount & Blade II: Bannerlord, CRSED: F.O.A.D., Scavengers, and Moonlight Blade. These additions bring NVIDIA's DLSS technology to a total of 32 titles, which is no small feat for a new technology.

Below, you can see the company-provided charts showing DLSS performance in the new titles, except for Cyberpunk 2077.

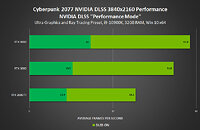

Update: The Cyberpunk 2077 performance numbers were leaked (thanks to kayjay010101 on TechPowerUp Forums), and you can check them out as well.

52 Comments on NVIDIA Updates Cyberpunk 2077, Minecraft RTX, and 4 More Games with DLSS

But don't get me wrong, I think DLSS is a great feature to have on hand if you have an underpowered GPU for the latest and greatest games, allowing you to run, for example, 1440p High with DLSS instead of native 1080p. In that situation, 1440p DLSS will look a lot better than running native 1080p on a 1440p monitor. It's also great if you want more fps at the same resolution, but please don't spread the word that DLSS is the holy grail of game graphics, because it's not. A well-developed game will always look better at native resolution than with any upscaling method.

We are in the infancy of RT. I think it's the future, but right now we don't have the GPU power to make it viable at decent framerates without DLSS, and sometimes even with DLSS enabled the performance is not there. We need devs to make clever use of RT too, because in several games the effects are so subtle and the performance hit so high that it's only included to tick a box in the game's specs.

Free FPS with no perceptible IQ loss is kind of awesome.

4K with DLSS is a 4K 2D output with a scaled/stretched 1440p (I believe) 3D render.

It's like loading a 1080p video on a 4K screen: sure, all the pixels are lit up and in use, but some are just using repeated information. The key to DLSS is that it's tweaked to look better than typical examples of this.
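To put the "lower-resolution 3D render, 4K output" idea in numbers, here is a minimal sketch that computes the internal render resolution and the share of output pixels actually rendered each frame. It assumes the commonly cited DLSS 2.0 per-axis scale factors (2/3 for Quality, 1/2 for Performance, 1/3 for Ultra Performance); the helper names `internal_resolution` and `shaded_fraction` are hypothetical, not part of any NVIDIA API. Frame rate does not scale linearly with pixel count, so treat the percentages as a rough indication of where the savings come from, not a performance prediction.

```python
from fractions import Fraction

# Assumed per-axis render scale for DLSS 2.0 quality modes (commonly cited values).
DLSS_SCALE = {
    "quality": Fraction(2, 3),
    "performance": Fraction(1, 2),
    "ultra_performance": Fraction(1, 3),
}


def internal_resolution(out_w, out_h, scale):
    """Hypothetical helper: (width, height) of the 3D render before upscaling."""
    return round(out_w * scale), round(out_h * scale)


def shaded_fraction(out_w, out_h, scale):
    """Hypothetical helper: fraction of output pixels actually rendered per frame."""
    w, h = internal_resolution(out_w, out_h, scale)
    return (w * h) / (out_w * out_h)


if __name__ == "__main__":
    # Internal render sizes for a 3840x2160 (4K) output.
    for mode, s in DLSS_SCALE.items():
        w, h = internal_resolution(3840, 2160, s)
        print(f"{mode}: {w}x{h} ({shaded_fraction(3840, 2160, s):.1%} of 4K pixels)")
```

Under these assumptions, 4K Quality mode renders at 2560x1440, roughly 44% of the output pixels, which lines up with the 1440p guess above.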

I'm all for this tech, as it allows us to have a good desktop resolution without the gaming performance hit... but it'll never be quite as good as native resolution (we seem to agree on that).

Some people may well like DLSS and say it looks better depending on what they see. Some people want 32x TAA so not a single jaggy is in sight, while others are offended by the blurry edges that would cause.

In any case, Cyberpunk just revealed how underpowered the current generation of GPUs is for the purpose of utilizing multiple ray tracing techniques at the same time. Previous AAA RT titles were mostly confined to using RT for either shadows, lighting or reflections, and therefore the performance penalty was still acceptable. So ultimately, gamers will still need to decide whether they want to play Cyberpunk at higher resolution and FPS or with RT on.

- Native Res without any AA: jaggies, which can be quite distracting in some games even on high-PPI screens.
- Native Res with MSAA: highest IQ but low FPS.
- Native Res with TAA: no jaggies but lower IQ.
- Native Res with DLSS: IQ between TAA and MSAA with a massive performance improvement, though there are occasional visual anomalies.

With the performance boost that comes with DLSS 2.0, though, just enable it in any game that supports it. Not using it is like not using Autopilot when you drive a Tesla :D.

Pick what pleases you... but seeing all these games barely manage 60 FPS is laughable. I want my 144+, dammit.

Well, nothing's stopping you from breaking the game graphics to get your dose of 144+, though. Try DLSS Ultra Performance :roll: with everything on Low.

I think the technical and performance advantage of DLSS is apparent. The problem is implementation. I'm hoping this will go the G-Sync way, with a widely available version broadly applied. Because again, we can explain this in two ways... either Nvidia is doing it right but only for itself, or AMD is late to the party. It's clear as day we want this everywhere, and if both red and green are serious about RT, they will NEED it.

Yep.... I'm scared tbh :p But it seems like we can still squeeze acceptable perf from it. Only just.

And perhaps you legitimately should just lower the resolution to 1440p.... ;)

It is the screenshot that is used to illustrate how good DLSS is... Good to know it's "not the same screenshot" once the issues TAA is known for pop up... :D

Plus, can you even tell the difference with that 40" 1080p? Or does everything look like a Vaseline smear? ;)

The weird thing is AMD isn't THAT late to the party. You can absolutely use super resolution and then Radeon Image Sharpening to get almost the same effect; it's just not marketed at all, and not packaged up nicely with a toggle like DLSS. The one advantage it does have is that you don't need to upscale from 1440p: you can drop to something like 1800p, GPU-upscale and sharpen for an easy 15% fps boost with minimal quality loss.

DLSS is awesome right now. I'm going to be playing some Cyberpunk on my 4K TV, and there is no way the 2080 Ti would make it a fun time without DLSS even with normal rasterization, and upgrading the card is not even an option since there are no cards to buy lol.

I don't care what the pics are used for in anyone's mind; all I see is two shots that are not identical. It's just a frame with different content, or taken from a slightly adjusted angle, or a millisecond earlier or later. You can see this because the position of many objects is different.

At that point all bets are off when looking at still imagery. But we can still see at first glance (!) that the image is virtually identical, to a degree that you need to actually pause and look carefully to find differences.

You're quite the expert at making mountains out of mole hills aren't you?

Basically, DLSS 2.0 preserves all the details but gets rid of all the shimmering caused by aliased edges (grass, rocks, buildings, etc.), while boosting performance by 25%.

Youtube compression made it pretty hard to tell the IQ difference though :roll:

And, dear god, that green reality distortion field is some scary shit.

Better IQ + 40% perf uplift: Cyberpunk 2077 really punishes non-RTX owners with its DLSS implementation there :roll:

Again, if you just compare performance at 1080p but then say that 1080p DLSS'ed up to 4K looks better, that is fine; that is still a fair comparison.

I mean, it's not even the same. DLSS introduces visual artifacts, dithering, etc. It's not the same quality as just running at that actual resolution; it's a mitigating technique to make up for how badly RT impacts performance.

In the future, with much more advanced GPUs, nobody is going to run DLSS.

As I have said many times now, playing at native resolution without AA looks pretty bad; the shimmering on objects is very noticeable when you are moving. TAA is an acceptable form of AA because it removes all those shimmering effects, while DLSS is an even better form of AA than TAA.

But then 1080p reconstructed/upscaled to 4K does not look as good as just running at actual 4K.

That is kinda the point: it's a bit like DSR or VSR, except less taxing because you don't actually run an "internal" res of 4K while being displayed on a 1080p screen, for example.

DLSS is a mitigating solution, one to deal with (read: make up for) the requirements of RT, but in the future, if hardware is fast enough, you would get much better image quality by running at native res with proper anti-aliasing (although that seems a bit of a thing of the past for some reason... where have my SMAA and SSAA gone? Why is it all this crappy post-processing nonsense?)

1. Oh god cyberpunk is a resource whore.

2. DLSS is very comparable to TAA in user experience... it helps with jaggies (vs the actual res and upscaling) but softens a lot of things too much vs native res. It's not a bad thing to have as an option, but it is bad when a game needs it... I barely get 60 FPS with DLSS on an absolute beast of a system.