Thursday, March 31st 2022

GPU Hardware Encoders Benchmarked on AMD RDNA2 and NVIDIA Turing Architectures

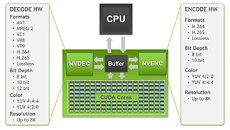

Encoding video is one of the significant tasks that modern hardware performs. Today, we have some data showing how good the GPU hardware encoders from AMD and NVIDIA are. Thanks to the tech site Chips and Cheese, we have information about AMD's Video Core Next (VCN) encoder found in RDNA2 GPUs and NVIDIA's NVENC (short for NVIDIA Encoder). The site benchmarked AMD's Radeon RX 6900 XT and NVIDIA's GeForce RTX 2060. The AMD card features VCN 3.0, while the NVIDIA Turing card features a 6th generation NVENC design. Team red is represented by its latest design, whereas a newer 7th generation of NVENC exists. C&C tested these two cards because they are all the hardware the reviewer has on hand.

The quality metric used was Video Multimethod Assessment Fusion (VMAF), developed by Netflix. In addition to the hardware encoders, the site also tested software encoding with libx264, a software library used for encoding video streams into the H.264/MPEG-4 AVC compression format. The libx264 encodes ran on an AMD Ryzen 9 3950X. Benchmark runs included streaming, recording, and transcoding in Overwatch and Elder Scrolls Online. Below, you can find benchmarks of streaming, recording, transcoding, and transcoding speed.

[Charts: Streaming, Recording, Transcoding, Transcoding Speed]

For a more detailed comparison of VCN and NVENC output visuals, please check out the Chips and Cheese website.

Source: Chips and Cheese

20 Comments on GPU Hardware Encoders Benchmarked on AMD RDNA2 and NVIDIA Turing Architectures

whereas, NVENC (page 41 whitepaper) I had to look and it seems the reviewer knew what they were doing. :)

Instead, all AMD is focused on is DLSS and making a new spin on TAA that they can brand as theirs. Hey, AMD. Wake up and make a copy of nvenc with your own branding. Call it freenc. Whatever. Help me not be hostage to nvidia.

Wait, Wut?

6900 XT doesn't seem to care about Mbps? It just always performs at 45 fps? How strange.

VMAF is the "quality" of the transcoding. How many compression artifacts there are, how much like the "original" it all looks like. As you may (or may not) know, modern "compression" is largely about deleting data that humans "probably" won't see or notice if the data was missing. So a modern encoder is about finding all of these bits of the picture that can be deleted.

VMAF is an automatic tool that tries to simulate human vision. At 100, it says that humans won't be able to tell the difference. At 0, everyone will immediately notice.

--------

The other graphs at play here are speed.

Software / CPU has the highest VMAF, especially at "very slow" settings.

NVidia is the 2nd best.

AMD is 3rd / the worst at VMAF.

-------

Software / CPU is the slowest.

AMD is fast at high Mbps. NVidia is fast at low Mbps.

I think I need to look on a bigger screen. Thanks for the info, I'm not that clued up.

The original chips-and-cheese website has the actual pictures from the tests if you wanna see the difference.

Smaller, niche websites don't have as much hardware to do a "proper apples to apples test".

I'm glad that they have the time to do a test at all. But yeah, AMD 6900 xt vs NVidia 1080 or 2060 is a bit of a funny comparison.

The other main thing AMD needs to focus much more on is the content creation side of things, not just video editing/processing but also accelerated 3D rendering. NV is doing so well here that it's a no-brainer for any content creator to use NV solutions. AMD tried to dive into this market but it's still far from what NV has achieved. And now Apple is entering the game very strongly with their own silicon.

Just see how Blender & V-Ray render using NV OptiX. It can accelerate using all the resources possible, especially the RT cores to accelerate RT rendering, and they have been doing it almost since RTX launched. AMD just added support for only RDNA2 in Blender 3.0, still without RT acceleration, and nothing else in other major 3D renderers like V-Ray.

www.tomshardware.com/reviews/video-transcoding-amd-app-nvidia-cuda-intel-quicksync,2839.html

pretty sad when sandy bridge's igpu (first gen QS) beat them.

However, for most uses, just some gamerz on Twitch, anything is fine. Professionals will want software encoding as usual until a miracle happens!

Where excellent is mapped to 100, and bad is mapped to 0.

At around ~85 there is a perceivable loss in quality, but it's generally not annoying.

At around ~75 it starts to become annoying.

And 60 or below is pretty terrible, but could still be acceptable in terms of filesize to quality ratios.

Generally one would aim somewhere between 92-95 as a good target to produce videos with a decent amount of compression that are generally smaller than the original. Though no one would use x264 for that today, it's old and useless. VP9 or x265 at a minimum, both of which are less sensitive to thread count.
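The rough score bands described in this comment can be sketched as a small lookup. This is only an illustration of the commenter's rule of thumb: the function names `describe_vmaf` and `in_target_range` are hypothetical, the exact cut-offs are an assumption (the comment only gives approximate values such as "~85" and "~75"), and the label for scores above 85 is my own wording.

```python
def describe_vmaf(score: float) -> str:
    """Map a VMAF score (0-100) to a rough, subjective quality label.

    The cut-offs follow the approximate values from the comment above;
    treating them as hard boundaries is an assumption for illustration.
    """
    if not 0.0 <= score <= 100.0:
        raise ValueError("VMAF scores are expected to lie between 0 and 100")
    if score <= 60:
        return "pretty terrible"
    if score <= 75:
        return "annoying"
    if score <= 85:
        return "perceivable loss, not annoying"
    return "little visible loss"  # label assumed, not from the comment


def in_target_range(score: float, low: float = 92.0, high: float = 95.0) -> bool:
    """Check whether a score falls in the 92-95 target suggested above."""
    return low <= score <= high


for s in (58, 75, 85, 93.5):
    print(s, "->", describe_vmaf(s), "| in target:", in_target_range(s))
```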

You can still quite easily notice a difference even at higher score values, like >98.x. Whether that noticeable difference matters or not is rather subjective.

They also do not report all the scores and metrics VMAF has. The "score" is just the mean value. Min, max, mean, and harmonic mean are the values you generally get out. I find all of them more important than the mean alone in most cases, since the difference between them can be rather large. See this example of the output you get:

I did test NVENC encoding vs CPU encoding in PowerDirector at the start, but I had such a small sample size that I decided to wait until later. If anything, GPU encodes consistently averaged higher scores in the 5 test videos I used at the time, but further testing is needed.

Work on said scripts is halted for now while I learn Python, so I can better produce a cross-platform tool for measurements and crunching and all the other fancy stuff I wanted to do with it.

Other notes I found so far:

Most video files that many modern games have these days are way too large for their own good.

For those who like anime: not a single fansub group is able to produce reasonable quality-to-filesize videos (they are ~80% larger than they should be). Exceptions are some of the few x265 re-encoders out there.

VP9 is super nice to use compared to x265, though x265 is nice and speedy, and x264 is just laughable today in how horrible it is.

If you use VP9, then enabling tiling and throwing extra threads at it for higher encoding speeds does not impact quality at all.

Here are some of the later results I had, and that is indeed a file ending up as 15% of the original size with a metric of 94 for both mean and harmonic mean. Though as one can see, the minimum values are starting to drop, and that can be noticeable.
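To make the point about mean versus minimum concrete, here is a minimal sketch of pooling per-frame scores into the four statistics mentioned earlier in the thread. The helper name `pool_scores` and the sample scores are made up for illustration, and the plain harmonic mean from Python's `statistics` module is used here; a VMAF tool's own pooling may be computed slightly differently.

```python
from statistics import fmean, harmonic_mean
from typing import Dict, Iterable


def pool_scores(frame_scores: Iterable[float]) -> Dict[str, float]:
    """Pool per-frame quality scores into min, max, mean and harmonic mean."""
    scores = list(frame_scores)
    if not scores:
        raise ValueError("need at least one frame score")
    return {
        "min": min(scores),
        "max": max(scores),
        "mean": fmean(scores),
        # The harmonic mean is pulled down by low outliers more than the
        # arithmetic mean, so a gap between the two hints at bad frames.
        "harmonic_mean": harmonic_mean(scores),
    }


# Hypothetical per-frame scores: mostly good, with one poor frame.
example = [96.0, 95.5, 94.0, 97.0, 71.0]
for name, value in pool_scores(example).items():
    print(f"{name}: {value:.2f}")
```

A clip like this keeps a respectable mean while its minimum sits in the "annoying" range, which is the kind of drop a single averaged score hides.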

So many things today are just made with static bitrate values with no concern for filesize and because of that, a lot of videos are rather bloated today. Which was why I started to play around with this to start with a few months back. Because I was curious how bloated (or non-bloated) things were.

The review here seems to suggest otherwise?

But to get there, you need to run the CPU encoder under 'very slow' settings. For streaming, that's too slow to be useful. So they used 'very fast' settings (worse quality) for the streaming test.
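As a rough illustration of the preset trade-off, the sketch below builds (but does not run) an ffmpeg command line for libx264 at a given preset and target bitrate. The article does not give the exact commands Chips and Cheese used, so the helper name `x264_command`, the file names, and the 6 Mbps bitrate are all assumptions; note that x264 spells the presets as `veryslow` and `veryfast`.

```python
from typing import List

# Preset names accepted by x264, from fastest to slowest.
X264_PRESETS = (
    "ultrafast", "superfast", "veryfast", "faster", "fast",
    "medium", "slow", "slower", "veryslow", "placebo",
)


def x264_command(src: str, dst: str, preset: str, mbps: int) -> List[str]:
    """Build an ffmpeg argument list for a libx264 encode (not executed here)."""
    if preset not in X264_PRESETS:
        raise ValueError(f"unknown x264 preset: {preset!r}")
    if mbps <= 0:
        raise ValueError("bitrate must be positive")
    return [
        "ffmpeg", "-i", src,
        "-c:v", "libx264",      # software H.264 encoder
        "-preset", preset,      # slower presets trade speed for quality
        "-b:v", f"{mbps}M",     # target video bitrate in Mbps
        dst,
    ]


# Hypothetical file names and bitrate, for illustration only.
print(" ".join(x264_command("gameplay.mkv", "stream.mp4", "veryfast", 6)))
print(" ".join(x264_command("gameplay.mkv", "archive.mp4", "veryslow", 6)))
```

The resulting list can be passed to `subprocess.run` on a machine with an ffmpeg build that includes libx264.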