Tuesday, August 18th 2020

Apple A14X Bionic Rumored To Match Intel Core i9-9880H

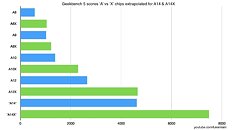

The Apple A14X Bionic is an upcoming processor from Apple which is expected to feature in the upcoming iPad Pro models and should be manufactured on TSMC's 5 nm node. Tech YouTuber Luke Miani has recently provided a performance graph for the A14X chip based on "leaked/suspected A14 info + average performance gains from previous X chips". In these graphs, the Apple A14X can be seen matching the Intel Core i9-9880H in Geekbench 5 with a score of 7480. The Intel Intel Core i9-9880H is a 45 W eight-core mobile CPU found in high-end notebooks such as the 2019 16-inch MacBook Pro and requires significant cooling to keep thermals under control.

If these performance estimates are correct or even close then Apple will have a serious productivity device and will serve as a strong basis for Apple's transition to custom CPU's for it's MacBook's in 2021. Apple may use a custom version of the A14X with slightly higher clocks in their upcoming ARM MacBooks according to Luke Miani. These results are estimations at best so take them with a pinch of salt until Apple officially unveils the chip.

Source:

@LukeMiani

If these performance estimates are correct or even close then Apple will have a serious productivity device and will serve as a strong basis for Apple's transition to custom CPU's for it's MacBook's in 2021. Apple may use a custom version of the A14X with slightly higher clocks in their upcoming ARM MacBooks according to Luke Miani. These results are estimations at best so take them with a pinch of salt until Apple officially unveils the chip.

85 Comments on Apple A14X Bionic Rumored To Match Intel Core i9-9880H

We hardware nerds love to pretend that we're running our systems at high utilization with high efficiency, as if we were bitcoin miners or Fold@Home geeks all the time. But that's just not the reality of the day-to-day. Even programming at work has started to get offloaded to dedicated "build servers" and continuous integration facilities, off of the desktop / workstation at my workdesk.

Browsing HTML documentation for programming is hugely important, and a lot of that is "TURBO race to idle" kind of workloads. The chip idles, then suddenly the HTML5 DOM shows up, maybe with a bit of Javascript to run before reaching its final form. But take this webpage for instance: This forum page is ~18.8 KBs HTML5, which is small enough to fit inside the L1 cache of all of our machines.

That's the stuff Geekbench is measuring: opening PDF documents, browsing HTML5, parsing DOM, interpreting JPEG images. It seems somewhat realistic to me, with various internal webpages constantly open at my work computer and internal wikis I'm updating constantly.

---------

I don't even like Apple. I don't own a single Apple product. But you're going to have to explain the flaws of Geekbench if you really want to support your discussion points.

I also said it's my opinion sooo.

And my pc has said 80-100% load for years now, as I also said.

You know what gets hardly any abuse, my phone it's surfed on and fit's your skewed perspective.

Geek bench on it makes sense.

'PowerPC was fast'... it was even part used in a Playstation, yet today you don't see a single one in any gaming or consumer machine. In enterprise though? Yep. Its a tool that works best in that setting.

'ARM is fast'... correct. We have ThunderX chips that offer shitloads of cores and can use lots of RAM. They're not the ones we see in a phone though. We also have Apple's low-core-count, single-task optimized mobile chips. You won't see those in an enterprise environment. That's not 'ignoring it', it is separating A from B correctly.

Sustained means nothing in THE USE CASE Apple has selected for these chips. That is where all chips are going. More specialized. More specific to optimal performance in a desired setting. Even Intel's own range of CPUs, even in all those years they were 'sleeping' have pursued that goal. They are still re-using the same core design in a myriad of power envelopes and make it work top to bottom - in Enterprise, in laptop, and they've been trying to get there on mobile. The latter is the ONE AREA where they cannot seem to succeed, a bit similar to Nvidia's Tegra designs that are always somewhat too high power and perform well, but are too bulky after all to be as lean as ARM is under 5W. End result: Nvidia still didn't get traction with its ARM CPUs for any mobile device.

In the meantime, Apple sees Qualcomm and others develop chips towards the 'x86 route'. They get higher core counts, more and more hardware thrown at ever less efficient software, expanded functions. That is where the direction of ARM departs from Apple's overall strategy - they want optimized hard- and software systems. You seem to fail to make that distinction thinking Apple's ARM approach is 'The ARM approach'. Its not, the ISA is young enough to make fundamental design decisions.

Like @theoneandonlymrk said eloquently: philips head? Philips screwdriver.There you go and that is why I said, Apple is going to offer you terminals, not truly powerful devices. Intel laptop CPUs are not much different, very bursty and slow as shit under prolonged loads. I haven't seen a single one that doesn't throttle like mad after a few minutes. They do it decently... but sustained performance isn't really there.

I will underline this again

Apple found a way to use ARM to guarantee their intended user experience.

This is NOT a performance guarantee. Its an experience guarantee.

You need to place this in the perspective of how Apple phones didn't really have true multitasking while Android did. Apple manages its scheduler in such a way that it gets the performance when the user demands it. They path out what a user will be looking at and make sure they show him something that doesn't feel like waiting to load. A smooth animation (that takes almost a second), for example, is also a way to remove the perception of latency or lacking performance. CPU won't burst? Open the app and show an empty screen, fill data points later. Its nothing new. Websites do it too irrespective of architecture, especially the newer frameworks are full of this crap. Very low information density and large sections of plain colors are not just a design style, its a way to cater to mobile limitations.

If you use Apple devices for a while, take a long look at this and monitor load and you can see how it works pretty quickly. There is no magic sauce.

Not to mention that they don't just put large L1 caches, everything related to on chip memory is colossal in size. And again, everyone can do that, that's not the merit of an ARM design or not.

Lets take a look at a truly difficult benchmark. One that takes over 20 seconds so that "Turbo" isn't a major factor.

www.cs.utexas.edu/~bornholt/post/z3-iphone.html

Though 20 seconds is slow, the blogpost indicates that they continuously ran the 3-SAT solver over and over again, so the iPhone was behaving at its thermal limits. The Z3 solver tries to solve the 3-SAT NP Complete problem. At this point, it has been demonstrated that Apple's A12 has a faster L1, L2, and memory performance than even Intel's chips in a very difficult, single-threaded task.

----------

Apple's chip team has demonstrated that its small 5W chip is in fact pretty good at some very difficult benchmarks. It shouldn't be assumed that iPhones are slower anymore. They're within striking distance of Desktops in single-core performance in some of the densest compute problems.

Another great example of a waste of time.

A true, great benchmark , 20 seconds.

Single core, dense compute problems, lmfao.

Or are you unaware what "dense compute" means? HCPG is sparse compute (memory intensive), while Linpack is dense (cpu intensive). Z3 probably is in the middle, more dense than HCPG but not as dense as Linpack (I don't know for sure. Someone else may correct me on that).

--------

When comparing CPUs, its important to choose denser compute problems, or else you're just testing the memory interface (ex: STREAM benchmark is as sparse as you can get, and doesn't really test anything aside from your DDR4 clockrate). I'd assume Z3 satisfies the requirements of dense compute for the purposes of making a valid comparison between architectures. But if we go too dense, then GPUs win (which is also unrealistic. Linpack is too dense and doesn't match anyone's typical computer use. Heck, its too dense to be practical for supercomputers)

In the note's

"This benchmark is in the QF_BV fragment of SMT, so Z3 discharges it using bit-blasting and SAT solving.

This result holds up pretty well even if the benchmark runs in a loop 10 times—the iPhone can sustain this performance and doesn’t seem thermally limited.1 That said, the benchmark is still pretty short.

Several folks asked me if this is down to non-determinism—perhaps the solver takes different paths on the different platforms, due to use of random numbers or otherwise—but I checked fairly thoroughly using Z3’s verbose output and that doesn’t seem to be the case.

Both systems ran Z3 4.8.1, compiled by me using Clang with the same optimization settings. I also tested on the i7-7700K using Z3’s prebuilt binaries (which use GCC), but those were actually slower.

What’s going on?

How could this be possible? The i7-7700K is a desktop CPU; when running a single-threaded workload, it draws around 45 watts of power and clocks at 4.5 GHz. In contrast, the iPhone was unplugged, probably doesn’t draw 10% of that power, and runs (we believe) somewhere in the 2 GHz range. Indeed, after benchmarking I checked the iPhone’s battery usage report, which said Slack had used 4 times more energy than the Z3 app despite less time on screen.

Apple doesn’t expose enough information to understand Z3’s performance on the iPhone,

This result holds up pretty well even if the benchmark runs in a loop 10 times—the iPhone can sustain this performance and doesn’t seem thermally limited.1 That said, the benchmark is still pretty short.

He said prior it uses one core only on Apple, really leverage what's there eh, or the light load might sustain a boost better cos of that single core use but this leads to my point B.

He doesn't know how it's actually running on the apple, so can't know if it is leveraging accelerator's to hit that target.

Still 7700k verses A12 ,14nm+(only one plus not mine) verses 5Nm .

That's not telling anyone much about how the A14 would compare to a CPU out today not Eol, never mind the next generation Ryzen and Cove cores it would face.

All in fail.

Do I know what Z3 is, wtaf does it matter.

We are discussing CPU performance not , coder pawn.

I don't use it 99.9% of users also don't, I am aware of it though and aware of the fact it too is irrelevant like geek bench.

Z3 wasn't even made by Apple. Its a Microsoft Research AI project that happens to run really, really well on iPhones. (Open Source too. Feel free to point out where in the Github code this "accelerator chip" is being used. Hint: you won't find it, its a pure C++ project with some Python bits)The CPU performance on the IPhone is surprisingly good in Z3. I'd like to see you explain why this is the case. There's a whole bunch of other benchmarks too that the iPhone does well on. But unlike Geekbench, something like Z3 actually has coder-ethos as a premier AI project. So you're not going to be able to dismiss Z3 as easily as Geekbench.

Can you explain where I said I was an expert in your coding speciality to put my foot in my mouth!?

I'm aware of f£#@£g Bigfoot but do I know many facts on him?.

So getting back to the 9900k it's 10-20% better than the 7700k your on about now.

And the Cove and zen3 cores are a good 17% better again (partly alleged).

That's at least 30% on the 7700K and Intel especially work hard to optimise for some code type's.

And you are still at it with second timed benches.

If I do something on a computer that takes time to run but it's a one off and a few seconds, I wouldn't even count it as a workload, at all.

Possibly a step in a process, but not a workload.

I have a workload or two and they don't Finish in 20 seconds.

Recent changes to GPU architecture and boost algorithms puts most GPU benchmarks and some people's benchmarking in the same light to me, Now, tests have to be sustained for a few minutes minimum or they're not good enough for me.

I'm happy to just start calling each other names if you want but best pm the mods don't like it.

It's a waste of time to look at independent problems, because that's not what is found in the real world. We don't solve SMT, we don't run linear algebra all the time, most user software is mixed in terms of workloads and doesn't follow regular patterns such that caches are very effective.

And that's similar to my point , one 20sec workload is no workload at all.

And the comparisons are all over the show.

I would argue the performance of a 7700k is hard core irrelevant, few are buying quad cores to game on now, even Intel stopped pushing quads A12=(1core) 7700k. = 9900k = 11900(shrug) apparently, shrug.

[/QUOTE]

That's daft, point to someone other than you that's said arm is not fast.

That's exactly the point, some of us can use an iPad pro too, many have, and put it back down, hence the opinions,

You pointed to two short busrt benchmarks as your hidden unseen by plebs truth?!, one of which was so obscure you think you got one over on me, yeah 98% of nerds haven't ran that bench ffs, yeah guy you told me, you think I couldn't show a few benches with PC's beating iPhone chip's?

I wouldn't waste my time, I have said before I have no doubt these have their place and will sell well but they are not talking the performance crown and real work will stay on x86 or PowerPC.

A programmer working on FEA (Ex: simulated car crashes), Weather Modeling, or Neural Networks will constantly run large matrix-multiplication problems. Over, and over, and over again for days or months. In these cases, GPUs, and wide-SIMD (like 512-bit AVX on Intel or A64FX) will be a huge advantage. If GPUs are a major player, you still need a CPU with high I/O (ie: EPYC or POWER9 / OpenCAPI) to service the GPUs fast enough.

A programmer working on CPU-design will constantly run verification / RTL proofs, of which are coded very similarly to Z3 or other automated solvers. (And unlike matrix-multiplication, Z3 and other automated-logic code is highly divergent and irregular. Its very difficult to write multithreaded code and load-balance the work between multiple cores. There's a lot of effort in this area, but from my understanding, CPUs are still > GPUs in this field). Strangely enough, A12 is one of the best chips here, despite it being a tiny 5W processor.

A programmer working on web servers will run RAM-constrained benchmarks, like Redis or Postgresql. (And thus POWER9 / POWER10 will probably be the best chip: big L3 cache and huge RAM bandwidth).

--------

We have many computers to choose from. We should pick the computer that best matches our personal needs. Furthermore, looking at specific problems (like Z3 in this case), gives us an idea of why the Apple A12 performs the way it does. Clearly the large 128kB L1 cache plays to the A12's advantage in Z3.

I don't see it.

Damn the irony

"We have many computers to choose from. We should pick the computer that best matches our personal needs. "

Philips head=Philips screwy.

How many Devs are on this ? What proportion of computing device users do they make up 0.00021% is it mostly just a niche?

Wanna go back to discussing Geekbench Javascript / Web tests? That's surely the highest proportion of users. In fact, everyone browsing this forum is probably performing AES-encryption/decryption (for HTTPS), and HTML5 DOM rendering. Please explain to me how such an AES-Decryption + HTML5 DOM test is unreasonable or inaccurate.Your hyperbole is misleading. The A12 was 11% faster than the Intel in the said Z3 test. I'm discussing a situation (Z3) where the Apple chip on 5W in the iPhone form factor is outright beating a full sized 91W desktop processor.

Yes. Its a comparison between apples and peanuts (and ironically, Apple a much smaller peanut in this analogy). But Apple is soon to launch a laptop-class A14 chip. Some of us are trying to read the tea-leaves for what that means. The A14 is almost assuredly built on top of the same platform as the A12 chip. Learning what the future A14 laptop will be good or bad will be an important question as the A14 based laptops hit the market.

People use such to browse the web yes, we agree there, a light use case most do on their phones .

You have failed to address the point that there are three newer generations of intel chip with an architectural change on the way.

And none of you have explained how they can stay on the latest low power mode yet somehow mythically clock as high as a high power device.

All while arguing against your test being lightweight and irrelevant, which you just agreed it's use case typically is.

Look at what silicon they will make it on ,the price they want to hit and you get a CX8 8 core 4/4 hybrid.

Great.

My facts are facts. Your "discussion" is buillshit hyperbole written as a spewing pile of one-sentence long paragraphs.

--------

Since you're clearly unable to take down my side of the discussion, I'll "attack myself" on behalf of you.

SIMD units are incredibly important to modern consumer workloads. From Photoshop, to Video Editing, to audio encoding, multimedia is very commonly consumed AND produced even by the most casual of users. With only 3 FP/Vector pipelines of 128-bit width, the Apple A12 (and future chips) will simply be handicapped in this entire class of important benchmarks. Even worse: these 128-bit SIMD units are hampered by longer latencies (2-clocks) compared to Intel's 512-bit wide x 1-clock latency (or AMD Zen2's 256-bit wide by 1-clock latency).

Future users expecting strong multimedia performance: be it Photoshop filters, messing with electronic Drums or other audio-processing, and simple video editing, will simply be unable to compete against current generation x86 systems, let alone next-gen's Zen3 or Icelake.

The Fact that the main use case You posited for your benchmark viability was web browser action!.

Getting technical Intel have foveros and FPGA tech, as soon as they have a desktop CPU with an FPGA and HBM, it could be game over so far as benches go against anything on anything, enabled by one API.

Power pc simply are in another league.

AMD will iterate core count way beyond apples horizon and incorporate better fabrics and Ip..

And despite it all most will still just surf on their phones.

And less not more people will do real work on ios.

I'm getting off this roundabout, are opinions differ let's see where five years gets us.

You don't answer any questions just epeen your leet Dev skills like we should give a shit.

We shouldn't , it's irrelevant to me what they/you like to use , it only matters to me and 99% of the public what we want to do, and our opinions differ on the scale of variability of workload here it seems.

Bye.

I actually looked up GFXbench, which is cross-platform and fairly well regarded and there is no known bias to any platform.

gfxbench.com/device.jsp?benchmark=gfx50&os=iOS&api=metal&cpu-arch=ARM&hwtype=iGPU&hwname=Apple%20A12Z%20GPU&did=83490021&D=Apple%20iPad%20Pro%20(12.9-inch)%20(4th%20generation)

gfxbench.com/device.jsp?benchmark=gfx50&os=Windows&api=dx&cpu-arch=x86&hwtype=dGPU&hwname=NVIDIA%20GeForce%20GTX%201060%206GB&did=36085769&D=NVIDIA%20GeForce%20GTX%201060%206GB

I am comparing the A12Z (from the 4th gen Ipad pro , faster than the A12X) to the 1060

For Aztec high offscreen, the most demanding test, A12Z recorded 133.8 vs 291.1 on the GTX1060.

So my question to you is, are you expecting the A14X to more than double in graphics performance?

FPGAs are damn hard to program for. Not only is Verilog / VHDL rarely taught, synthesis takes a lot longer than compiles. OpenCL / CUDA is far simpler, honest. We'll probably see more from Intel's Xe than from FPGAs.

Not to mention, high-end FPGAs are like $4000+ if we're talking things competitive vs GPUs or CPUs.