Tuesday, August 18th 2020

Apple A14X Bionic Rumored To Match Intel Core i9-9880H

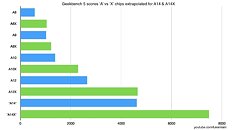

The Apple A14X Bionic is an upcoming processor from Apple that is expected to feature in the next iPad Pro models and should be manufactured on TSMC's 5 nm node. Tech YouTuber Luke Miani has recently published a performance graph for the A14X based on "leaked/suspected A14 info + average performance gains from previous X chips". In these graphs, the A14X can be seen matching the Intel Core i9-9880H in Geekbench 5 with a score of 7480. The Core i9-9880H is a 45 W eight-core mobile CPU found in high-end notebooks such as the 2019 16-inch MacBook Pro, and it requires significant cooling to keep thermals under control.
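Miani's exact method is not published, but the quoted description suggests a simple scaling. The following is a minimal sketch, assuming the projection is the base A14 score multiplied by the average ratio between each previous X chip and its base chip. The function names and input format are hypothetical, and no real scores are included; you would have to supply your own.

```python
from statistics import mean


def average_x_uplift(pairs):
    """Average ratio of an X-chip score to its base chip's score.

    pairs: list of (base_score, x_score) tuples, e.g. (A12, A12X)
    results from the same benchmark, supplied by the reader.
    """
    return mean(x / base for base, x in pairs)


def project_x_score(base_score, pairs):
    """Hypothetical projection: base-chip score times the average X uplift."""
    return base_score * average_x_uplift(pairs)
```

A projection like this inherits every error in the "leaked/suspected" base score and assumes the next X chip scales like the previous ones, which is why it is an estimate at best.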

If these performance estimates are correct, or even close, Apple will have a serious productivity device, and the chip will serve as a strong basis for Apple's transition to custom CPUs for its MacBooks in 2021. According to Luke Miani, Apple may use a custom version of the A14X with slightly higher clocks in its upcoming ARM MacBooks. These results are estimates at best, so take them with a pinch of salt until Apple officially unveils the chip.

Source:

@LukeMiani

85 Comments on Apple A14X Bionic Rumored To Match Intel Core i9-9880H

It's like @theoneandonlymrk says, again, eloquently... these highly specific workloads say little about overall CPU performance. Having a CPU repeatedly do the same task is not a real measure of overall performance; it's a measure of its performance in that specific workload. If you do that across different architectures, the comparison is off. You need a full, well-rounded suite of benchmarks to get a real handle on overall performance between different CPUs. It's irrelevant that the chip can repeat that test a million times over. It's still a burst-mode, workload-specific view, and not the whole picture.

It's just that simple. Apple isn't smarter than the rest. They have specialized in very specific workloads, specific devices, and specific use cases. That is why any sort of advanced user/system modding on Apple is nigh impossible, and if it IS possible, Apple has carefully prepared the path you need to walk for it. This is a company that manages your user experience. On most other (non-mobile) OSes, the situation is reversed: you get an OS with lots of tools and have fun with it; the only thing you can't touch is the kernel... unless you try harder.

The new direction for Apple, and I've said it as a joke, but it's really not one... terminals. ARM and the chip Apple has created are fantastic for logging in and getting the heavy lifting done off-site. Cloud. Apple's been big on it, and they'll go bigger. They are drooling all over Chromebooks because, imagine the margins! They can sell an empty shell with an internet connection that 'feels' like a true Apple device, include barely any hardware, and still charge the Apple premium on it.

That is what the ARM push is about, alongside another step forward in full IP ownership of soft- and hardware.

So now we have the SPECint2006 AND Geekbench 4 suites, where the A12 Vortex is crushing single-threaded performance. That's just the reality of the A12 chip. The results speak for themselves: the A12 Vortex at 2.5 GHz outright beats the Xeon in 75% of the SPECint2006 suite.
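"Beats the Xeon in 75% of the suite" is a win count, which is a different statistic from the headline SPEC number: SPEC CPU2006 summarizes a suite with the geometric mean of per-benchmark ratios. A minimal sketch computing both from per-subtest scores; the function name and the dict input format are assumptions, and no real results are included.

```python
from math import prod


def compare_suite(scores_a, scores_b):
    """Compare two CPUs over the subtests both were run on.

    scores_a, scores_b: dicts mapping subtest name -> score (higher is better).
    Returns (fraction of subtests where A beats B,
             geometric mean of the A/B score ratios).
    """
    names = scores_a.keys() & scores_b.keys()
    if not names:
        raise ValueError("no common subtests")
    ratios = [scores_a[n] / scores_b[n] for n in names]
    wins = sum(r > 1 for r in ratios)
    geomean = prod(ratios) ** (1 / len(ratios))
    return wins / len(ratios), geomean
```

The two numbers can disagree: a chip can win most subtests by small margins and lose one by a large margin, so the win fraction and the geometric mean are worth reporting together.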

Yeah, the A12 is really good at 64-bit single-threaded code. Surprisingly good. (Note: H.264 is typically implemented with SIMD instructions. SPECint2006's H.264 test, h264ref, is a 64-bit reference implementation, so this doesn't really test H.264 as it is used in practice.)

----------

Look, I don't even own an iPhone. I don't give a care about iPhones, and I don't plan to deal with any of Apple's walled garden bullshit. I don't like their business model, I don't like Apple. I don't like their stupid reality distortion fields.

But I've seen the benchmarks. Their A12 chip is pretty damn good in single-threaded performance. As a CPU nerd, that makes me intrigued and interested. But not really enough to buy an iPhone yet.

The Xeon 8176 has one of the best SIMD vector units on the market. So yes, it would be "more fair" to let the Xeon use its SIMD units to the degree that is convenient (i.e., GCC's autovectorizer), as long as intrinsics and/or hand-crafted assembly aren't being used. A few memcpy or strcmp calls here and there might get a bit faster, but I don't expect any dramatic improvement in the Xeon's speed.

---------

EDIT: I can't find a benchmark run of the Xeon 8176 configured exactly as we'd like. The closest run I found is: www.spec.org/cpu2006/results/res2017q3/cpu2006-20170710-47735.html

This runs 112 identical copies of the benchmarks across 56 cores (2 threads per core). Divide the score by 56 to get a "pseudo-single-core" score. And yes, AVX512 is enabled (-xCORE-AVX512). None of the SPECint2006 scores vary dramatically from AnandTech's single-threaded results.
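The normalization above is a single division. A minimal sketch, with a hypothetical function name; the core count of 56 comes from the linked run, and any rate score you pass in is your own input.

```python
def pseudo_single_core(rate_score, cores=56):
    """Approximate a per-core score from a SPECrate-style throughput result.

    Divides total throughput evenly across physical cores. Each core here
    ran 2 copies via SMT and all cores share caches and memory bandwidth,
    so this is only a rough stand-in for a true single-threaded score.
    """
    if cores <= 0:
        raise ValueError("cores must be positive")
    return rate_score / cores
```

Dividing by cores rather than by the 112 copies keeps the SMT benefit in each core's share, which is closer to what one core can do on its own than a per-copy figure would be.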

I don't think AVX512 will matter too much on 64-bit oriented code like the SPECInt2006 suite.

The Surface Book 2 with a GTX 1060 scores 330k in 3DMark Ice Storm, and the iPad Pro's A12Z scores 220k (that's the GPU test; in the overall score the iPad's CPU is faster than the Surface's, which makes Apple look even better). So they only need 50 percent more performance.

Considering that the A12X/Z are basically two years old, I think Apple can more than add 50 percent to GPU performance. I'm actually expecting 1.5x for the iPad and 2x for the ARM Mac models, at least. That would also be enough to beat their existing models, like the Radeon Pro 5500M.
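The arithmetic behind "only need 50 percent more" and the 1.5x/2x expectations can be checked directly. A minimal sketch using the two Ice Storm GPU scores quoted above (in thousands of points); the function names are hypothetical, and applying a GPU speedup factor straight to an Ice Storm score assumes the benchmark scales linearly, which it may not.

```python
# Ice Storm GPU scores quoted above, in thousands of points.
SURFACE_GTX1060_K = 330
A12Z_K = 220


def required_speedup(target, current):
    """Factor by which `current` must be multiplied to reach `target`."""
    return target / current


def projected_score(current, factor):
    """Score after applying a hypothetical speedup factor."""
    return current * factor
```

With these inputs, `required_speedup(SURFACE_GTX1060_K, A12Z_K)` gives 1.5, so a 1.5x iPad chip would land at roughly 330k and a 2x Mac chip at roughly 440k, under the linear-scaling assumption.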

(The 2020 iPad Pro's A12Z has twice the CPU performance of the A10X from 2017, and is 50 percent faster GPU-wise.)

www.anandtech.com/show/13661/the-2018-apple-ipad-pro-11-inch-review/6 (I see you got the results from here)

FYI that is a really old test, based on rather ancient APIs.

If you look at AnandTech's other mobile SoC benchmarks, GFXBench Aztec Ruins is used a lot more often, and he also calculates fps/W for those results, so I would think that's a better comparison.
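The fps/W figure mentioned above is an efficiency metric: frame rate divided by power draw. A minimal sketch, assuming both inputs are averages taken over the same benchmark run; the function name is hypothetical.

```python
def fps_per_watt(avg_fps, avg_power_w):
    """Energy efficiency of a GPU run: average frames per second per watt."""
    if avg_power_w <= 0:
        raise ValueError("power must be positive")
    return avg_fps / avg_power_w
```

Normalizing by power matters when comparing a fanless tablet SoC with a laptop GPU, since raw fps alone hides how much more power the laptop part is allowed to draw.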

Anyway, I guess the bottom line is that you think it will get a 50% performance boost over the A12X/Z. I have no qualms with that, and I think it's reasonable. I am still not convinced that it will match the laptop variant of the 1070, though, unless that one is extremely thermally constrained.

Also it gets complicated if we are talking about the iPad version, or the presumably much stronger desktop versions.

"Also it gets complicated if we are talking about the iPad version, or the presumably much stronger desktop versions."

Your original statement was that the new iPad can easily run games from PS4/Xbox and be faster than a 1070. Don't move the goalposts here.

The way you described ALU performance, as if it excels at a certain kind of graphical workload, just tells me you fail to understand that it's still part of rasterization performance. I'm not sure why you can't just concede that you made a groundless claim, and instead choose to double down with that ALU comment.