Monday, January 8th 2018

NVIDIA GeForce 390.65 Driver with Spectre Fix Benchmarked in 21 Games

The Meltdown and Spectre vulnerabilities have been making many headlines lately. So far, security researchers have identified three variants. Variant 1 (CVE-2017-5753) and Variant 2 (CVE-2017-5715) are Spectre, while Variant 3 (CVE-2017-5754) is Meltdown. According to its security bulletin, NVIDIA has no reason to believe that its display driver is affected by Variant 3. To strengthen security against Variants 1 and 2, the company released its GeForce 390.65 driver earlier today, so NVIDIA graphics card owners can sleep better at night.

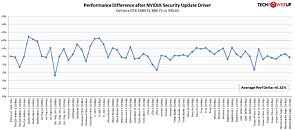

Experience tells us that some software patches come with performance hits, whether we like it or not. We were more than eager to find out if this was the case with NVIDIA's latest GeForce 390.65 driver. Therefore, we benchmarked this revision against the previous GeForce 388.71 driver in 21 different games at 1080p, 1440p, and 4K resolutions. We even threw in an Ethereum mining test for good measure. Our test system is powered by an Intel Core i7-8700K processor overclocked to 4.8 GHz, paired with G.Skill Trident-Z 3866 MHz 16 GB memory on an ASUS Maximus X Hero motherboard. We're running the latest BIOS, which includes fixes for Spectre, and Windows 10 64-bit with Fall Creators Update, fully updated, which includes the KB4056891 Meltdown fix.

We grouped all 21 games, each at three resolutions, into a single chart. Each entry on the X axis is a single test, showing the percentage difference between the old and new driver. Negative values stand for a performance decrease when using today's driver, positive values for a performance gain.

Cryptominers can rest assured that the new GeForce 390.65 driver won't affect their profits negatively. Our testing shows zero impact in Ethereum mining. With regard to gaming, there is no significant difference in performance either. The new driver actually gains a little bit of performance on average over the previous version (+0.32%). The results hint at some undocumented small performance gains in Wolfenstein 2 and F1 2017; the other games are nearly unchanged. Even if we exclude those two titles, the performance difference is still +0.1%. The variations that you see in the chart above are due to random effects and the limited precision of measurements taken in Windows. For the kind of testing done in our VGA reviews, we typically expect a 1-2% margin of error between benchmark runs, even when using the same game, at identical settings, on the same hardware.
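
For readers who want to run the same kind of comparison on their own numbers, here is a minimal Python sketch of the arithmetic described above: the per-test percentage difference between the old and new driver, the average across tests, and a check for which tests fall outside an assumed noise margin. The function names, the 1% threshold, and the FPS figures are all hypothetical placeholders for illustration; they are not our measured results or our actual tooling.

```python
from statistics import mean


def percent_change(old_fps: float, new_fps: float) -> float:
    """Change of the new driver relative to the old one, in percent.

    Negative means the new driver is slower, positive means it is faster.
    """
    if old_fps <= 0:
        raise ValueError("old_fps must be positive")
    return (new_fps - old_fps) / old_fps * 100.0


def summarize(results: dict, noise_margin: float = 1.0):
    """Return per-test changes, their average, and tests outside the margin.

    `results` maps a test name to an (old_fps, new_fps) pair.
    `noise_margin` is an assumed run-to-run variation in percent.
    """
    changes = {
        name: percent_change(old, new) for name, (old, new) in results.items()
    }
    average = mean(changes.values())
    outside = sorted(n for n, c in changes.items() if abs(c) > noise_margin)
    return changes, average, outside


# Hypothetical FPS pairs (old driver, new driver), NOT measured data.
sample = {
    "Game A 1080p": (100.0, 100.5),
    "Game B 1440p": (80.0, 79.6),
    "Game C 4K": (50.0, 51.5),
}

changes, average, outside = summarize(sample)
for name, change in changes.items():
    print(f"{name}: {change:+.2f}%")
print(f"average: {average:+.2f}%")
print("outside assumed noise margin:", outside)
```

Averaging the per-test percentages, rather than the raw FPS values, keeps high-FPS titles from dominating the result; that is a design choice of this sketch, and other aggregation methods are equally defensible.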

42 Comments on NVIDIA GeForce 390.65 Driver with Spectre Fix Benchmarked in 21 Games

Unless something has changed since then, I read a story where they used Doom for a GTX 1060 vs. RX 480 test. With a high-end CPU both cards were close, with I think the 480 a hair ahead. But as they went down the line with slower CPUs, the performance of the GTX 1060 stayed consistent, whereas the RX 480 lost a bit of fps. This was a while ago, and I'm sure AMD has worked on that and made it better, but it does prove that a GPU driver, if not optimized, can be harmed a bit by the CPU being slowed down.

In other words, everything you do on your computer gets stored in VRAM. This wasn't cleared the moment you closed or minimized the application.

I'm sure this and a few other fixes. If you were banking or whatever, something with codes or sensitive information, I'm sure this gets saved in VRAM as well; the patch is written to prevent stealing data from one instance to another in VRAM.

Raja will save the day.

meltdownattack.com/

Read, learn. Stop spreading misinformation. GPUs are not affected by these vulnerabilities.

The project zero team devised an attack reading Intel manuals, they also looked at the rowhammer attack & took it as a stepping stone to make this work!

TRIPLE MELTDOWN: HOW SO MANY RESEARCHERS FOUND A 20-YEAR-OLD CHIP FLAW AT THE SAME TIME

www.blackhat.com/docs/us-16/materials/us-16-Jang-Breaking-Kernel-Address-Space-Layout-Randomization-KASLR-With-Intel-TSX.pdf

So you've got nothing to counter & you choose to shut your eyes & ears? The twit tells us about possible side channel attacks that could work on x86-64.

lwn.net/Articles/738975/

gruss.cc/files/kaiser.pdf

Side channel attacks could affect every piece of hardware out there. Also what did Nvidia patch if there's nothing to patch in there, explain that?

edit - You must've also missed unified memory in CUDA then, starting with CUDA 6 IIRC?

devblogs.nvidia.com/parallelforall/unified-memory-in-cuda-6/

Ok now that's a bit different. KASLR is a very specific and complex attack. It's not easily carried out to begin with. Not sure how it will relate to Meltdown and Spectre, but the complexity would likely become exponential.

EDIT, I was thinking about something else when I saw that name. It seems KASLR and Kaiser are one and the same. However, this still doesn't change the fact that GPUs are not directly affected by MLTDWN&SPCTR.

Twitter is a convoluted mess most of the time and I will not waste my time with it.

Didn't really read up on Kaiser too much as it seemed easily fixed and somewhat limited to the Linux sector. But I did gloss over that pdf and am not seeing the connection between it, Meltdown, Spectre and GPUs.

Perhaps, but they are notoriously difficult to pull off. Most attackers either won't or can't successfully harvest usable data from such an attack. The best most could hope for is to crash the target system.

IIRC, Nvidia's latest patch release had nothing to do with MLTDN&SPCTR. Do you have a link? Google is giving me nothing.

The GPU uarch might not have the same spectre or meltdown vulnerability, but we don't know if there are similar design flaws - which could in theory affect them, simply by studying the architecture in detail.

This is what is important, a design flaw enabled 4 different teams to find the same vulnerabilities. So in essence it's just a matter of looking at the uarch long enough & hard enough, I'm not passing any judgement but I'm not ruling it out either.

From TPU ~