Friday, March 6th 2020

AMD RDNA2 Graphics Architecture Detailed, Offers +50% Perf-per-Watt over RDNA

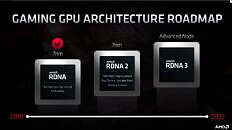

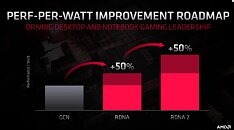

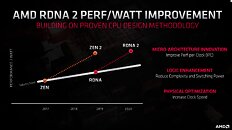

With its 7 nm RDNA architecture that debuted in July 2019, AMD achieved a nearly 50% gain in performance/Watt over the previous "Vega" architecture. At its 2020 Financial Analyst Day event, AMD made a big disclosure: that its upcoming RDNA2 architecture will offer a similar 50% performance/Watt jump over RDNA. The new RDNA2 graphics architecture is expected to leverage 7 nm+ (7 nm EUV), which offers up to 18% transistor-density increase over 7 nm DUV, among other process-level improvements. AMD could tap into this to increase price-performance by serving up more compute units at existing price-points, running at higher clock speeds.

AMD has two key design goals with RDNA2 that helps it close the feature-set gap with NVIDIA: real-time ray-tracing, and variable-rate shading, both of which have been standardized by Microsoft under DirectX 12 DXR and VRS APIs. AMD announced that RDNA2 will feature dedicated ray-tracing hardware on die. On the software side, the hardware will leverage industry-standard DXR 1.1 API. The company is supplying RDNA2 to next-generation game console manufacturers such as Sony and Microsoft, so it's highly likely that AMD's approach to standardized ray-tracing will have more takers than NVIDIA's RTX ecosystem that tops up DXR feature-sets with its own RTX feature-set.Variable-rate shading is another key feature that has been missing on AMD GPUs. The feature allows a graphics application to apply different rates of shading detail to different areas of the 3D scene being rendered, to conserve system resources. NVIDIA and Intel already implement VRS tier-1 standardized by Microsoft, and NVIDIA "Turing" goes a step further in supporting even VRS tier-2. AMD didn't detail its VRS tier support.

AMD hopes to deploy RDNA2 on everything from desktop discrete client graphics, to professional graphics for creators, to mobile (notebook/tablet) graphics, and lastly cloud graphics (for cloud-based gaming platforms such as Stadia). Its biggest takers, however, will be the next-generation Xbox and PlayStation game consoles, who will also shepherd game developers toward standardized ray-tracing and VRS implementations.

AMD also briefly touched upon the next-generation RDNA3 graphics architecture without revealing any features. All we know about RDNA3 for now, is that it will leverage a process node more advanced than 7 nm (likely 6 nm or 5 nm, AMD won't say); and that it will come out some time between 2021 and 2022. RDNA2 will extensively power AMD client graphics products over the next 5-6 calendar quarters, at least.

AMD has two key design goals with RDNA2 that helps it close the feature-set gap with NVIDIA: real-time ray-tracing, and variable-rate shading, both of which have been standardized by Microsoft under DirectX 12 DXR and VRS APIs. AMD announced that RDNA2 will feature dedicated ray-tracing hardware on die. On the software side, the hardware will leverage industry-standard DXR 1.1 API. The company is supplying RDNA2 to next-generation game console manufacturers such as Sony and Microsoft, so it's highly likely that AMD's approach to standardized ray-tracing will have more takers than NVIDIA's RTX ecosystem that tops up DXR feature-sets with its own RTX feature-set.Variable-rate shading is another key feature that has been missing on AMD GPUs. The feature allows a graphics application to apply different rates of shading detail to different areas of the 3D scene being rendered, to conserve system resources. NVIDIA and Intel already implement VRS tier-1 standardized by Microsoft, and NVIDIA "Turing" goes a step further in supporting even VRS tier-2. AMD didn't detail its VRS tier support.

AMD hopes to deploy RDNA2 on everything from desktop discrete client graphics, to professional graphics for creators, to mobile (notebook/tablet) graphics, and lastly cloud graphics (for cloud-based gaming platforms such as Stadia). Its biggest takers, however, will be the next-generation Xbox and PlayStation game consoles, who will also shepherd game developers toward standardized ray-tracing and VRS implementations.

AMD also briefly touched upon the next-generation RDNA3 graphics architecture without revealing any features. All we know about RDNA3 for now, is that it will leverage a process node more advanced than 7 nm (likely 6 nm or 5 nm, AMD won't say); and that it will come out some time between 2021 and 2022. RDNA2 will extensively power AMD client graphics products over the next 5-6 calendar quarters, at least.

306 Comments on AMD RDNA2 Graphics Architecture Detailed, Offers +50% Perf-per-Watt over RDNA

This will have GDDR6, not HBM, so the high power is welcome and good for us now.

I would not want a 400w card. I just wouldn't.

this 2 fan vaporchamber leaked design of the rtx 3080 looks very interesting for example, i bet it could cool 400 watts. all those fins :D

So no, this is BS. 400W isn't happening.

No technical problem or difficulty. Price and performance will be the decisive factors.

www.techpowerup.com/review/amd-r9-295-x2/

www.techpowerup.com/review/amd-radeon-rx-5700-xt/28.html

If you give me double the wattage then at a minimum I am expecting double the performance. To be impressed, you have to give me double the performance at significantly less than double the wattage or double the wattage and significantly more than double the performance. Besides, if they (AMD) achieve double performance of a 5700XT, then they will be at roughly the expected performance of Ampere...but.....at significantly more power usage. I, for one, don't want to dump 400watts of heat into my office that is already 28C because it is summer. I don't care about the power draw itself, I care about working/gaming in a sauna.

In which country do you live?